ICCK Transactions on Intelligent Systematics

ISSN: 3068-5079 (Online) | ISSN: 3069-003X (Print)

Email: [email protected]

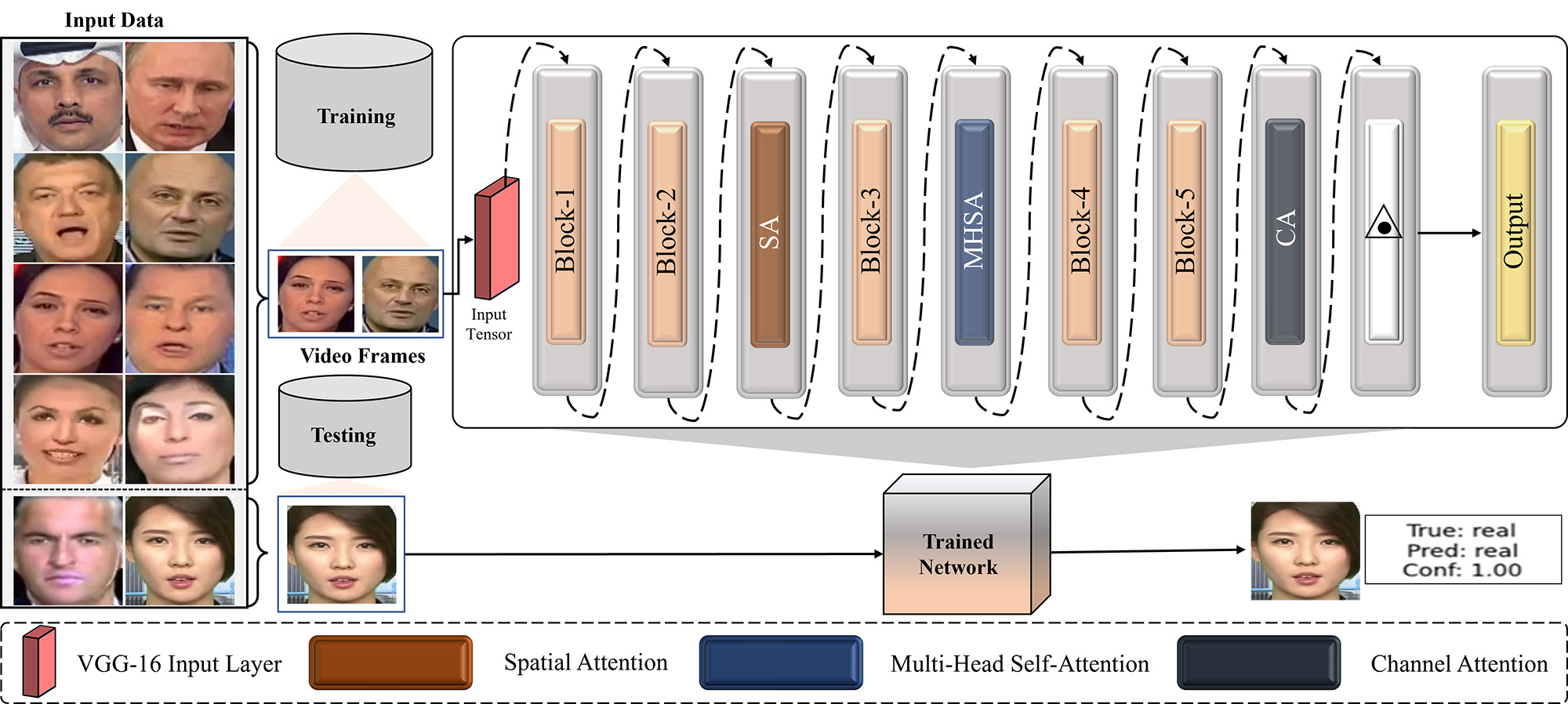

TY  - JOUR
AU  - Ali, Farhan
AU  - Ghazanfar, Zainab
PY  - 2025
DA  - 2025/11/24
TI  - Enhanced Deepfake Detection Through Multi-Attention Mechanisms: A Comprehensive Framework for Synthetic Media Identification
JO  - ICCK Transactions on Intelligent Systematics
T2  - ICCK Transactions on Intelligent Systematics
JF  - ICCK Transactions on Intelligent Systematics
VL  - 2
IS  - 4
SP  - 248
EP  - 258
DO  - 10.62762/TIS.2025.756872
UR  - https://www.icck.org/article/abs/TIS.2025.756872
KW  - deepfake detection
KW  - multi-head self-attention
KW  - synthetic media detection
KW  - facial manipulation detection
AB  - The proliferation of deepfake technology poses significant threats to digital media authenticity, necessitating robust detection systems to combat manipulated content. This paper presents a novel attention-based framework for deepfake detection that systematically integrates multiple complementary attention mechanisms to enhance discriminative feature learning. Our approach combines spatial attention, multi-head self-attention, and channel attention modules with a VGG-16 backbone to capture comprehensive representations across different feature spaces. The spatial attention mechanism focuses on discriminative facial regions, while multi-head self-attention captures long-range spatial dependencies and global contextual relationships. Channel attention further refines feature representations by emphasizing the most informative channels for detection. Extensive experiments on FaceForensics++ and Celeb-DF datasets demonstrate the effectiveness of our progressive attention integration strategy. The proposed framework achieves competitive performance with 92.67% accuracy and 99.30% Area Under the Curve (AUC) on FF++, while maintaining solid generalization capabilities with 82.35% accuracy and 82.7% AUC on the challenging Celeb-DF dataset. Comprehensive ablation studies validate the contribution of each attention component and justify key design choices, including the optimal 3×3 kernel size for spatial attention. Comparison with state-of-the-art methods demonstrates that our approach achieves competitive detection performance while maintaining architectural simplicity and computational efficiency. The modular design of our framework provides interpretability and flexibility for deployment across various computational environments, making it suitable for practical artificial media detection applications.
SN  - 3068-5079
PB  - Institute of Central Computation and Knowledge
LA  - English
ER  -

@article{Ali2025Enhanced,
  author = {Farhan Ali and Zainab Ghazanfar},
  title = {Enhanced Deepfake Detection Through Multi-Attention Mechanisms: A Comprehensive Framework for Synthetic Media Identification},
  journal = {ICCK Transactions on Intelligent Systematics},
  year = {2025},
  volume = {2},
  number = {4},
  pages = {248-258},
  doi = {10.62762/TIS.2025.756872},
  url = {https://www.icck.org/article/abs/TIS.2025.756872},
  abstract = {The proliferation of deepfake technology poses significant threats to digital media authenticity, necessitating robust detection systems to combat manipulated content. This paper presents a novel attention-based framework for deepfake detection that systematically integrates multiple complementary attention mechanisms to enhance discriminative feature learning. Our approach combines spatial attention, multi-head self-attention, and channel attention modules with a VGG-16 backbone to capture comprehensive representations across different feature spaces. The spatial attention mechanism focuses on discriminative facial regions, while multi-head self-attention captures long-range spatial dependencies and global contextual relationships. Channel attention further refines feature representations by emphasizing the most informative channels for detection. Extensive experiments on FaceForensics++ and Celeb-DF datasets demonstrate the effectiveness of our progressive attention integration strategy. The proposed framework achieves competitive performance with 92.67\% accuracy and 99.30\% Area Under the Curve (AUC) on FF++, while maintaining solid generalization capabilities with 82.35\% accuracy and 82.7\% AUC on the challenging Celeb-DF dataset. Comprehensive ablation studies validate the contribution of each attention component and justify key design choices, including the optimal 3×3 kernel size for spatial attention. Comparison with state-of-the-art methods demonstrates that our approach achieves competitive detection performance while maintaining architectural simplicity and computational efficiency. The modular design of our framework provides interpretability and flexibility for deployment across various computational environments, making it suitable for practical artificial media detection applications.},
  keywords = {deepfake detection, multi-head self-attention, synthetic media detection, facial manipulation detection},
  issn = {3068-5079},
  publisher = {Institute of Central Computation and Knowledge}
}

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/