Chinese Journal of Information Fusion

ISSN: 2998-3371 (Online) | ISSN: 2998-3363 (Print)


In a sensor network, data collected from multiple sensors [1, 3, 4, 8] is synergistically fused to improve overall system performance [2, 5, 6, 7]. An important prerequisite for successful fusion is that the spatial and temporal biases in asynchronous multiple sensor systems must be estimated and compensated. Otherwise, these biases may cause tracking performance degradation, and even worse, may lead to duplicate tracks.

Spatial bias estimation and compensation have been under intensive investigation for decades, and various algorithms have been developed in the literature. In [9], the real time quality control (RTQC) routine is developed to compute the bias by averaging the measurements from each sensor. In [10, 11, 12], sensor registration is formulated as an ordinary or weighted least squares (LS) problem, and the sensor biases are then estimated using the LS technique. In [13], the exact maximum likelihood (EML) method is used to maximize the likelihood function of sensor measurements to obtain bias estimates. The method in [14] uses maximum likelihood registration (MLR) to solve the bias estimation problem of multiple dissimilar sensors. Another series of methods is based on filtering and uses the Kalman filter (KF), extended Kalman filter (EKF) and unscented Kalman filter (UKF) to obtain online spatial bias estimates [15, 16, 17, 18, 19]. In [16], the KF method is used to estimate the sensor system bias and the attitude bias, with the measurement noises taken into account. In [17], the EKF method is used to estimate the position and azimuth biases of distributed radars relative to the common reference coordinate system. The methods in [18, 19] use the augmented state Kalman filter (ASKF) to estimate augmented state vectors that include both the target states and the biases of multiple sensors, so that the two components can be jointly estimated.

All these methods make one fundamental assumption, namely that the time stamps of all the measurements accurately indicate the measurement times. In practical applications, there may be unknown time delays between the time stamps and the true measurement times due to the latency of signal processing and/or data transfer. The time stamps therefore cannot always be used as reliable time references to correctly fuse the measurements from multiple sensors, which leads to the temporal bias problem. The temporal bias must be accurately estimated and compensated, which is the focus of this paper.

Several algorithms have been developed to solve the temporal bias problem in offline mode. In [20, 21, 22], the temporal bias problem is considered for different combinations of sensors. The time stamps and the unknown time delays are used to represent the true measurement times of the sensors, and the measurement equations are formulated accordingly. The objective function of each sensor is built using the measurement error terms and the relevant covariances. The Levenberg-Marquardt (LM) algorithm [23] is used to find the ML estimates of the temporal bias and other unknown parameters by minimizing the sum of the objective functions. In [24], a generalized LS method is used to estimate the radar spatial bias and the ADS-B temporal bias, where the radar has accurate time stamps while the transmitting time of the ADS-B data packets is unavailable. The two sensors need to have the same sampling period for this method to perform correctly. These methods [20, 21, 22, 24] do not consider the case where all sensors have unknown time delays. In [25], a multisensor time-offset estimation method is proposed for different time-offset statistical models and target dynamic models. This method assumes that the sensors are spatially unbiased and only estimates the temporal offset offline, without compensation for accurate data fusion. These offline methods use the estimated bias as prior information to calibrate the sensors. The drawback is that the bias may change each time the system is started, so the sensors have to be recalibrated.

In [26], an online method is proposed to estimate the temporal bias between a camera and an inertial measurement unit (IMU). The time stamps and the temporal bias estimates are used to represent the actual measurement times of the camera. However, due to temporal bias estimation errors, the camera measurements are inevitably processed at incorrect time instants, which may cause errors in the initial stage. Moreover, the sensor spatial bias is not considered in this method. In [27, 28, 29], the spatial bias and the temporal bias are jointly considered for different combinations of multiple dissimilar sensors. Three spatiotemporal bias estimation methods, based on the EKF, UKF and expectation-maximization-EKF (EM-EKF), respectively, are proposed to estimate the spatiotemporal biases and target states simultaneously. Since both biases may exist in practical multisensor systems, it is desirable to incorporate both spatial and temporal biases into the system models. However, these three methods consider only the specific case where the sensors have the same sampling period. In most real applications, the sensors may not sample at the same times or with the same intervals.

In this paper, the problem of simultaneous spatiotemporal bias compensation and data fusion is investigated for practical multisensor systems in which the sampling periods of the sensors may be different and varying. Our previous papers [30, 31] on spatiotemporal bias estimation discussed the particular case where sensors have constant sampling periods. This paper is a significant extension of the previous work to the general case with varying sampling periods. We regard the difference between the time stamp interval and the true measurement interval of measurements from different sensors as the temporal bias, which is caused by the existence of unknown time delays. First, an augmented state equation combining the target state and spatiotemporal bias is formulated. Multisensor measurements are then collected in batch processing or sequential processing schemes to estimate the augmented state vector, resulting in two spatiotemporal bias compensation methods. In the batch processing scheme, multiple measurements from all sensors between two consecutive reference time instants are collected in a measurement vector to update the augmented state vector. We use the time stamp intervals and the temporal biases to represent the true measurement intervals, and an accurate relationship between measurements and states is established. In the sequential processing scheme, each measurement from each sensor is processed as soon as it becomes available. Because the true measurement intervals are unavailable, the time stamp intervals are used to formulate the state transition and the temporal bias is used to align the measurements with the target states in the measurement equations. In both processing schemes, the measurements are formulated as functions of both target states and spatiotemporal biases. This enables extraction of both spatial and temporal biases from the measurements.
The UKF is used to handle the nonlinearity of the measurements to simultaneously estimate spatiotemporal biases and target states. The contributions of this paper can be summarized as follows:

The multisensor system with spatiotemporal bias is investigated and the time delay difference between the sensors is regarded as the temporal bias to be compensated for proper fusion of the measurements from the sensors.

The feasible state transitions are presented for the multisensor system without exactly known measurement interval.

The measurement equations are formulated to correctly describe the relationship between the measurements and the states with biased time.

Two spatiotemporal bias compensation methods are proposed to simultaneously estimate the biases and target states, one in batch processing scheme and the other in sequential processing scheme.

The posterior Cramer-Rao lower bound [32, 33] (PCRLB) is derived to quantify the best achievable performance.

The rest of the paper is organized as follows. In Section 2, the problem of spatiotemporal bias compensation and data fusion in asynchronous multisensor systems is formulated. In Section 3, the spatiotemporal bias compensation methods are presented in detail. The PCRLB of spatiotemporal bias and target state estimation is derived in Section 4. Section 5 presents the simulation results, followed by conclusions in Section 6.

Consider a centralized system with sensors that provide two-dimensional measurements in polar coordinates, namely range and azimuth measurements. The nearly constant velocity (NCV) motion model [34] of the target is used throughout the paper. The target state vector is described as

where and are the positions in the direction of x and y, respectively, and are the corresponding velocities. Note that other target motion models can be handled seamlessly within the proposed methods. The target state equation is described as

where is the zero-mean Gaussian white process noise with known covariance , is the state transition matrix, and is the process noise gain matrix. The sensor reports range measurement and azimuth measurement at a rate, which may vary. The subscript denotes the sensor index and stands for the index of the measurement time . The measurement equation is given by

where denotes the position of sensor , and represents the zero-mean Gaussian white measurement noise with known covariance , which is given as

where and denote the standard deviations of range and azimuth measurement noises, respectively.
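The NCV dynamics and the polar measurement model described above can be sketched numerically as follows. This is only an illustrative sketch: the state ordering `[x, vx, y, vy]`, the azimuth convention (angle from the x axis), the function names and all numerical values are assumptions, not taken from the paper.

```python
import numpy as np

def ncv_matrices(T):
    """NCV transition and noise-gain matrices for the ASSUMED state [x, vx, y, vy]."""
    F1 = np.array([[1.0, T], [0.0, 1.0]])      # one-axis position/velocity block
    G1 = np.array([[0.5 * T**2], [T]])         # one-axis acceleration-noise gain
    F = np.kron(np.eye(2), F1)                 # 4 x 4, block diagonal
    G = np.kron(np.eye(2), G1)                 # 4 x 2
    return F, G

def polar_measurement(state, sensor_pos):
    """Noise-free range and azimuth of the target as seen from sensor_pos."""
    dx = state[0] - sensor_pos[0]
    dy = state[2] - sensor_pos[1]
    return np.array([np.hypot(dx, dy), np.arctan2(dy, dx)])

# Illustrative values only (not the simulation settings of the paper).
rng = np.random.default_rng(0)
F, G = ncv_matrices(2.0)
x = np.array([1000.0, 10.0, 2000.0, -5.0])
x_next = F @ x + G @ rng.normal(0.0, 0.1, size=2)            # process noise
z = polar_measurement(x_next, (0.0, 0.0)) + rng.normal(0.0, [10.0, 0.01])
```

The Kronecker product is used only to repeat the one-axis block for the x and y axes; a different state ordering would require permuting the rows and columns accordingly.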

In practical systems, spatial bias of sensor may exist, where and stand for range bias and azimuth bias, respectively. Range bias may be caused by internal circuit delay in the sensor, zero drift in the system, or velocity incorrectness of the distance clock. Azimuth bias is usually caused by the deviation that appears when the sensor antenna is aligned with due North [35]. Additionally, the time stamps tagged to the measurements may differ from the true times at which the target is observed. Time delays in the time stamps can arise for a number of reasons. For example, some sensors take the time when the measurements are produced as the time stamps. Due to the latency of signal processing and/or data transfer, there is a time delay between the true measurement time and the generation of its time stamp. Different sensors may have different time delays due to different processing or communication latencies. If the time stamps are used as the true measurement times to perform time alignment for data fusion, large errors and/or false correlations may result, even if there is no spatial bias. Therefore, in order to perform accurate data fusion, the spatiotemporal bias problem should be solved.

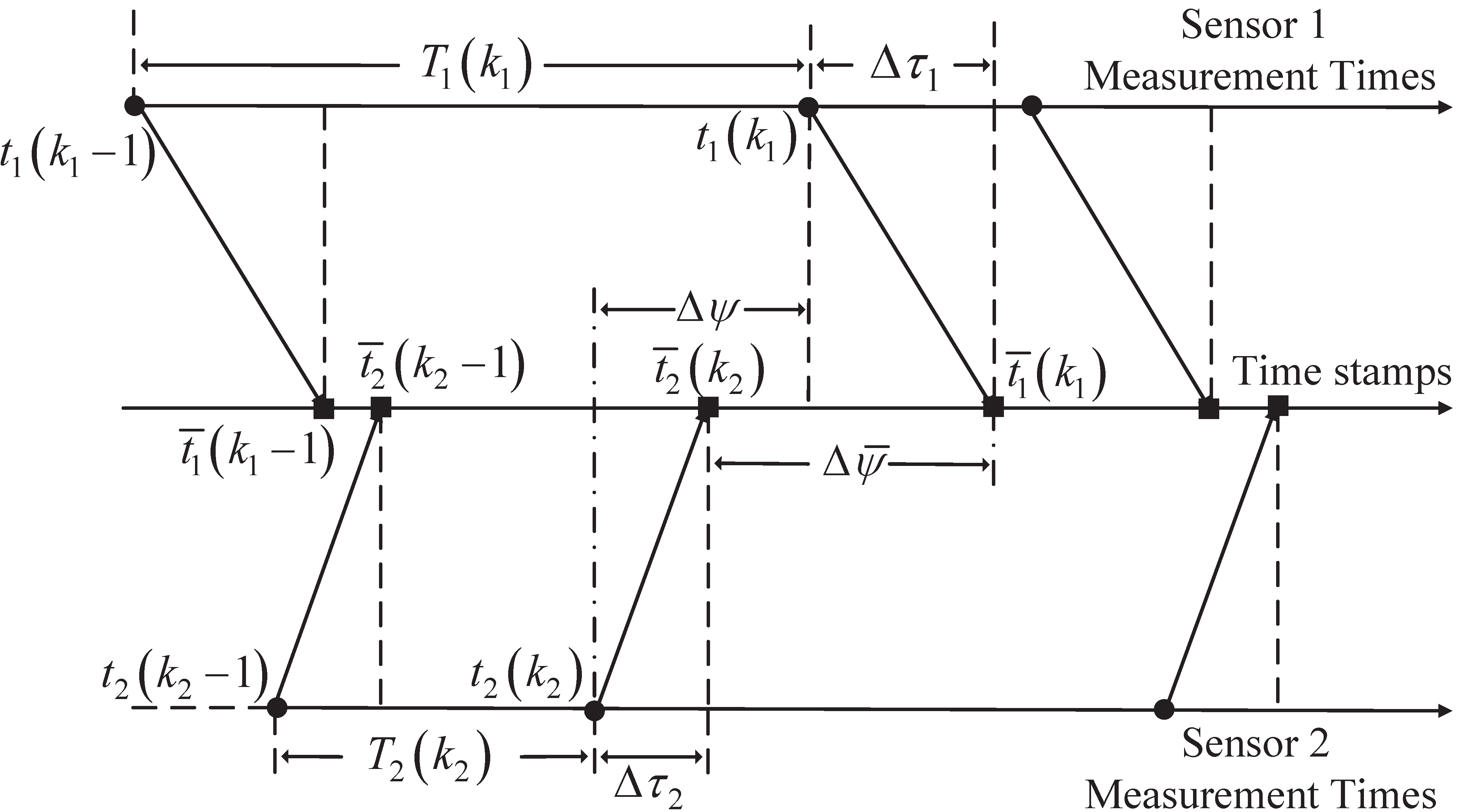

In this section, two spatiotemporal bias compensation methods are proposed for asynchronous multisensor systems. The general situation is considered, where sensors measure targets at different times with different and varying intervals. We take a two-sensor system as an example to present the time relationship among measurement times, time stamps and time delays, as illustrated in Figure 1.

In this figure, sensor measures target state with a varying period at the true measurement time , and the time stamp is . Due to signal processing or communication latencies, there may exist unknown time delay between the time stamps of sensor with respect to the true measurement times. This prevents the time stamps from being used directly to perform proper time alignment and data fusion. For example, to fuse the measurement from sensor 1 and the measurement from sensor 2, the true measurement interval between sensors is required. In practice, we only have the time stamp interval . The temporal bias, , between and should be compensated for accurate data fusion. Note that we can only compensate for the temporal bias between the two sensors instead of the time delay of each sensor. Without loss of generality, we take sensor as the reference sensor and regard as relative temporal bias of sensor with respect to the reference sensor for the general case with sensors.
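The relationship between time stamp intervals, time delays and true measurement intervals can be checked with a small numerical sketch. It assumes that each time stamp equals the true measurement time plus that sensor's delay and that the relative temporal bias is the delay of the other sensor minus the delay of the reference sensor; this sign convention, the function name and the numbers are illustrative assumptions.

```python
def true_interval(stamp_ref, stamp_other, delay_ref, delay_other):
    """True time elapsed from the reference measurement to the other measurement.

    ASSUMES stamp = true measurement time + delay for each sensor.
    """
    stamp_interval = stamp_other - stamp_ref
    temporal_bias = delay_other - delay_ref    # relative bias w.r.t. the reference
    return stamp_interval - temporal_bias

# Sensor 1 stamped at 10.0 s with a 0.1 s delay, sensor 2 at 10.8 s with a 0.4 s delay.
dt_a = true_interval(10.0, 10.8, 0.1, 0.4)

# Adding the same 5 s to both delays leaves the true interval unchanged, which is
# why only the relative temporal bias, not each individual delay, can be compensated.
dt_b = true_interval(10.0, 10.8, 5.1, 5.4)
```

In this example the time stamp interval is 0.8 s while the true interval is about 0.5 s, so fusing with the time stamps alone would misplace the target by roughly 0.3 s of motion.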

In order to obtain effective data fusion in the presence of both spatial and temporal biases, the idea in this paper is to augment the spatiotemporal biases as a part of state vector to be estimated along with target states. The augmented state vector is given as

where is the target base state vector, and is the spatiotemporal biases of sensors. consists of the spatial biases of sensors

and consists of the temporal biases of sensor with respect to sensor 1.

Assume that the spatiotemporal biases provided by a sensor at different time instants are constants. The augmented state equation of (1) can be given by

where the augmented state transition matrix and the augmented process noise gain matrix are respectively given as

where denotes a zero matrix, and denotes the identity matrix of order . Since the spatiotemporal biases are constants, there is no process noise with respect to the biases. The process noise in (3) and its covariance are the same as those of the process noise in (2). Assuming the target moves according to the NCV model, the state transition matrix of the target base state is

where is the time interval between two consecutive states, which is considered in the filter. In the following, two measurement processing schemes, i.e., batch processing and sequential processing schemes, will be presented. Normally, the true measurement interval of two consecutive measurements should be used as the time interval . Due to the existence of unknown time delays, the time stamp interval instead of the true measurement interval is used to formulate the state transition.
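A minimal sketch of the augmented matrices described above is given below. It assumes the state ordering `[x, vx, y, vy]` followed by the range and azimuth biases of every sensor and then the temporal biases of all non-reference sensors; this layout and the function name are assumptions for illustration only.

```python
import numpy as np

def augmented_matrices(T, n_sensors):
    """Augmented transition and noise-gain matrices for constant biases.

    ASSUMED layout: [x, vx, y, vy | 2 spatial biases per sensor |
                     one temporal bias per non-reference sensor].
    """
    F1 = np.array([[1.0, T], [0.0, 1.0]])
    G1 = np.array([[0.5 * T**2], [T]])
    F = np.kron(np.eye(2), F1)
    G = np.kron(np.eye(2), G1)
    n_bias = 2 * n_sensors + (n_sensors - 1)
    # Biases are constant: identity transition and no process noise.
    Fa = np.block([[F, np.zeros((4, n_bias))],
                   [np.zeros((n_bias, 4)), np.eye(n_bias)]])
    Ga = np.vstack([G, np.zeros((n_bias, 2))])
    return Fa, Ga

Fa, Ga = augmented_matrices(T=2.0, n_sensors=2)   # 9-dimensional augmented state
```

Here `T` would be the time stamp interval between two consecutive filter updates, in line with the discussion above.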

In the batch processing scheme, we choose sensor 1 as the reference sensor and update the state estimates using all the collected measurements whenever a measurement of sensor 1 is reported. Since the time delay of sensor 1 is constant, the measurement interval equals the time stamp interval . We can use to exactly represent .

In the sequential processing scheme, we estimate the augmented states once a measurement from one sensor is available, and the consecutive measurements may not originate from the same sensor. Since the time delays of sensors may be different, the true measurement interval is unavailable. In this case, the time stamp interval instead of is used to formulate state transition. Assume two consecutive measurements are the and measurements from sensor 1 and sensor 2, respectively. One has

As shown in (7), temporal bias exists between and , leading to bias in state transition. To eliminate this influence, the temporal bias is used in the measurement equation to align the measurements with target states.

In practical multisensor systems, the sampling periods of the sensors may be different and varying. To handle multisensor measurements in this general situation, two measurement processing schemes are presented: the batch processing scheme and the sequential processing scheme. In the former, the sensor with the longer sampling period is set as the reference sensor, and multiple measurements from all sensors between two consecutive reference time instants are collected in a measurement vector to update the augmented state vector. In the sequential processing scheme, each measurement from each sensor is processed to estimate the augmented state as soon as it is available. For both schemes, the measurement equations are formulated as functions of the spatiotemporal biases and target base states, which enables simultaneous spatiotemporal bias estimation and data fusion.

We consider the fusion period in this part, where and denote the time stamps of the and measurements of sensor 1, which is chosen as the reference sensor. Let be the number of measurements provided by sensor in the current fusion period. The measurement vector of sensor is given by

where denotes the measurement provided by sensor in the fusion period and its time stamp falls within the period . The measurement vector of all sensors in the current fusion period is given by

As discussed in Section 3.1, the time interval in state transition matrix equals the true measurement interval. Therefore, the augmented state estimate is updated at the measurement time of sensor 1 using the measurement vector . To properly formulate the measurement equation, the true interval between measurement time and measurement time , , is required for alignment of the measurements from sensors with the state to be updated. However, we only have the time stamp interval , where unknown temporal bias between and exists. Here, the solution is to use to replace in the measurement equation. Since is part of the augmented state vector, this replacement enables exact description of the relationship between the measurements in (9) and the augmented states in (1).

Here, we denote as the temporal bias of sensor 1 relative to itself, which is used to ensure that the measurement equation of sensor 1 can be formulated using the general expression, just like other sensors. The measurements in (9) are formulated as functions of the target states, spatiotemporal biases and the time stamp intervals

where denotes the measurement function of sensor , denotes the zero-mean Gaussian white measurement noise with known covariance , which is given by

where

and . The measurement function of sensor is

with

where denotes the position of sensor , and denote the range and azimuth biases of sensor , respectively, and denotes the true target position corresponding to the measurement at time . We use to represent the true measurement interval between the time and the measurement time , which enables each measurement of sensor to be correctly represented by the target states, spatiotemporal biases and the time stamp intervals according to (13). As a result, the spatiotemporal biases and target states can be estimated simultaneously from the measurements collected in (9).
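The idea of expressing a measurement through the state at the reference time, the time stamp interval and the temporal bias can be sketched as below. The sketch assumes additive range and azimuth biases, the augmented state layout `[x, vx, y, vy, spatial biases per sensor, temporal biases of non-reference sensors]`, a relative temporal bias defined as the sensor's delay minus the reference delay, and noise-free NCV motion between the measurement time and the state time. All of these, and the function name, are assumptions rather than the paper's exact formulation.

```python
import numpy as np

def biased_measurement(aug_state, sensor_idx, n_sensors, sensor_pos,
                       stamp_ref, stamp_meas):
    """Range/azimuth predicted for one measurement in the fusion period.

    sensor_idx is 0-based; index 0 is the reference sensor (temporal bias 0).
    """
    x, vx, y, vy = aug_state[:4]
    d_rho = aug_state[4 + 2 * sensor_idx]          # range bias (assumed additive)
    d_theta = aug_state[5 + 2 * sensor_idx]        # azimuth bias (assumed additive)
    if sensor_idx == 0:
        d_tau = 0.0
    else:
        d_tau = aug_state[4 + 2 * n_sensors + sensor_idx - 1]
    # True interval from the measurement time to the state time:
    # time stamp interval corrected by the relative temporal bias.
    dt = (stamp_ref - stamp_meas) + d_tau
    px, py = x - vx * dt, y - vy * dt              # target position at measurement time
    dx, dy = px - sensor_pos[0], py - sensor_pos[1]
    return np.array([np.hypot(dx, dy) + d_rho, np.arctan2(dy, dx) + d_theta])

# Two sensors; sensor 2 has a 20 m range bias, 0.01 rad azimuth bias, 0.3 s temporal bias.
aug = np.array([1000.0, 10.0, 2000.0, -5.0, 0.0, 0.0, 20.0, 0.01, 0.3])
z2 = biased_measurement(aug, 1, 2, (0.0, 0.0), stamp_ref=10.1, stamp_meas=9.9)
```

Because the temporal bias enters the predicted measurement through `dt`, it becomes observable from the measurements together with the spatial biases, which is the point made in the text above.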

In this part, the measurement equation for sequential processing scheme is presented. We denote and as the true measurement time and the time stamp, respectively, corresponding to the measurement from sensor . The sensor that first provides a measurement is chosen as the reference sensor. Without loss of generality, we assume sensor is the reference sensor and denote as the temporal bias of sensor relative to itself. To avoid ambiguity, we denote as the overall measurement index across all sensors. Each time a measurement is received at the fusion center, is incremented by 1. The example given in Figure 1 is used to illustrate the formulation of the measurement equation, followed by the general case with sensors.

Assume that the measurement provided by sensor is the measurement received at the fusion center. We use to initialize the augmented state at time , as will be presented in Section 3.4. As shown in Figure 1, the consecutive measurements may be from the same sensor or different sensors, and there are four possible combinations of their sources. The measurement equations for all the four cases are formulated as follows.

a) The previous measurement with the overall measurement index and the current measurement with the overall measurement index are from sensor 1 and sensor 2, respectively: In this case, the true measurement interval between and is unavailable since their time stamp delays may be different. As discussed in Section 3.1, we use the time stamp interval instead of to represent the time interval in the state transition matrix. After transition according to , the true time of the state is and is unequal to the measurement time . To eliminate this influence, the temporal bias is used to align the measurement with the state in the measurement equation, as given by

where , denotes the position of sensor , denotes the zero-mean Gaussian white measurement noise with known covariance , and denotes the true target position corresponding to the measurement at time . We utilize to represent the true interval between the time of the state and the measurement time , which enables each measurement of sensor to be correctly represented by the target states and spatiotemporal biases according to (15a). Accordingly, the spatiotemporal biases and target states can be estimated simultaneously using the measurement from sensor .

b) Both the previous measurement with the overall measurement index and the current measurement with the overall measurement index are from sensor 2: In this case, the measurement interval equals the time stamp interval since the time delay of sensor 2 is constant. One has

After state transition from the previous update time according to , the time of the state is , which is still unequal to the measurement time of . This influence can be eliminated in a similar way as in Section 3.2.2, and the measurement equation can be formulated in the same way as in (15) except that the overall measurement index is .

c) The previous measurement with the overall measurement index and the current measurement with the overall measurement index are from sensor 2 and sensor 1, respectively: In this case, the time interval is

After state transition from the previous update time according to , the time of the state is and equals the measurement time of . We have denoted the temporal bias of sensor relative to itself as , so the measurement equation of sensor 1 can be formulated using the general expression (15) except that the overall measurement index is and the sensor index is 1.

d) Both the previous measurement with the overall measurement index and the current measurement with the overall measurement index are from sensor 1: In this case, the time interval equals the true measurement interval . After state transition from the previous update time according to , the time of the state is and equals the measurement time of . The measurement equation can be formulated in the same way as in (15) except that the overall measurement index is and the sensor index is 1.

The above cases encompass all possible combinations of the sources for consecutive measurements, without other possibilities. When the subsequent measurements are received at the fusion center, the measurement equations can be formulated according to one of the cases.

Referring to the above formulation in the case with two sensors, the general expression for the measurement equation can be formulated for a system with sensors. We denote and as the time stamps when the previous and current measurements are provided by sensor and , respectively, where . When the current measurement with overall measurement index is received at the fusion center, the time interval is . Substituting into (6), we can formulate the corresponding transition matrix, and the augmented state equation is formulated according to (3)-(5). The expression for the measurement equation is the same as (15) except that the subscript needs to be replaced by subscript .
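The bookkeeping of the sequential scheme can be illustrated with a short sketch that merges the measurements of all sensors by time stamp and computes the time stamp interval used in each state transition. It assumes that measurements arrive at the fusion center in time stamp order; the function name and the example time stamps are hypothetical.

```python
def sequential_schedule(stamps_by_sensor):
    """Merge per-sensor time stamps into one processing order.

    Returns a list of (sensor_index, stamp, dt), where dt is the time stamp
    interval to the previously processed measurement (0.0 for the first one).
    ASSUMES measurements reach the fusion center in time stamp order.
    """
    events = sorted((stamp, sensor)
                    for sensor, stamps in enumerate(stamps_by_sensor)
                    for stamp in stamps)
    schedule, prev_stamp = [], None
    for stamp, sensor in events:
        dt = 0.0 if prev_stamp is None else stamp - prev_stamp
        schedule.append((sensor, stamp, dt))
        prev_stamp = stamp
    return schedule

# Sensor index 0 is the reference sensor; index 1 samples more often.
schedule = sequential_schedule([[0.0, 3.0], [1.0, 2.0, 4.0]])
for sensor, stamp, dt in schedule:
    # The state is propagated by dt (time stamp interval) regardless of the source.
    # In the measurement equation, the relative temporal bias of `sensor`
    # (zero for the reference sensor) then aligns the measurement with the state.
    pass
```

All four source combinations (1 to 2, 2 to 2, 2 to 1, 1 to 1) are handled by the same loop, since only the sensor index of the current measurement decides which temporal bias enters the measurement equation.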

Owing to the nonlinearity of the measurement equations in Section 3.2, the Unscented Kalman Filter (UKF) is employed to jointly estimate the spatiotemporal biases and target states. This leads to two approaches: batch processing-based (BP-SBDF) and sequential processing-based (SP-SBDF) spatiotemporal bias compensation and data fusion.

The UKF uses the unscented transformation [36] (UT) to approximate the mean and covariance of the augmented state and measurement. First, the sigma points and the associated weights are calculated given the augmented state estimate and state estimation covariance . The mean and covariance are then approximated by using a weighted sample mean and covariance of these sigma points. That is

where is the dimension of the augmented state, is the th sigma point, is the associated weight, is the scale parameter, and is the th row or column of the matrix square root.
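A minimal sketch of sigma point generation in the basic unscented transform is given below. It assumes the classical symmetric set with a single scale parameter (called `kappa` here) and uses the columns of a Cholesky factor as the matrix square root; the paper's exact parameterization may differ.

```python
import numpy as np

def sigma_points(x, P, kappa=0.0):
    """Symmetric sigma points and weights of the basic unscented transform."""
    n = x.size
    S = np.linalg.cholesky((n + kappa) * P)     # lower-triangular square root
    # Rows: the mean, then mean +/- each column of the square root.
    chi = np.vstack([x, x + S.T, x - S.T])      # shape (2n + 1, n)
    w = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    w[0] = kappa / (n + kappa)
    return chi, w

x0 = np.array([1.0, 2.0, 3.0])
P0 = np.array([[4.0, 1.0, 0.0],
               [1.0, 3.0, 0.5],
               [0.0, 0.5, 2.0]])
chi, w = sigma_points(x0, P0, kappa=1.0)
mean = w @ chi                                   # weighted sample mean
dev = chi - mean
cov = dev.T @ (w[:, None] * dev)                 # weighted sample covariance
```

By construction, the weighted sample mean and covariance of the sigma points reproduce the input mean and covariance, which is the property the filter relies on before the points are propagated.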

These sigma points can be updated using the augmented state equation given by (3)

The weighted mean of these predicted sigma points for the augmented state is given by

The prediction covariance of the augmented state is calculated by

where

and denotes the known process noise covariance. We denote as the prediction of sigma points for the measurements. Note that the measurement equations for BP-SBDF method and SP-SBDF method are different, and their expressions have been given by (10) and (15), respectively. Substituting into the corresponding measurement functions, we have

The weighted mean of these sigma points for the measurement is given by

The covariance of the predicted measurement is given by

where

and denotes the known measurement noise covariance and has two different expressions in BP-SBDF and SP-SBDF methods. The cross-covariance between the augmented states and measurements is given by

The filter gain can then be given by

Finally, the augmented state estimate and the corresponding covariance are updated by

and
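The prediction and update steps described in this subsection can be sketched as a single function. This is a hedged illustration rather than the paper's implementation: by default it reuses the propagated sigma points for the measurement prediction, as the description above suggests, while the optional `redraw` flag (an addition of this sketch) regenerates them from the predicted covariance, a common variant. Angle wrapping of the azimuth residual is ignored, and all names are assumptions.

```python
import numpy as np

def sigma_points(x, P, kappa):
    n = x.size
    S = np.linalg.cholesky((n + kappa) * P)
    w = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    w[0] = kappa / (n + kappa)
    return np.vstack([x, x + S.T, x - S.T]), w

def ukf_step(x, P, F, Qa, h, R, z, kappa=0.0, redraw=False):
    """One UKF cycle with linear dynamics F and nonlinear measurement h."""
    chi, w = sigma_points(x, P, kappa)
    chi_pred = chi @ F.T                          # propagate sigma points
    x_pred = w @ chi_pred
    dX = chi_pred - x_pred
    P_pred = dX.T @ (w[:, None] * dX) + Qa        # Qa: augmented process noise cov.
    if redraw:                                    # optional variant (see lead-in)
        chi_pred, w = sigma_points(x_pred, P_pred, kappa)
        dX = chi_pred - x_pred
    Z = np.array([h(c) for c in chi_pred])        # predicted measurement points
    z_pred = w @ Z
    dZ = Z - z_pred
    S = dZ.T @ (w[:, None] * dZ) + R              # innovation covariance
    Pxz = dX.T @ (w[:, None] * dZ)                # state-measurement cross-covariance
    K = Pxz @ np.linalg.inv(S)                    # filter gain
    return x_pred + K @ (z - z_pred), P_pred - K @ S @ K.T

# Sanity check on a linear toy problem (values are illustrative only).
F = np.array([[1.0, 1.0], [0.0, 1.0]])
Qa = np.diag([0.1, 0.2])
H = np.array([[1.0, 0.0]])
R = np.array([[0.5]])
x_upd, P_upd = ukf_step(np.array([0.0, 1.0]), np.eye(2), F, Qa,
                        lambda s: H @ s, R, np.array([1.2]),
                        kappa=1.0, redraw=True)
```

For the two proposed methods, `h` would be the batch or the sequential measurement function and `R` the corresponding measurement noise covariance.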

In this subsection, the one-point initialization method [37] is used to estimate the initial augmented state and its covariance for the two proposed methods. The basic idea is to estimate the initial target state using the first reported measurement and to derive the initial covariance from the measurement covariance. Without loss of generality, we assume sensor 1 first provides the measurement in polar coordinates. The unbiased conversion from polar coordinates to Cartesian coordinates [38, 39, 40] can be given by

where and are the unbiased converted measurements in x and y directions, respectively, and are the first range and azimuth measurements reported by sensor 1, respectively. is the bias compensation factor, and is the mean of the converted measurement error, which are respectively given by

The converted measurement covariance is

where

and . Based on one position measurement, one has no information on target velocity. If the maximum target velocity is , the uniform distribution of target velocity with appropriate bounds may reflect our ignorance. This uniform distribution is replaced by a Gaussian probability distribution function with mean zero and covariance for velocity. The initial estimate of the target state and its covariance are respectively given by

where is a zero matrix, and is an identity matrix of order 2.
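One-point initialization of the target state can be sketched as follows. The sketch uses one commonly cited multiplicative form of the unbiased polar-to-Cartesian conversion with a measurement-conditioned covariance; it is not guaranteed to match the paper's expressions term by term. The state ordering `[x, vx, y, vy]`, the function name and the caller-supplied velocity standard deviation `vel_std` are assumptions.

```python
import numpy as np

def one_point_init(r, theta, sigma_r, sigma_theta, sensor_pos, vel_std):
    """Initial state and covariance from a single range/azimuth measurement."""
    lam = np.exp(-0.5 * sigma_theta**2)          # bias compensation factor
    lam_p = lam**4                               # equals exp(-2 * sigma_theta**2)
    c, s = np.cos(theta), np.sin(theta)
    x = sensor_pos[0] + r * c / lam              # unbiased converted position
    y = sensor_pos[1] + r * s / lam
    a = (lam**-2 - 2.0) * r**2
    b = 0.5 * (r**2 + sigma_r**2)
    rxx = a * c**2 + b * (1.0 + lam_p * np.cos(2.0 * theta))
    ryy = a * s**2 + b * (1.0 - lam_p * np.cos(2.0 * theta))
    rxy = a * s * c + b * lam_p * np.sin(2.0 * theta)
    state = np.array([x, 0.0, y, 0.0])           # zero-mean velocity prior
    cov = np.zeros((4, 4))
    cov[0, 0], cov[2, 2] = rxx, ryy
    cov[0, 2] = cov[2, 0] = rxy
    cov[1, 1] = cov[3, 3] = vel_std**2           # velocity uncertainty (assumed)
    return state, cov

x_init, P_init = one_point_init(10000.0, 0.5, 10.0, 0.001, (0.0, 0.0), vel_std=50.0)
```

For small azimuth noise, the position block reduces to the familiar linearized conversion covariance, which provides a quick sanity check of the expressions.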

There is no prior information about the spatial and temporal biases, and we set their initial estimates to zero, that is, . Additionally, we assume the target states and the spatiotemporal biases are uncorrelated, and the independent bias assumption results in block diagonal covariance matrices of the spatiotemporal biases. As in (39), the maximum range and azimuth biases are assumed to be and , respectively, and the maximum temporal bias is . The initial estimate of the covariance for spatiotemporal biases is

where

As a result, the initial estimates of the augmented state and its covariance are

and

Since the measurement equations are nonlinear, the optimal solution to the spatiotemporal bias compensation problem cannot be analytically derived. A theoretical lower bound on performance is helpful for assessing the level of approximation introduced by the proposed methods. In time-invariant systems, the standard Cramer-Rao lower bound [41] (CRLB) is commonly used for performance evaluation. In time-varying systems, by contrast, the posterior CRLB (PCRLB) provides a theoretical bound on the dynamic state estimates [32]. In this section, the PCRLB for the spatiotemporal bias and state estimation is derived briefly as follows.

To avoid redundancy, we only present the derivation of the PCRLB for the SP-SBDF method. Assume the current measurement is reported by sensor , and the augmented state and measurement equations have been given in (3)-(6) and (15), respectively. The lower bound on the estimation error is determined by the Fisher information matrix and the covariance of is bounded by

where is the expectation operator. The general framework for deriving the PCRLB of an unbiased estimator for a nonlinear discrete-time system is described in [32], and the information matrix can be calculated by the recursion

where is the process noise covariance, and is the Jacobian matrix of the measurement equation evaluated at the true augmented state , i.e.,

where is the gradient operator with respect to the augmented state . We have

with

If the sensor index equals 1, we have . Otherwise, we have

where

The PCRLBs of the augmented state components are calculated as the corresponding diagonal elements of the inverse information matrix

where represents the element located at the row and column of a matrix. Recursion in (2) can be implemented based on Monte Carlo averaging over multiple realizations of the target trajectory. Given the initial information matrix, we can calculate the PCRLB through the recursion in (2). In practice, the recursion can be initialized with the inverse of the initial covariance matrix of the filtering method as , which has been presented in Section 3.4.
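The information matrix recursion can be sketched as below for linear dynamics with additive Gaussian noise, which is a standard form of the PCRLB recursion in that setting. Whether it coincides exactly with the expression used in the paper is an assumption, as are the function name and the toy values; the expectation over the state is approximated by averaging over a list of Jacobians evaluated on Monte Carlo trajectories.

```python
import numpy as np

def pcrlb_step(J, F, Qa, jacobians, R):
    """One step of the information matrix recursion (ASSUMED standard form).

    J         : information matrix at the previous step
    F, Qa     : augmented transition matrix and process noise covariance
    jacobians : measurement Jacobians evaluated at true states of several
                Monte Carlo trajectories (averaged to approximate E[.])
    R         : measurement noise covariance
    """
    J_pred = np.linalg.inv(Qa + F @ np.linalg.inv(J) @ F.T)
    R_inv = np.linalg.inv(R)
    info = np.mean([H.T @ R_inv @ H for H in jacobians], axis=0)
    return J_pred + info

# Toy example: initialize with the inverse of an initial covariance.
F = np.array([[1.0, 1.0], [0.0, 1.0]])
Qa = np.diag([0.1, 0.2])
H = np.array([[1.0, 0.0]])
R = np.array([[0.5]])
J1 = pcrlb_step(np.linalg.inv(np.eye(2)), F, Qa, [H], R)
bounds = np.diag(np.linalg.inv(J1))      # PCRLB of each state component
```

`Qa` may be singular, as happens when the biases carry no process noise, provided the sum inside the inverse remains positive definite.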

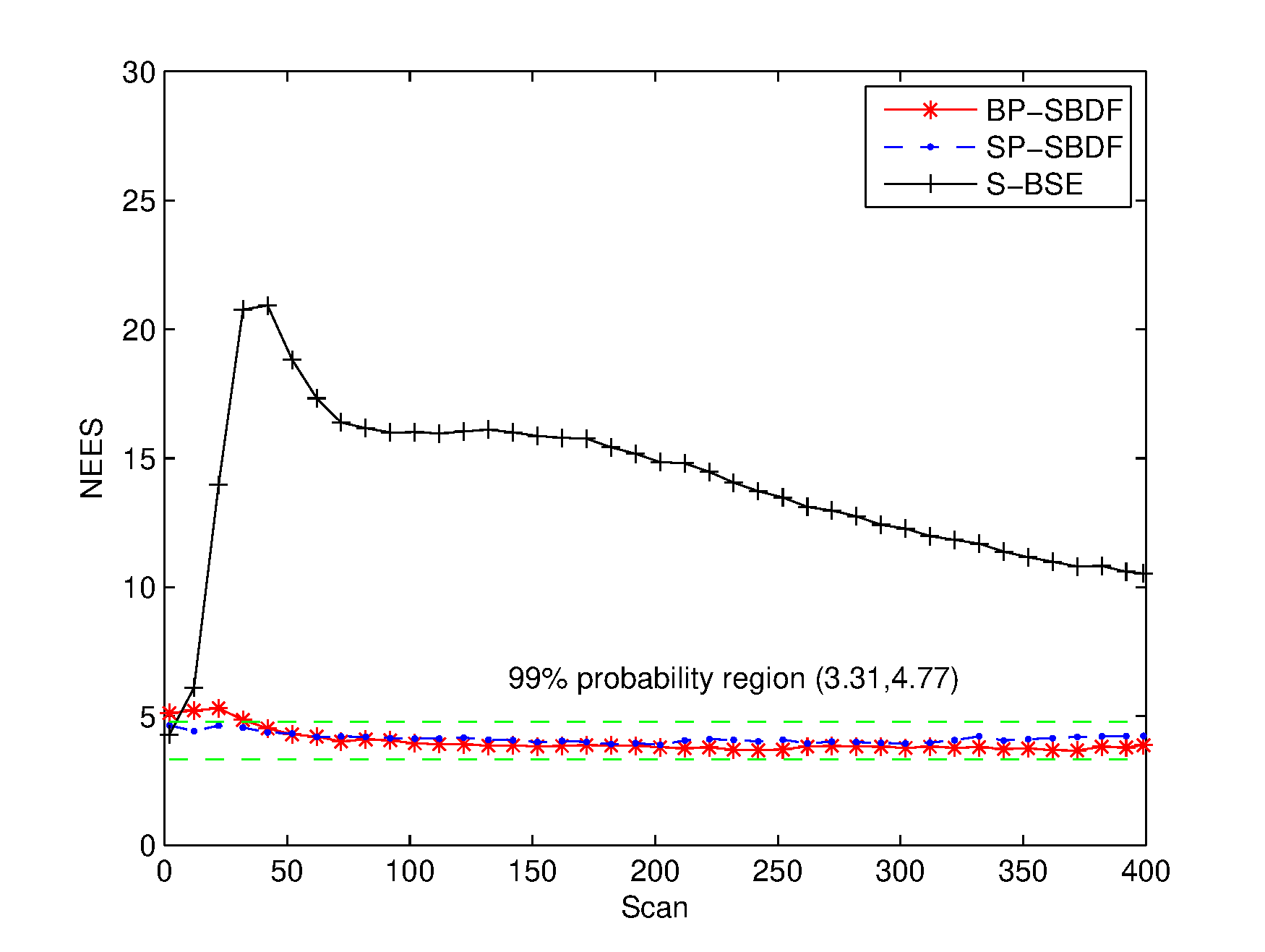

Simulations and performance comparisons are presented in this section to evaluate the effectiveness of the proposed methods. Two scenarios with relatively small and large temporal biases are investigated to evaluate the influence of temporal bias on estimation performance. The root mean square errors (RMSEs) of the spatiotemporal biases and target states and the normalized estimation error squared (NEES) are used to illustrate the performance of the proposed methods. Also, the PCRLB is given to quantify the best achievable accuracy. For comparison, the simulation results of the standard bias and state estimation (S-BSE) method [18] that fails to consider the temporal biases are also provided.
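The two performance metrics can be computed from Monte Carlo results as in the following sketch. The array layout (runs, time steps, state dimension) and the function names are assumptions for illustration.

```python
import numpy as np

def rmse(errors):
    """RMSE per time step; errors has shape (runs, steps, dim)."""
    return np.sqrt(np.mean(np.sum(errors**2, axis=2), axis=0))

def average_nees(errors, covariances):
    """Run-averaged NEES per time step.

    errors      : (runs, steps, dim) estimation errors
    covariances : (runs, steps, dim, dim) filter-reported covariances
    """
    P_inv = np.linalg.inv(covariances)                    # batched inverse
    nees = np.einsum("rki,rkij,rkj->rk", errors, P_inv, errors)
    return nees.mean(axis=0)

# Deterministic toy data: every error is [3, 4], every covariance is 4 * I.
errs = np.tile([3.0, 4.0], (10, 5, 1))
covs = np.tile(4.0 * np.eye(2), (10, 5, 1, 1))
rmse_per_step = rmse(errs)
nees_per_step = average_nees(errs, covs)
```

For a consistent filter, the run-averaged NEES should stay close to the dimension of the error vector being tested.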

Consider a single target tracking problem with two asynchronous sensors located at the two-dimensional Cartesian coordinates and , respectively. The detection probability of sensors is assumed to be unity, and the measurement noise covariance of sensor is given by

The two sensors work asynchronously and start reporting measurements at and , respectively, and sensor 1 is chosen as the reference sensor. To illustrate the capability of the proposed methods to handle measurements with varying sampling periods, the sampling periods of sensor 1 are cyclically selected from , and in turn, and the sampling periods of sensor 2 are cyclically selected from and in turn. In the experiment, sensor 1 reports 400 measurements and sensor 2 reports 1065 measurements over the same time duration. Note that the proposed methods impose no requirements on the sampling periods or the initial sampling times. Only time stamps with unknown delays are used. Without loss of generality, sensor 1 is assumed to be free of spatial bias, i.e., and , while sensor 2 contains range bias and azimuth bias .

Two scenarios with relatively small and large temporal biases are investigated. In the scenario with small temporal bias, denoted by Scenario I, time delays of sensor 1 and sensor 2 are and , respectively. In Scenario II, time delays of sensor 1 and sensor 2 are and , respectively. The temporal bias in Scenario I is , and in Scenario II. The trajectory of the target evolves with the NCV model and starts at position with an original heading of and an initial speed of . The process noises are assumed to be zero-mean Gaussian white with standard deviation . Simulations are performed with 1000 Monte Carlo experiments.

As discussed in Section 3, the BP-SBDF method updates its estimates at the same rate as the sampling rate of sensor 1, so 400 estimation results are produced. The SP-SBDF method, in contrast, outputs 1465 estimates, since the state is updated whenever a measurement is received, regardless of whether it comes from sensor 1 or sensor 2. To allow an objective performance comparison, only the estimation results at the measurement times of sensor 1 are considered.

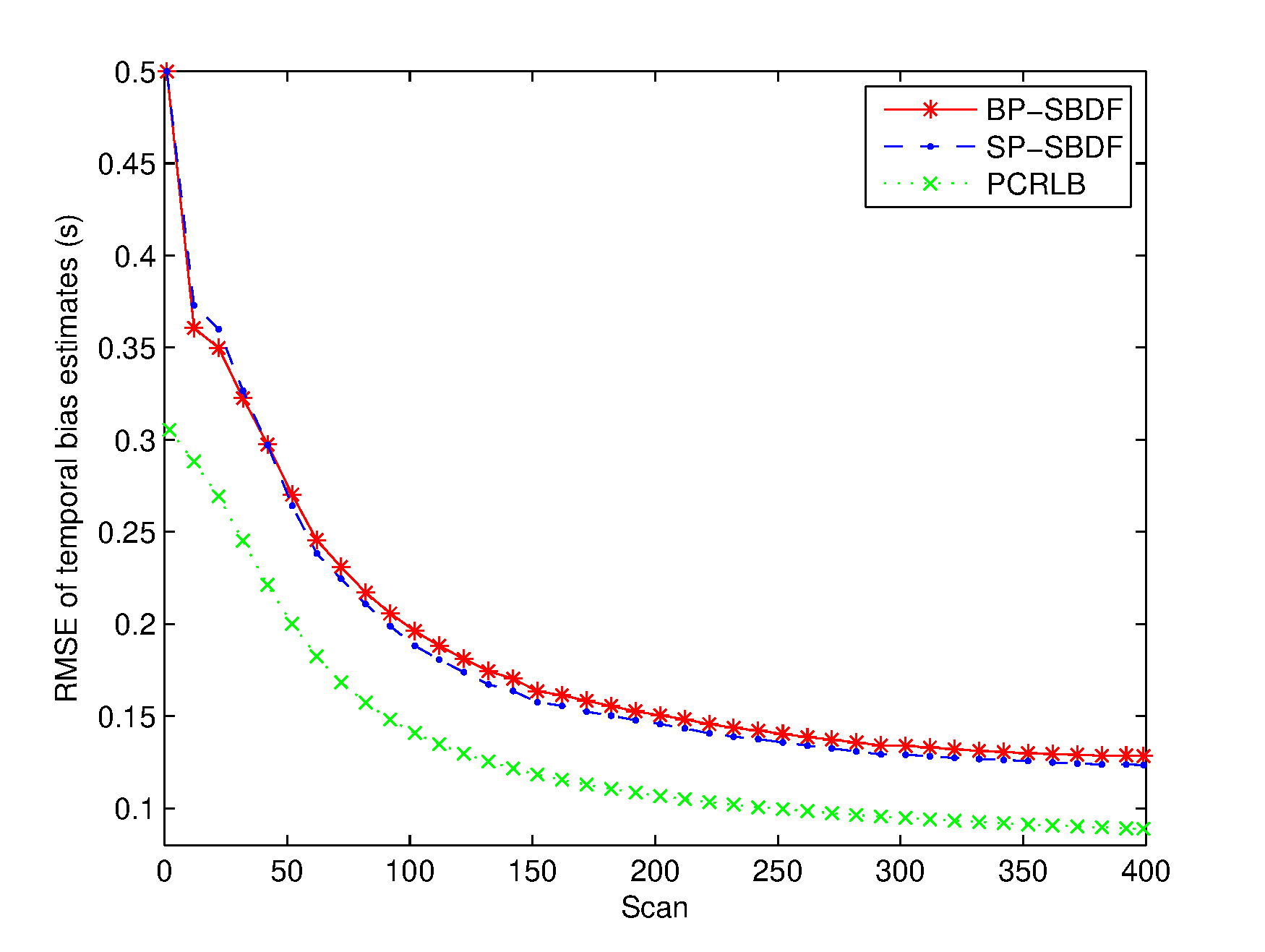

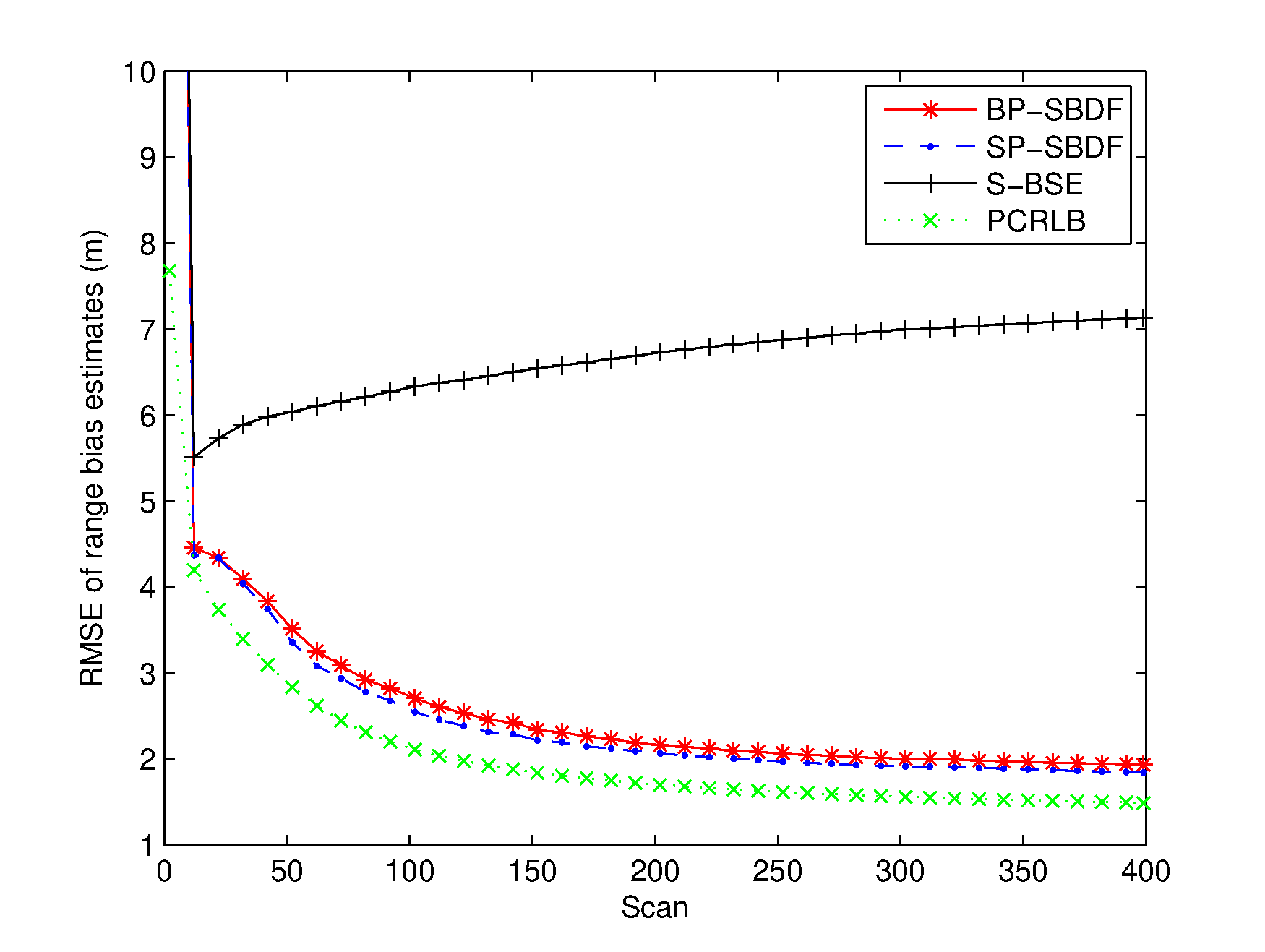

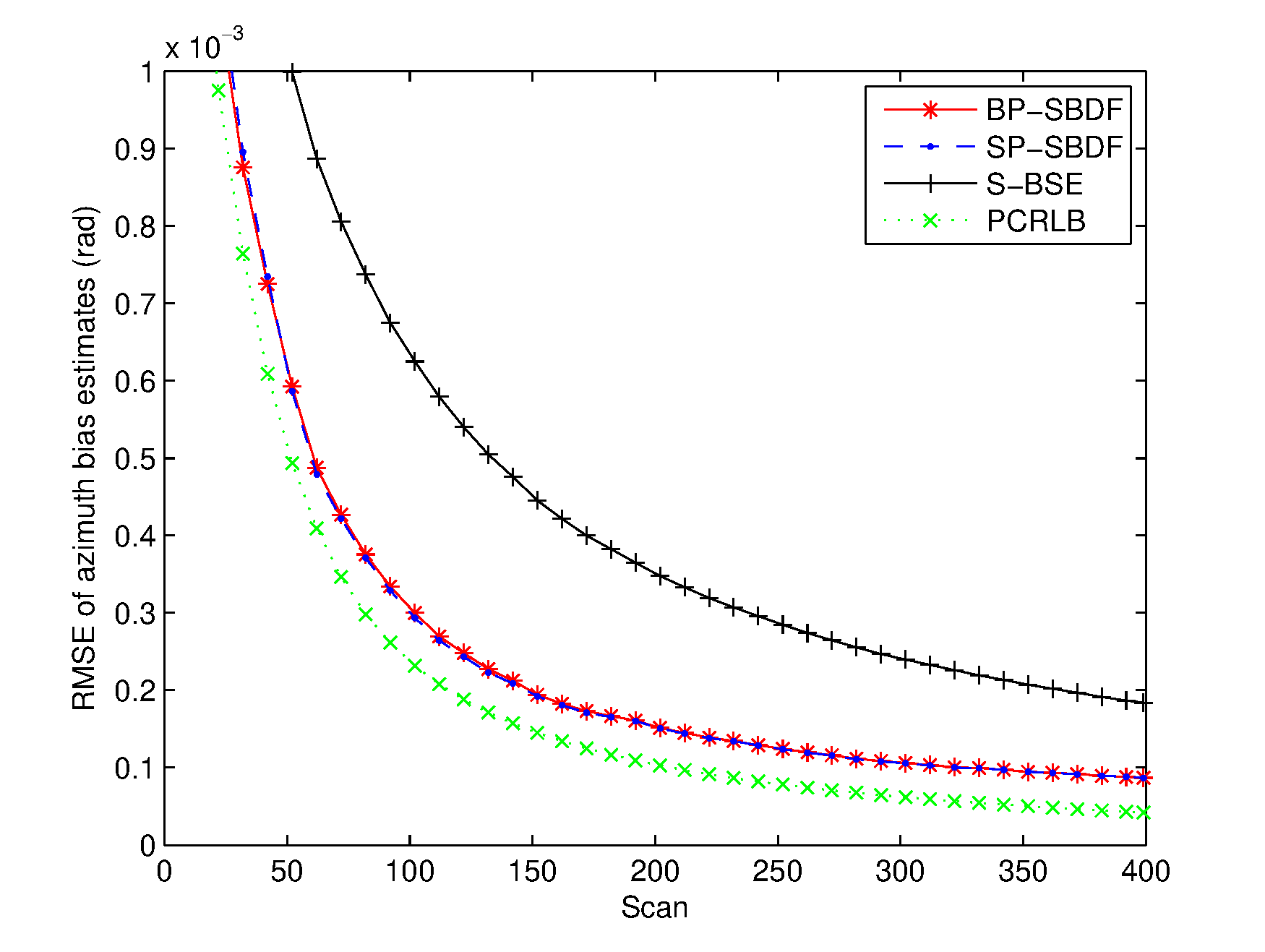

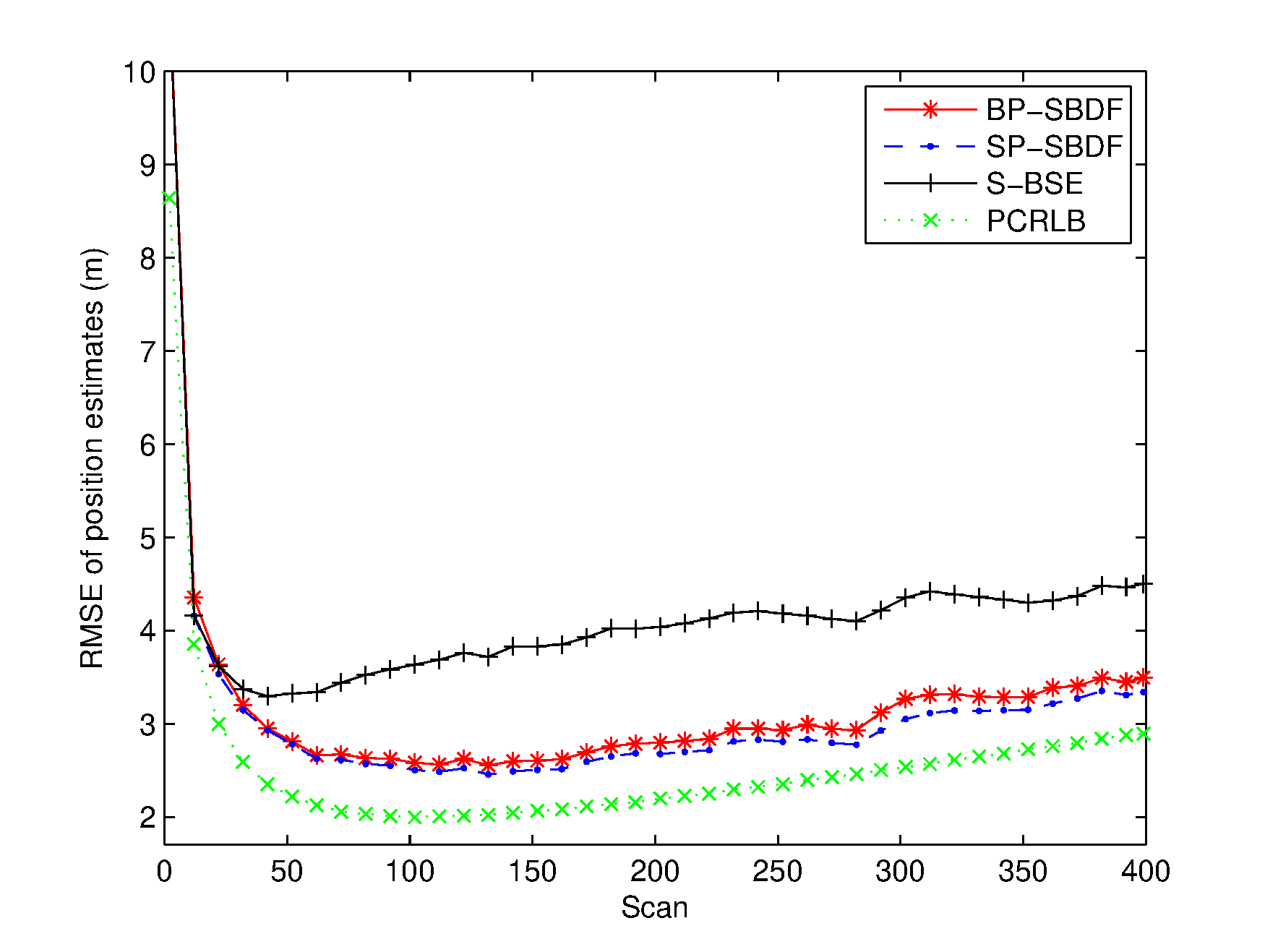

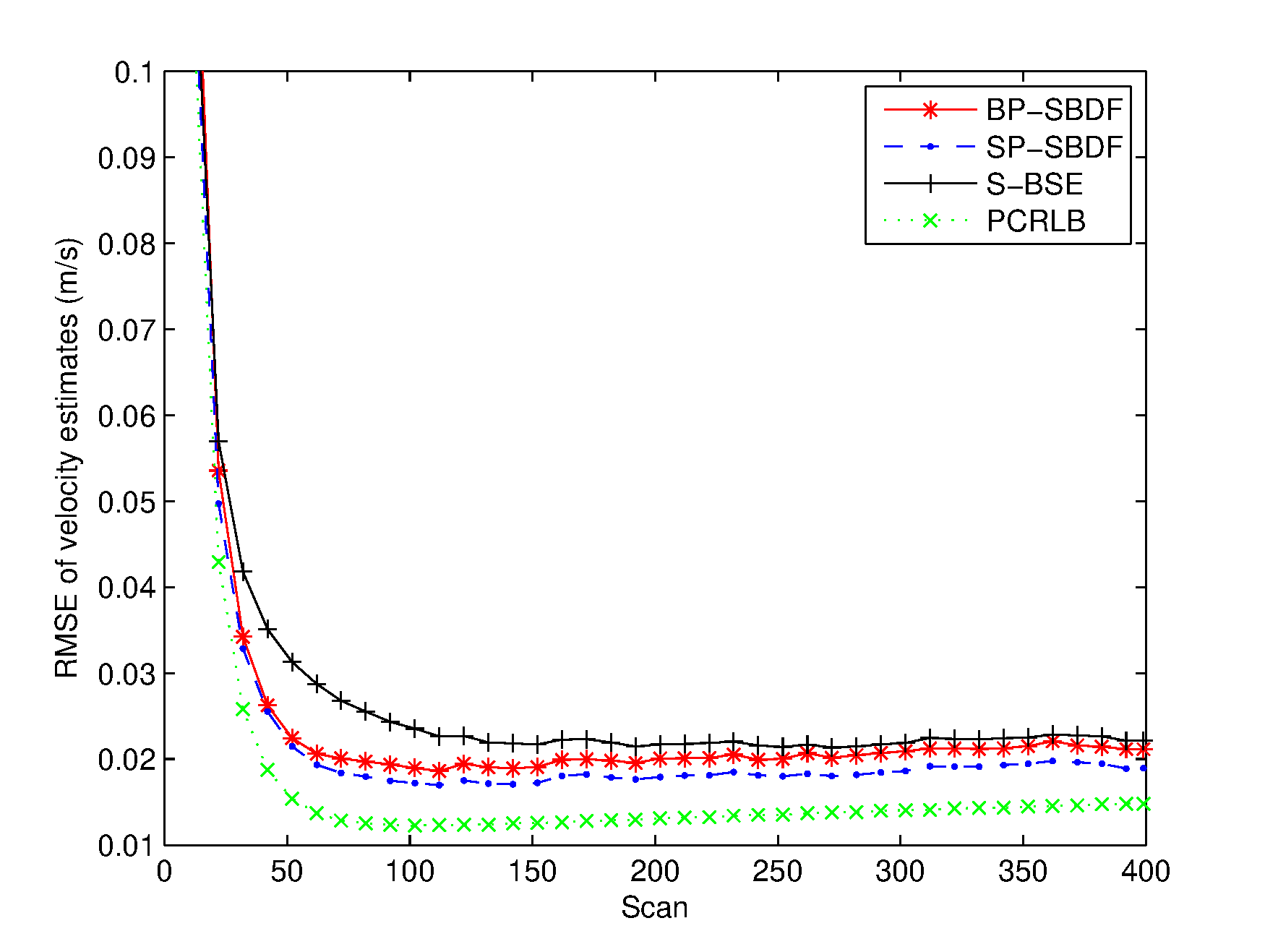

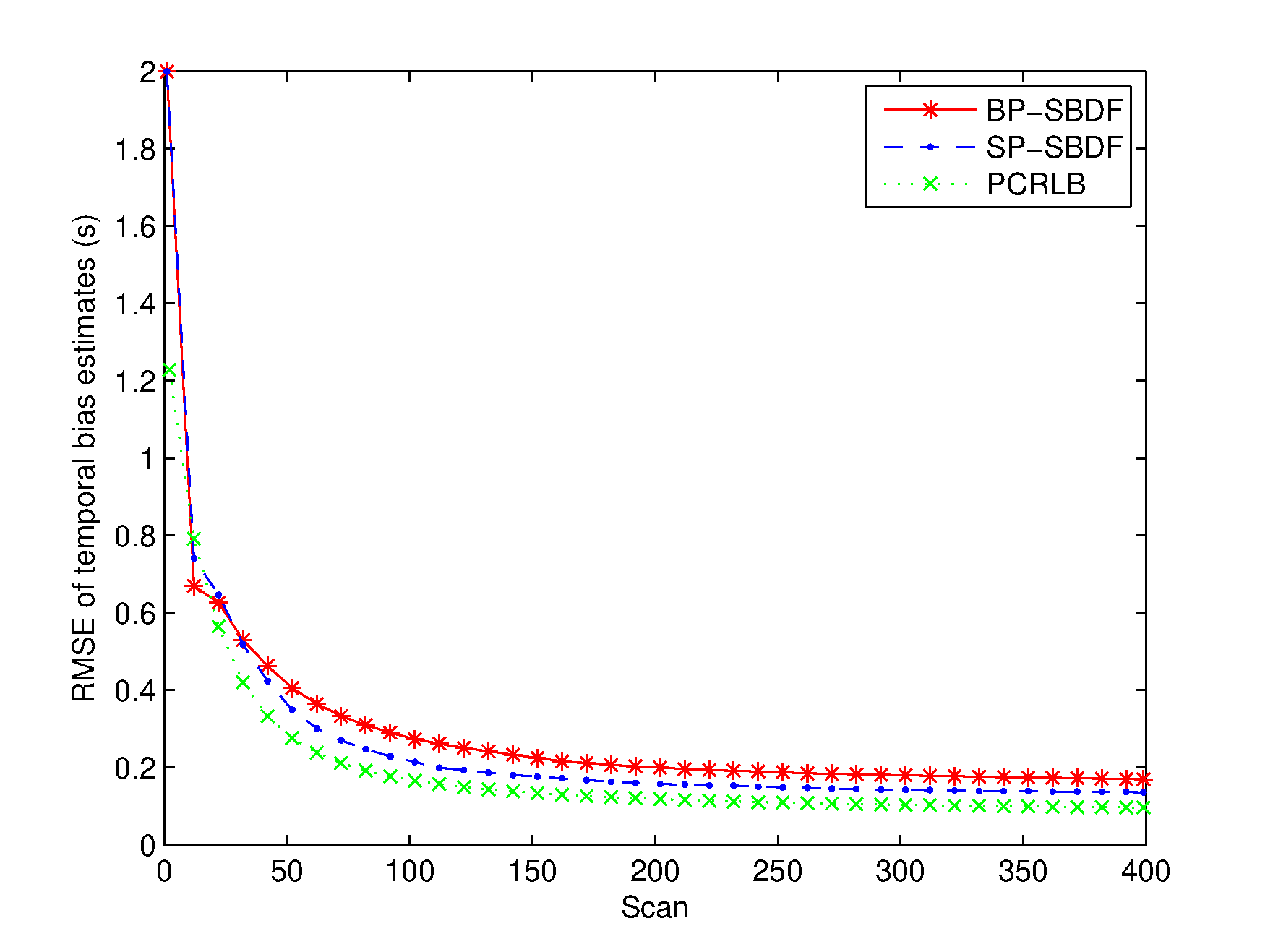

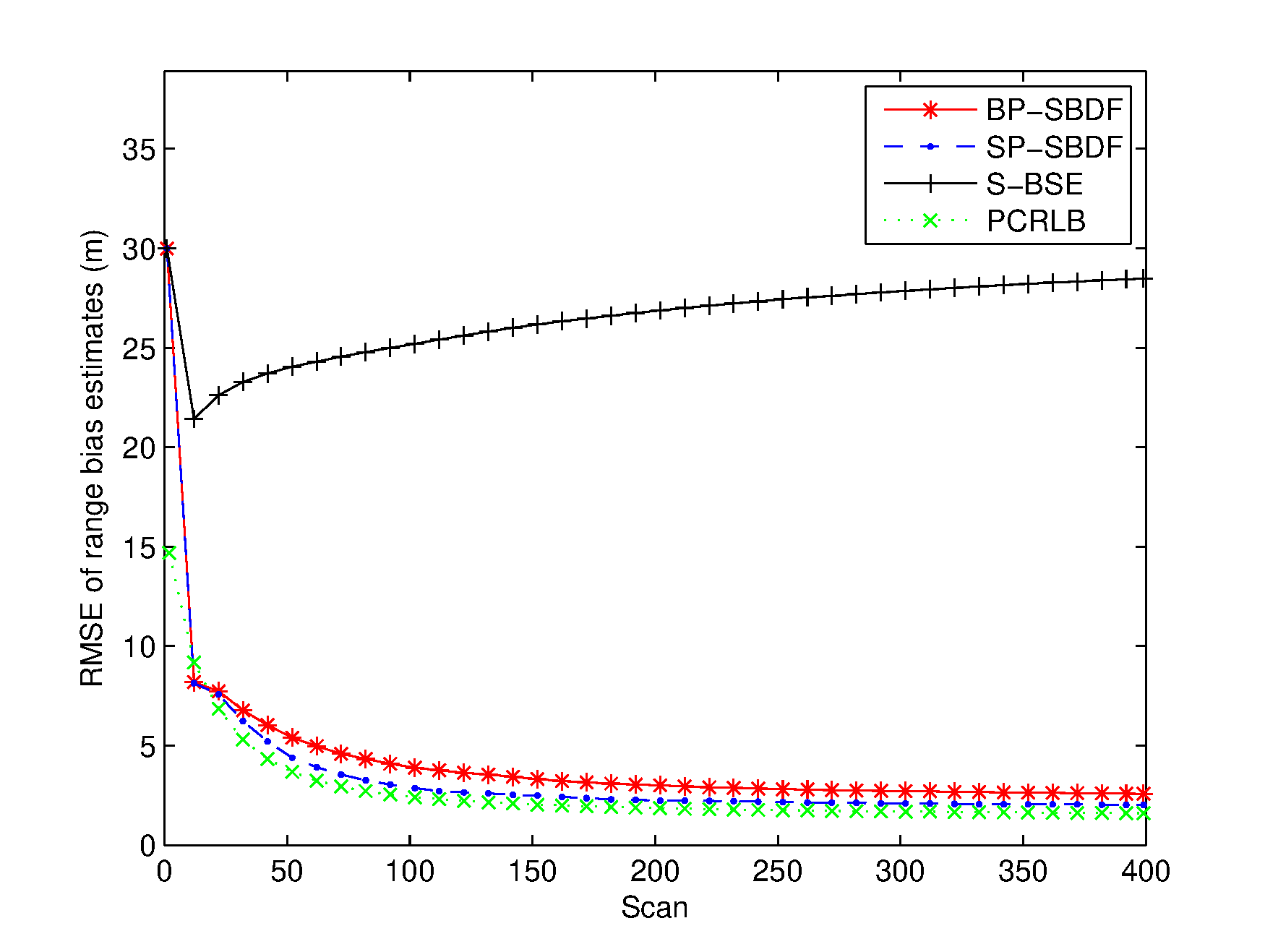

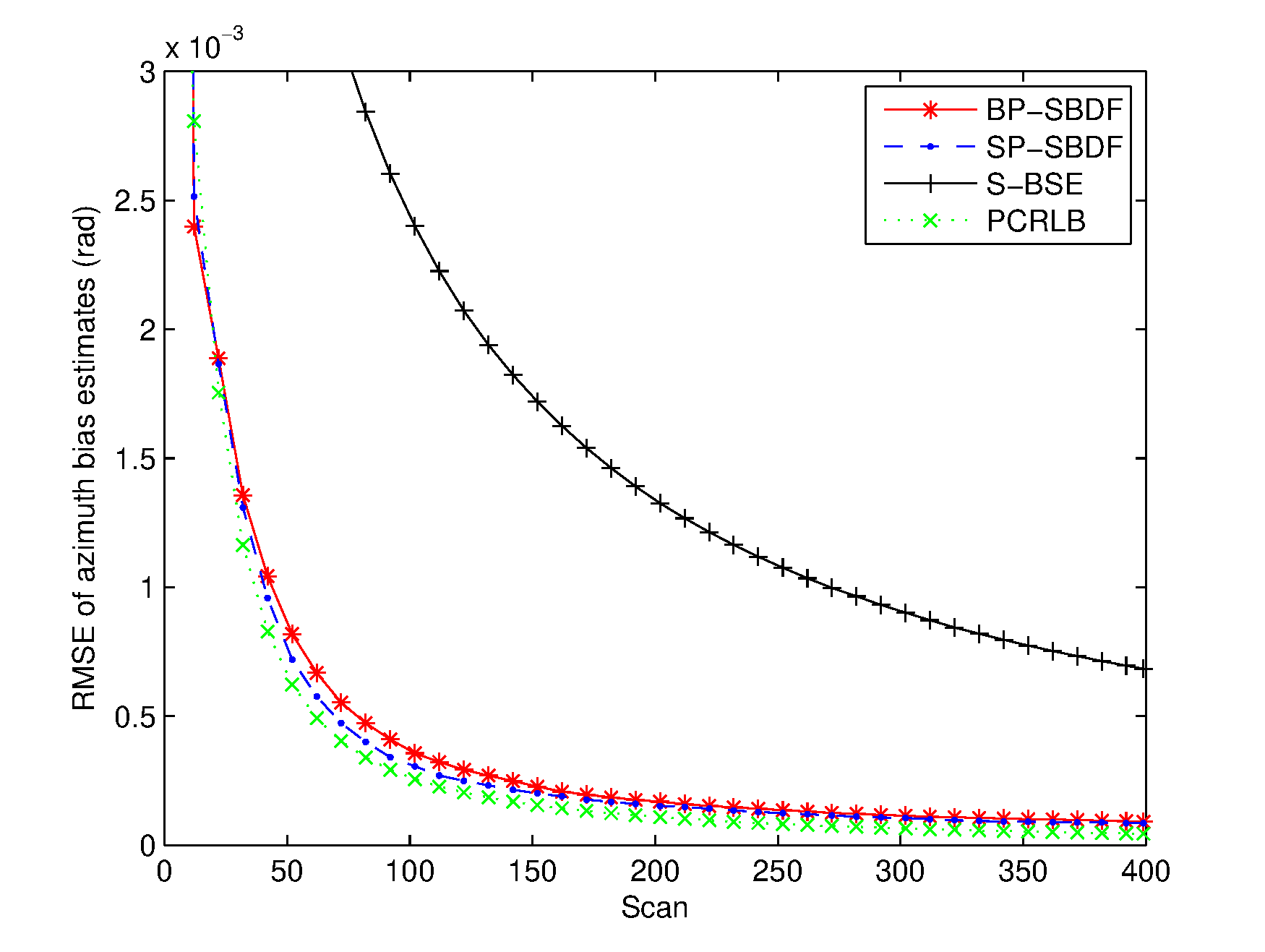

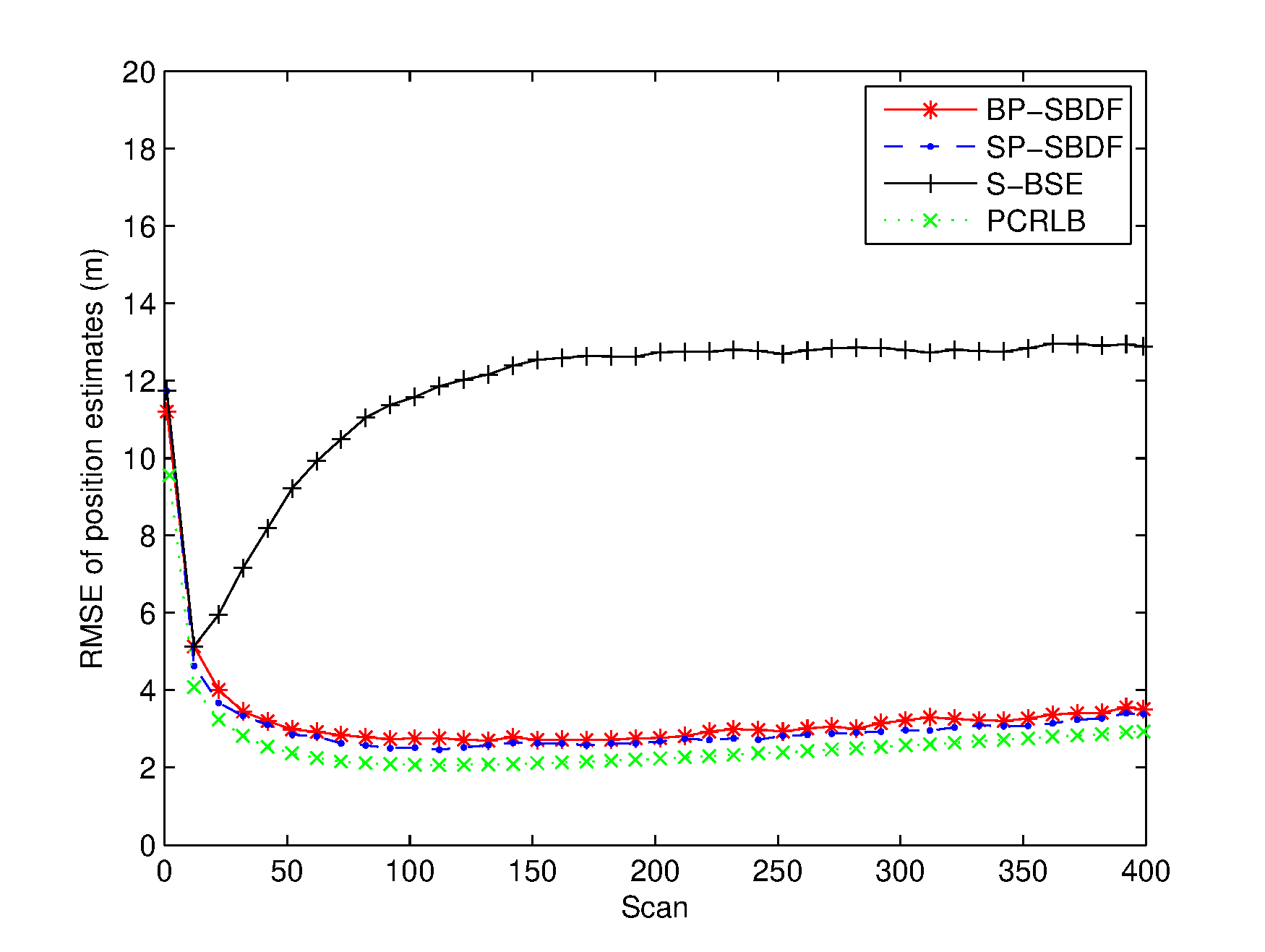

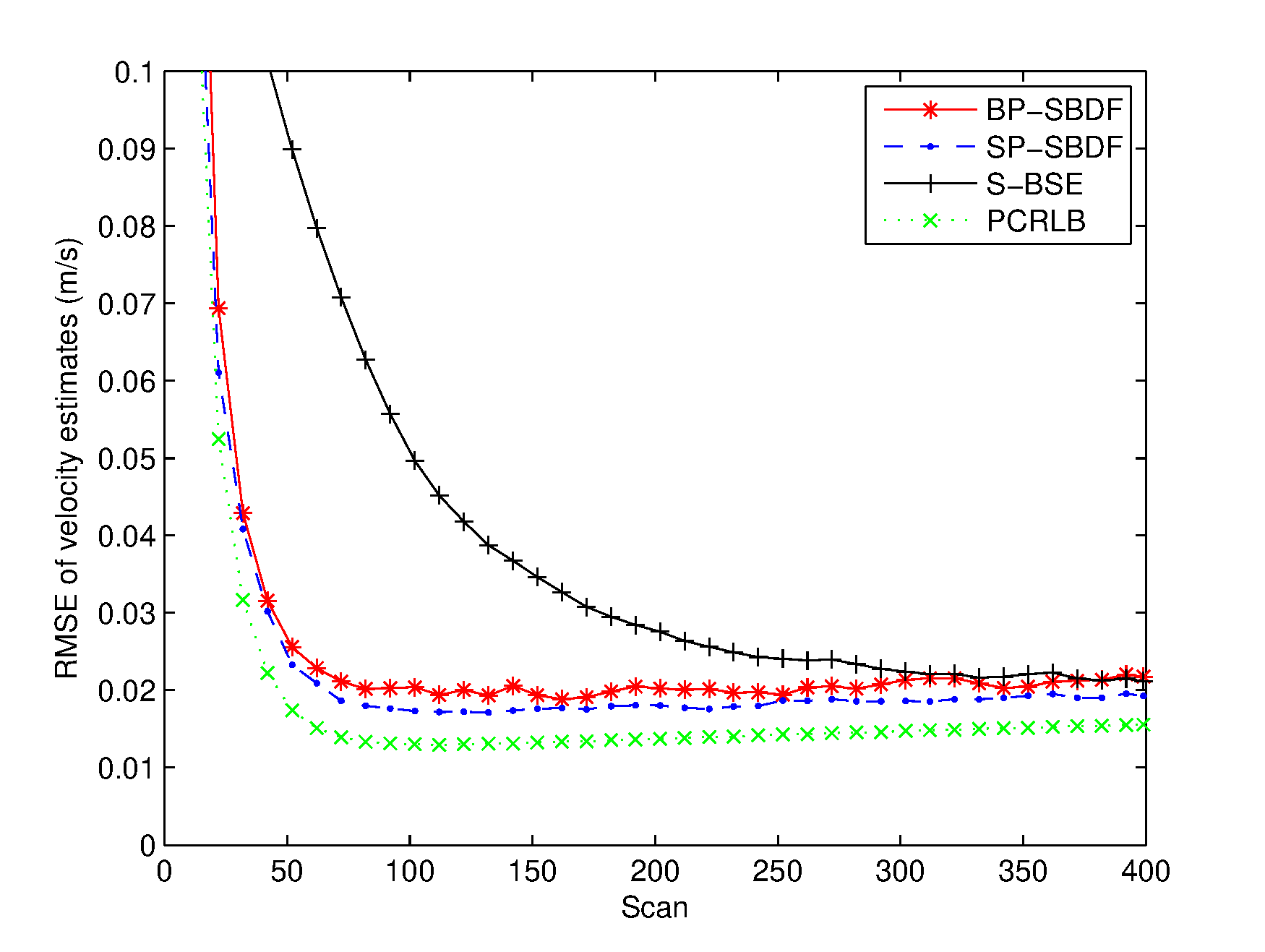

The RMSEs of the spatiotemporal bias and target state estimates are plotted in Figures 2–6. The PCRLB is provided to quantify the theoretically achievable performance in this scenario. Additionally, time-averaged RMSEs of the proposed methods are listed in Table 1 for comparison. To compare the complexity of the proposed methods fairly, two average running times are also listed in Table 1: the average running time required to handle all measurements of the two sensors, and the average running time required to perform a single filtering process.

| Method | Temporal bias RMSE (s) | Range bias RMSE (m) | Azimuth bias RMSE | Position RMSE (m) | Velocity RMSE | Overall running time (s) | Single running time |
| --- | --- | --- | --- | --- | --- | --- | --- |
| BP-SBDF | 0.1557 | 2.2431 | 1.7348 | 2.9410 | 0.0203 | 0.2177 | 5.4425 |
| SP-SBDF | 0.1502 | 2.1339 | 1.7163 | 2.8155 | 0.0183 | 0.4981 | 3.4000 |
| S-BSE | | 6.7461 | 3.7369 | 4.0488 | 0.0226 | | |
| PCRLB | 0.1108 | 1.7534 | 1.2166 | 2.3393 | 0.0134 | | |
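As a minimal sketch of how entries like those in Table 1 can be obtained, the following computes the RMSE over Monte Carlo runs at each time step and then averages it over time. The function names are illustrative, and taking the time-averaged RMSE as the mean of the per-step RMSEs is an assumption; the exact definition used for the table is not restated in this section. For vector quantities such as position, each error would be the Euclidean norm of the error vector.

```python
import math

def rmse_per_step(errors):
    """errors[m][k] is the estimation error of Monte Carlo run m at time step k.

    Returns the root mean square error over runs for every time step.
    """
    n_runs = len(errors)
    n_steps = len(errors[0])
    return [
        math.sqrt(sum(errors[m][k] ** 2 for m in range(n_runs)) / n_runs)
        for k in range(n_steps)
    ]

def time_averaged_rmse(errors):
    """Mean of the per-step RMSEs (assumed definition of the time average)."""
    rmse = rmse_per_step(errors)
    return sum(rmse) / len(rmse)

# Toy data: 2 runs, 2 time steps (hypothetical errors, not simulation output).
toy_errors = [[3.0, 0.0], [-3.0, 4.0]]
toy_rmse = rmse_per_step(toy_errors)
toy_avg = time_averaged_rmse(toy_errors)
```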

From Figures 3–6, it can be seen that the spatial bias and target state RMSEs of the S-BSE method are larger than those of the BP-SBDF and SP-SBDF methods. As shown in Table 1, the improvements in time-averaged RMSEs of the range bias, azimuth bias, position and velocity of the BP-SBDF method are about , , and , respectively, with respect to those of the S-BSE method. Accordingly, the improvements in time-averaged RMSEs of the SP-SBDF method are about , , and , respectively. The S-BSE method neither estimates nor compensates for the temporal bias between sensors, which leads to estimation errors higher than those of the proposed methods. In contrast, the proposed BP-SBDF and SP-SBDF methods properly compensate for the temporal bias while providing accurate spatiotemporal bias and target state estimates, and both reach the steady state rapidly, as shown in Figures 2–6 and Table 1. Note that deviations still exist between the RMSEs and the theoretical lower bounds. The main reason may lie in the high nonlinearity of the measurement equation.
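The relative improvements over the S-BSE method can be recomputed from the time-averaged RMSEs in Table 1. The sketch below assumes that "improvement" means the relative RMSE reduction with respect to S-BSE, expressed in percent; this definition and the dictionary layout are assumptions, while the numbers are copied from Table 1.

```python
# Time-averaged RMSEs copied from Table 1 (Scenario I).
TABLE_1 = {
    "BP-SBDF": {"range": 2.2431, "azimuth": 1.7348, "position": 2.9410, "velocity": 0.0203},
    "SP-SBDF": {"range": 2.1339, "azimuth": 1.7163, "position": 2.8155, "velocity": 0.0183},
    "S-BSE": {"range": 6.7461, "azimuth": 3.7369, "position": 4.0488, "velocity": 0.0226},
}

def improvement_percent(baseline, proposed):
    """Relative RMSE reduction of `proposed` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - proposed) / baseline

def improvements(table, method, baseline="S-BSE"):
    """Per-quantity improvement of `method` over `baseline`."""
    return {
        key: improvement_percent(table[baseline][key], table[method][key])
        for key in table[baseline]
    }

bp_gain = improvements(TABLE_1, "BP-SBDF")
sp_gain = improvements(TABLE_1, "SP-SBDF")
for name, gain in (("BP-SBDF", bp_gain), ("SP-SBDF", sp_gain)):
    print(name, {key: round(value, 2) for key, value in gain.items()})
```

The same helper applies unchanged to the Scenario II values once they are placed in a dictionary of the same shape.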

Additionally, the SP-SBDF method performs slightly better than the BP-SBDF method. The BP-SBDF method estimates the state once per fusion period, using all the measurements collected in that period and the prior state updated in the previous fusion period. The SP-SBDF method, in contrast, updates the state as soon as a measurement is received and uses the state updated by the previous measurement as prior information, which is more accurate than the state estimate of the previous fusion period. This results in the slight superiority of the SP-SBDF method over the BP-SBDF method in estimation accuracy.

As shown in Table 1, the SP-SBDF method requires more time than the BP-SBDF method to handle all measurements from the two sensors. This can be explained by the different measurement processing schemes of the two methods. The BP-SBDF method only runs the UKF at the measurement times of sensor 1 to generate the augmented state estimates, so only 400 calls of the UKF are required. In contrast, the SP-SBDF method calls the UKF 1465 times to generate the augmented state estimates when handling the same number of measurements, and therefore requires more running time overall. For a single filtering process, however, the running time required by the SP-SBDF method is less than that of the BP-SBDF method. This is because the measurement dimension in the BP-SBDF method is higher than that in the SP-SBDF method.
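The relationship between the two running-time columns of Table 1 can be checked with simple arithmetic: dividing the overall running time by the number of UKF calls gives the time per filtering step. The helper name below is illustrative. The results, about 5.4425e-4 s and 3.4000e-4 s, match the digits in the "Single" column, which suggests (as an inference, not a statement from the text) that this column is expressed in units of 10^-4 s.

```python
def per_call_time(overall_seconds, n_calls):
    """Average running time of one filtering step, in seconds."""
    return overall_seconds / n_calls

# Overall running times (s) from Table 1 and UKF call counts from the text.
bp_single = per_call_time(0.2177, 400)    # BP-SBDF: 400 UKF calls
sp_single = per_call_time(0.4981, 1465)   # SP-SBDF: 1465 UKF calls

print(f"BP-SBDF: {bp_single:.4e} s per call")
print(f"SP-SBDF: {sp_single:.4e} s per call")
```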

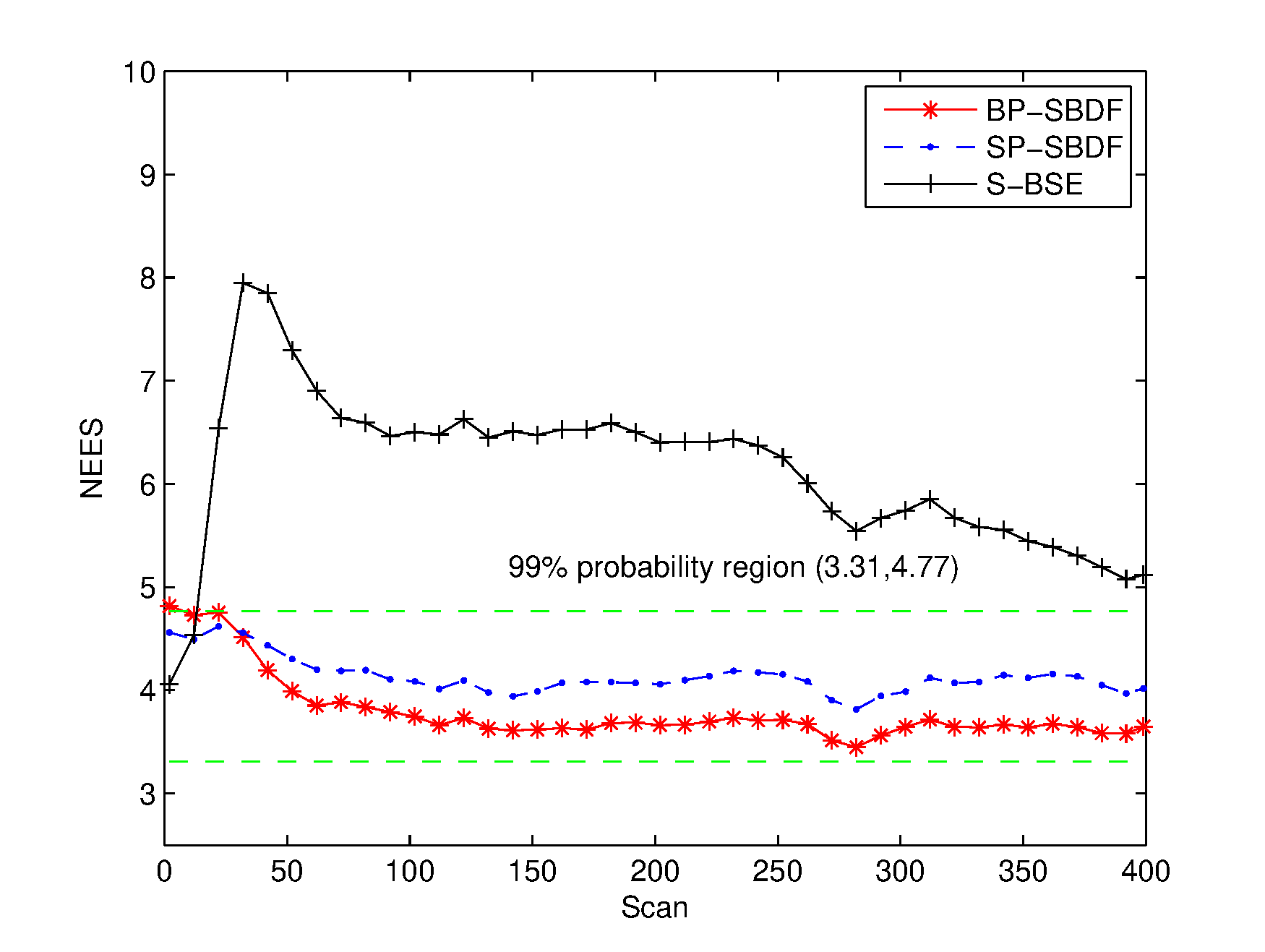

The consistency of the methods is examined based on the evaluation of the NEES, as shown in Figure 7.

Here, we use the two-sided probability region. The results in Figure 7 show the inconsistency of the S-BSE method, since its NEES values are mostly outside the 99% probability region. The proposed methods are consistent, since their NEES values fall within the probability region. Therefore, the proposed methods can fuse the multisensor measurements to provide accurate and consistent state estimation while compensating for the spatiotemporal biases.
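A two-sided probability region for the Monte Carlo averaged NEES can be sketched as follows. This is the standard chi-square consistency test rather than a transcription of the paper's procedure: it assumes that the NEES is averaged over independent runs, so that the number of runs times the average follows a chi-square distribution with (runs × state dimension) degrees of freedom. The chi-square quantile uses the Wilson-Hilferty approximation to stay within the standard library, and the state dimension of 7 in the example is a hypothetical value.

```python
from statistics import NormalDist

def chi2_quantile(p, dof):
    """Wilson-Hilferty approximation to the chi-square quantile at probability p."""
    z = NormalDist().inv_cdf(p)
    c = 2.0 / (9.0 * dof)
    return dof * (1.0 - c + z * c ** 0.5) ** 3

def average_nees_region(n_runs, state_dim, prob=0.99):
    """Two-sided region for the NEES averaged over `n_runs` Monte Carlo runs."""
    dof = n_runs * state_dim
    tail = (1.0 - prob) / 2.0
    lower = chi2_quantile(tail, dof) / n_runs
    upper = chi2_quantile(1.0 - tail, dof) / n_runs
    return lower, upper

def is_consistent(avg_nees, n_runs, state_dim, prob=0.99):
    """True if the averaged NEES lies inside the two-sided probability region."""
    lower, upper = average_nees_region(n_runs, state_dim, prob)
    return lower <= avg_nees <= upper

# 1000 runs as in the simulations; state_dim=7 is an assumed dimension.
lower, upper = average_nees_region(n_runs=1000, state_dim=7)
```

A filter whose averaged NEES stays inside this region at most time steps is regarded as consistent, while values persistently above the upper bound indicate that the filter underestimates its own error covariance.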

This scenario evaluates how the estimation performance is affected when the temporal bias increases. Additionally, because the time delays of the two sensors differ considerably, the measurements may be reported in the wrong order with respect to their time stamps. This scenario is therefore also used to evaluate whether the proposed methods still perform well when the measurements arrive out of order. The RMSEs of the spatiotemporal bias and state estimates and the NEES of the tracking filters are shown in Figures 8–13. Time-averaged RMSEs and the average running times of the proposed methods are listed in Table 2 for comparison.

| Method | Temporal bias RMSE (s) | Range bias RMSE (m) | Azimuth bias RMSE | Position RMSE (m) | Velocity RMSE | Overall running time (s) | Single running time |
| --- | --- | --- | --- | --- | --- | --- | --- |
| BP-SBDF | 0.2223 | 3.1206 | 2.0088 | 2.9882 | 0.0204 | 0.2245 | 5.6125 |
| SP-SBDF | 0.1680 | 2.3662 | 1.7675 | 2.8165 | 0.0183 | 0.5054 | 3.4498 |
| S-BSE | | 26.9249 | 14.2627 | 12.4416 | 0.0320 | | |
| PCRLB | 0.1258 | 1.9367 | 1.2223 | 2.3867 | 0.0141 | | |

From Figures 9–13, it can be seen that the improper processing of the temporal bias degrades the performance of the S-BSE method, resulting in estimation errors much higher than the PCRLB and NEES values outside the probability region. Additionally, as can be seen in Figures 9–10, the range bias estimation errors of the S-BSE method grow with time, while the azimuth bias estimation errors shrink. This is because the target in this scenario is moving away from the sensors. The impact of the temporal bias on the range bias estimation grows as the target range increases, while the impact on the azimuth bias estimation diminishes.
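One simple way to see this trend is to look at how an uncompensated time offset shifts the range and azimuth of a constant-velocity target as it recedes from a sensor at the origin. The sketch below is only a geometric illustration under assumed values (start position, velocity, and a 0.5 s offset are all hypothetical), and it shows the measurement-level discrepancy rather than the bias estimation error itself.

```python
import math

def offset_errors(p0, v, t, dt):
    """Range and azimuth differences between target positions at t and t - dt.

    p0 and v are (x, y) tuples; the sensor is assumed to sit at the origin.
    """
    x1, y1 = p0[0] + v[0] * t, p0[1] + v[1] * t
    x0, y0 = p0[0] + v[0] * (t - dt), p0[1] + v[1] * (t - dt)
    range_err = abs(math.hypot(x1, y1) - math.hypot(x0, y0))
    azimuth_err = abs(math.atan2(y1, x1) - math.atan2(y0, x0))
    return range_err, azimuth_err

# Hypothetical geometry: target starts 5 km north and flies east at 100 m/s.
p0, v, dt = (0.0, 5000.0), (100.0, 0.0), 0.5
times = [0.0, 50.0, 100.0, 200.0]
errors = [offset_errors(p0, v, t, dt) for t in times]
for t, (r_err, a_err) in zip(times, errors):
    print(f"t={t:6.1f} s  range diff={r_err:7.3f} m  azimuth diff={a_err:.5f} rad")
```

In this geometry the motion becomes increasingly radial as the target recedes, so the range discrepancy caused by the time offset grows, while the azimuth discrepancy scales roughly with the cross-range displacement divided by the range and therefore shrinks.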

In contrast, the proposed methods provide accurate and consistent spatiotemporal bias and target state estimates simultaneously. As shown in Table 2, the improvements from the BP-SBDF and SP-SBDF methods over the S-BSE method are about in range bias RMSE, in azimuth bias RMSE, in position RMSE, and in velocity RMSE, respectively. Since the temporal bias is properly compensated by the proposed methods, the spatiotemporal bias and target state estimation errors remain small, which also shows that the proposed methods perform well even when the measurements are reported in the wrong order. These results confirm that the temporal bias between sensors must be taken into account to perform correct data fusion. Additionally, the SP-SBDF method still performs slightly better than the BP-SBDF method but requires slightly more computational load when handling all measurements from the two sensors, which are and , respectively. Also, the running time required by the SP-SBDF method in a single filtering process is less than that of the BP-SBDF method, which are and , respectively. These simulation results agree with those in Section 5.2, which means the proposed methods perform well irrespective of whether the temporal bias is small or large.

In this paper, two spatiotemporal bias compensation methods were proposed to compensate for the spatiotemporal biases and fuse the multisensor measurements to produce accurate target state estimates. The general case where sensors have different and varying sampling periods was considered. The augmented state vectors consist of the target states and the spatiotemporal biases of the multiple sensors. The measurement equations for the batch processing and sequential processing schemes were formulated as functions of both the target states and the spatiotemporal biases according to their relationship, which enables simultaneous spatiotemporal bias estimation and data fusion. The UKF was employed to handle the nonlinearity of the measurements and to estimate the spatiotemporal biases and target states simultaneously. Simulation results demonstrated that the proposed methods provide accurate spatiotemporal bias and target state estimates simultaneously. Due to the high nonlinearity of the measurement equations, the performance of the proposed methods has not reached the PCRLB. Further improving the performance of the spatiotemporal bias estimation is a topic for future work.

Copyright © 2024 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
