Chinese Journal of Information Fusion

ISSN: 2998-3371 (Online) | ISSN: 2998-3363 (Print)

Email: [email protected]

| Symbol | Meaning |
|---|---|
| | Frame of discernment (FOD) |
| | Power set of a FOD |
| | Mass function |
| | Belief function |
| | Plausibility function |
| | Plausibility transformation of a mass function |
| | Dezert's entropy of a mass function |
| | Dezert and Dambreville's cross entropy |
| | Dezert and Dambreville's relative entropy |
| | Deng's entropy of a mass function |
| | Gao et al.'s cross entropy |
| | Pignistic entropy of a mass function |
| | Cross pignistic entropy |
| | Yager entropy of a mass function |
| | Cross Yager entropy |
| | Plausibility entropy of a mass function |
| | Cross plausibility entropy |
| | Relative plausibility entropy |
| | Shannon's entropy of a probability distribution |
| | Shannon's cross entropy |
| | Shannon's relative entropy |

How to represent and measure uncertainty is one of the central issues in information science. In general, uncertainty can be broadly classified into random uncertainty and epistemic uncertainty [33]. Thanks to Shannon's pioneering contributions [1], the problem of quantifying the randomness of uncertain information has been solved within the mathematical framework of information theory. Dempster-Shafer theory [2, 3], also known as belief function theory, is a widely used framework for representing information with epistemic uncertainty; it provides a mathematical structure, the mass function, that simultaneously describes the discord and non-specificity involved in the given information [4]. However, measuring the uncertainty of mass functions remains an open problem. In particular, there is still no consensus on the definitions of entropy, cross entropy, and relative entropy of mass functions.

Regarding the entropy of mass functions, many researchers have studied the problem [5, 6, 7]. Klir [8] proposed generalized information theory (GIT), which aims to generalize Shannon's information theory for probabilities to various uncertainty theories, including imprecise probabilities, fuzzy sets, and belief functions. However, the aggregated uncertainty (AU) proposed in GIT for mass functions has some shortcomings [9]; in particular, some of the underlying axiomatic requirements of AU have been challenged [10]. In recent years, with the proposal of Deng's entropy [11], a new entropy measure for calculating the uncertainty of a mass function, research on uncertainty measures in Dempster-Shafer theory has seen a "strong resurgence" [12]. Many novel entropy definitions of mass functions have been put forward [13, 14, 15]. For example, Jirousek and Shenoy [10] designed an entropy measure for mass functions by combining the plausibility transformation and weighted Hartley entropy. Zhou and Deng [16] proposed a fractal-based belief entropy on the basis of Deng's entropy. In terms of the belief intervals of single elements, Moral-Garcia and Abellan [17] developed an uncertainty measure of mass functions that is analogous to AU. In addition, Cui and Deng [18] presented a total uncertainty measure of mass functions based on the plausibility function, called plausibility entropy. Surveying the existing uncertainty measures, Dezert and Tchamova [19] raised the problem of the effectiveness of uncertainty measures and provided four desiderata for checking whether an uncertainty measure is effective; a new effective measure of uncertainty for mass functions was then proposed in [20].

Cross entropy and relative entropy are two other important concepts in Shannon's information theory. Although research on the entropy of mass functions is flourishing, studies of cross entropy and relative entropy of mass functions are relatively rare. This is easy to understand: cross and relative entropies are strongly connected with the concept of entropy, and their forms are usually based on the definition of entropy, yet many existing entropy measures of mass functions do not readily yield corresponding cross and relative entropies. Very recently, Dezert and Dambreville [21] provided definitions of cross entropy and relative entropy of mass functions in terms of Dezert's effective measure of uncertainty presented in [20]. In addition, Gao et al. [22] proposed a definition of cross entropy of mass functions based on Deng's entropy [11]. However, as analyzed in this paper, the existing definitions of cross entropy of mass functions have some defects in their underlying properties. A new cross entropy of mass functions, together with a corresponding relative entropy, is therefore required, which is the purpose of this study.

Specifically, inspired by the plausibility entropy presented in [18], a total uncertainty measure of mass functions, a new cross entropy and a new relative entropy of mass functions are given in this paper, named cross plausibility entropy and relative plausibility entropy, respectively. The properties of the cross and relative plausibility entropies are given, which show a strong connection with the classical cross entropy and relative entropy in Shannon's information theory. In addition, an illustrative example in parameter estimation is given to show the potential application of the presented entropies.

The remainder of the paper is organized as follows. Section 2 briefly introduces the basics of Dempster-Shafer theory. Related work on existing definitions of cross entropy of mass functions is reviewed in Section 3. New cross and relative entropies of mass functions are then given in Section 4. An application example is provided in Section 5. Finally, Section 6 concludes the study.

Dempster-Shafer theory [2, 3] provides a well-defined framework for representing and handling information with epistemic uncertainty. In this theory, the set of possible answers to a given question of interest is called a frame of discernment (FOD), denoted as $\Theta$, in which the set is collectively exhaustive and all elements are mutually exclusive. The power set of the FOD is represented by $2^{\Theta}$.

To represent uncertain information involving epistemic uncertainty, mass functions, also known as basic probability assignments (BPAs), are defined in Dempster-Shafer theory. A mass function is a mapping from the power set of a FOD to the interval $[0, 1]$, denoted as $m: 2^{\Theta} \rightarrow [0, 1]$, satisfying the following conditions

$$m(\emptyset) = 0, \qquad \sum_{A \subseteq \Theta} m(A) = 1$$

where $A$ is called a focal element of $m$ if $m(A) > 0$, and $m(A)$ measures the belief assigned exactly to $A$. In general, a probability distribution can be treated as a Bayesian mass function in Dempster-Shafer theory, where $m(A) = 0$ for every $A \subseteq \Theta$ with $|A| > 1$.

The belief function and the plausibility function are two equivalent forms of a mass function, which respectively express the lower bound and the upper bound of the degree of support for a set $A \subseteq \Theta$ based on a given mass function $m$. Given a mass function $m$, $Bel$ and $Pl$ are defined as follows

$$Bel(A) = \sum_{B \subseteq A} m(B), \qquad Pl(A) = \sum_{B \cap A \neq \emptyset} m(B) = 1 - Bel(\bar{A})$$

where $\bar{A} = \Theta \setminus A$. For each $A \subseteq \Theta$, there is $Bel(A) \leq Pl(A)$, and $[Bel(A), Pl(A)]$ is called the belief interval of $A$. In general, the wider the belief interval of a set $A$, the larger the uncertainty it contributes to the whole mass function. Therefore, in Dempster-Shafer theory, given a FOD $\Theta$, the vacuous mass function $m_v$, in which $m_v(\Theta) = 1$, has the largest uncertainty.
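The belief interval described above can be illustrated with a minimal Python sketch. The dictionary representation of a mass function (focal elements as `frozenset` keys) and the names `bel`, `pl`, `frame`, `m`, and `vacuous` are assumptions made for this illustration only.

```python
def bel(m, a):
    """Belief of set `a`: total mass of non-empty focal elements contained in `a`."""
    return sum(mass for focal, mass in m.items() if focal and focal <= a)


def pl(m, a):
    """Plausibility of set `a`: total mass of focal elements that intersect `a`."""
    return sum(mass for focal, mass in m.items() if focal & a)


# Hypothetical two-element frame of discernment.
frame = frozenset({"a", "b"})

# A mass function with one singleton focal element and mass on the whole frame.
m = {frozenset({"a"}): 0.5, frame: 0.5}

# The vacuous mass function puts all mass on the frame itself.
vacuous = {frame: 1.0}

for name, mass_function in (("m", m), ("vacuous", vacuous)):
    for element in sorted(frame):
        subset = frozenset({element})
        print(name, element, bel(mass_function, subset), pl(mass_function, subset))
```

For the vacuous mass function every singleton receives the belief interval [0, 1], the widest interval possible, which agrees with the remark that it carries the largest uncertainty.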

In this section, two recently proposed definitions of cross entropy of mass functions are reviewed.

Dezert [20] has given an entropy definition to measure the uncertainty of a mass function on a FOD

with

where represents the natural logarithm, and for any .

On the basis of , Dezert and Dambreville [21] have further proposed a cross entropy of mass functions

with

where for any .

In addition, a relative entropy of mass functions was also proposed in [21] as below

It is proved in [21] that , , and can respectively degenerate into classical Shannon's entropy, cross entropy, and relative entropy (also known as Kullback-Leibler (KL) divergence), and they own a relation .

Gao et al. [22] presented another cross entropy definition of mass functions as below

in which the underlying entropy definition for mass functions is based on Deng's entropy [11] as follows

Since the relative entropy inspired by Deng's entropy is not defined at present, quantitative relation between and is not established yet.

In this subsection, the two cross entropy definitions of mass functions reviewed above are briefly analyzed.

At first, Gao et al.'s cross entropy has been questioned in [21] because the underlying Deng's entropy is non-effective. According to the four desiderata proposed in [19], "unicity of max value of MoU" (D4), i.e., for any in which is the vacuous mass function, is not satisfied by . In terms of Deng's entropy, given a FOD , the vacuous mass function, i.e., , does not have the maximum uncertainty. Please refer to literature [21, 31, 32] for more details.
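The failure of D4 for Deng's entropy can be checked numerically. The sketch below assumes the commonly cited base-2 form of Deng's entropy, in which each focal element's mass is divided by 2^|A| - 1 inside the logarithm; the function name `deng_entropy` and the example mass function `spread` are hypothetical and chosen only for this illustration.

```python
import math


def deng_entropy(m):
    """Deng's entropy (assumed form): -sum m(A) * log2(m(A) / (2^|A| - 1))."""
    return -sum(
        mass * math.log2(mass / (2 ** len(focal) - 1))
        for focal, mass in m.items()
        if mass > 0
    )


frame = frozenset({"a", "b"})

# Vacuous mass function: all mass on the whole frame.
vacuous = {frame: 1.0}

# A non-vacuous mass function whose masses are proportional to 2^|A| - 1.
spread = {frozenset({"a"}): 0.2, frozenset({"b"}): 0.2, frame: 0.6}

print(deng_entropy(vacuous))  # log2(3), about 1.585
print(deng_entropy(spread))   # log2(5), about 2.322
```

On this two-element frame the non-vacuous mass function obtains the larger value, so the vacuous mass function is not the unique maximizer under the assumed form.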

Secondly, based on a similar consideration, Dezert and Dambreville's cross entropy may also not be recommended since its underlying entropy measure violates the monotonicity [17] of a rational uncertainty measure in belief function theory. Specifically, the monotonicity means that holds if for arbitrary BPAs , defined on a same FOD . Literature [23] has first revealed the problem of , however the given counterexample in [23] is a little problem in the calculation of (specifically, is misused). In this paper, a real counterexample of on the monotonicity is given as below.

Given a FOD , and are two mass functions defined on , in which

Obviously, there are and for any . Therefore, it clearly should be in terms of the monotonicity. However, by means of the uncertainty measure expressed in nats, it obtains and . Namely, there is . Therefore, the monotonicity is violated by .

Based on the above analysis, a new definition of cross entropy of mass functions is required, which is exactly the purpose of this study.

In this paper, new cross and relative entropies of mass functions are presented on the basis of an uncertainty measure called plausibility entropy.

The plausibility entropy was recently proposed in [18], which is defined as

where is a mass function defined on FOD . Alternatively, the plausibility entropy can also be expressed in the form of Shannon's entropy

where is the plausibility transformation [24] of , satisfying , , and is Shannon's entropy of probability distribution on .
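As a rough illustration of the two expressions above, the following Python sketch computes the plausibility transformation (normalized singleton plausibilities) and a plausibility entropy. It assumes that the plausibility entropy weights the negative logarithm of each transformed value by the corresponding singleton plausibility, and that natural logarithms are used; this form, the log base, and all function names are assumptions made for illustration and should be checked against [18].

```python
import math


def singleton_pl(m, frame):
    """Plausibility of each single element of the frame."""
    return {x: sum(mass for focal, mass in m.items() if x in focal) for x in frame}


def plausibility_transform(m, frame):
    """Normalize the singleton plausibilities into a probability distribution."""
    pls = singleton_pl(m, frame)
    total = sum(pls.values())
    return {x: value / total for x, value in pls.items()}


def shannon_entropy(p):
    """Shannon's entropy in nats."""
    return -sum(value * math.log(value) for value in p.values() if value > 0)


def plausibility_entropy(m, frame):
    """Assumed form: -sum_x Pl(x) * log(transformed Pl(x))."""
    pls = singleton_pl(m, frame)
    transformed = plausibility_transform(m, frame)
    return -sum(pls[x] * math.log(transformed[x]) for x in frame if pls[x] > 0)


frame = ("a", "b")
bayesian = {frozenset({"a"}): 0.3, frozenset({"b"}): 0.7}
vacuous = {frozenset(frame): 1.0}
mixed = {frozenset({"a"}): 0.5, frozenset(frame): 0.5}

# For a Bayesian mass function the value coincides with Shannon's entropy.
print(plausibility_entropy(bayesian, frame), shannon_entropy(bayesian_p := plausibility_transform(bayesian, frame)))

# Equivalent "Shannon form": (sum of singleton plausibilities) * H(transformed Pl).
total = sum(singleton_pl(mixed, frame).values())
print(plausibility_entropy(mixed, frame), total * shannon_entropy(plausibility_transform(mixed, frame)))
```

Under the assumed form, the two printed pairs agree, which mirrors the statement that the plausibility entropy can be rewritten in terms of Shannon's entropy of the plausibility transformation.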

It can be proved that the plausibility entropy satisfies the four desiderata given in [19] for an effective measure of uncertainty (MoU): "zero min value of MoU" (D1), "increasing of MoU of vacuous BPA" (D2), "compatibility with Shannon's entropy" (D3), and "unicity of max value of MoU" (D4). Many other desirable properties are also satisfied by the plausibility entropy; please refer to [18, 25, 26] for more information. Based on the well-defined plausibility entropy, new cross and relative entropies of mass functions, named cross plausibility entropy and relative plausibility entropy respectively, are given.

Cross plausibility entropy. Given two mass functions and on a same FOD , a cross plausibility entropy, denoted as , is defined as

Moreover, the cross plausibility entropy can also be represented as

where and are the plausibility transformations of and respectively, and is the classical cross entropy between probability distributions and .

In terms of Eq. (4), a series of properties satisfied by the cross plausibility entropy can be obtained easily.

With the above definition of cross plausibility entropy, the relative entropy of mass functions can be defined in a similar manner. We note that reference [27] provides a KL divergence derived directly from the plausibility entropy; it is exactly the expected form of relative entropy and is introduced as follows.

Relative plausibility entropy. Let and be two mass functions defined on a FOD , a relative plausibility entropy, denoted as , is defined as follows

Similarly, the relative plausibility entropy can also be simply represented as

where is the classical relative entropy (KL divergence) between probability distributions and , i.e., .

From Eq. (6), some properties of the relative plausibility entropy are derived as below.

What's more, as same as the equality relation for probability distributions and in terms of Shannon's entropy, classical cross entropy and relative entropy, i.e., , the presented , , as well as plausibility entropy , of mass functions also meet the following equality relation
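The equality relation among the three quantities can be checked numerically with a small Python sketch. It assumes that the cross plausibility entropy weights the negative log of the second mass function's plausibility transformation by the first mass function's singleton plausibilities, and that the relative plausibility entropy uses the same weights on the log-ratio of the two transformations, with natural logarithms. These forms and all names below are assumptions for illustration, not a restatement of the paper's equations.

```python
import math


def singleton_pl(m, frame):
    """Plausibility of each single element of the frame."""
    return {x: sum(mass for focal, mass in m.items() if x in focal) for x in frame}


def normalize(values):
    """Scale a dictionary of non-negative numbers so that it sums to one."""
    total = sum(values.values())
    return {x: value / total for x, value in values.items()}


def cross_plausibility_entropy(m1, m2, frame):
    """Assumed form: -sum_x Pl_1(x) * log(transformed Pl_2(x))."""
    pl1 = singleton_pl(m1, frame)
    t2 = normalize(singleton_pl(m2, frame))
    # Note: log(0) is undefined, so t2[x] must be positive wherever pl1[x] > 0.
    return -sum(pl1[x] * math.log(t2[x]) for x in frame if pl1[x] > 0)


def relative_plausibility_entropy(m1, m2, frame):
    """Assumed form: sum_x Pl_1(x) * log(transformed Pl_1(x) / transformed Pl_2(x))."""
    pl1 = singleton_pl(m1, frame)
    t1 = normalize(pl1)
    t2 = normalize(singleton_pl(m2, frame))
    return sum(pl1[x] * math.log(t1[x] / t2[x]) for x in frame if pl1[x] > 0)


def plausibility_entropy(m, frame):
    """Under the assumed forms, the entropy is the cross entropy of m with itself."""
    return cross_plausibility_entropy(m, m, frame)


frame = ("a", "b")
m1 = {frozenset({"a"}): 0.5, frozenset(frame): 0.5}
m2 = {frozenset({"b"}): 0.4, frozenset(frame): 0.6}

lhs = cross_plausibility_entropy(m1, m2, frame)
rhs = plausibility_entropy(m1, frame) + relative_plausibility_entropy(m1, m2, frame)
print(lhs, rhs)
```

Under these assumed forms, the cross plausibility entropy equals the plausibility entropy plus the relative plausibility entropy, in the same way that Shannon's cross entropy decomposes into entropy plus KL divergence.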

In this section, an illustrative example of parameter estimation is provided to show the potential application of the proposed cross plausibility entropy. The example is taken from [28].

Assuming there are patients randomly taken from a population which has a proportion to suffer from a disease, and each of them is represented by to show if he/she has the disease (i.e., ) or not (i.e., ). Then, these random samples , which are independent and identically distributed (iid), can be viewed as the outcome of a Bernoulli variable. For realizations , the probability can be calculated by

The task is to estimate the unknown parameter according to state descriptions . However, due to the uncertainty, these states are only partially known, and let be the mass function concerning the state associated with patient . Table 1 gives a data set composed of observations, in which the fourth one is uncertain and represented by , , and , where .

| Observation | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| | 0.0 | 0.0 | 0.0 | | 1.0 | 1.0 |
| | 1.0 | 1.0 | 1.0 | | 0.0 | 0.0 |
| | 0.0 | 0.0 | 0.0 | | 0.0 | 0.0 |

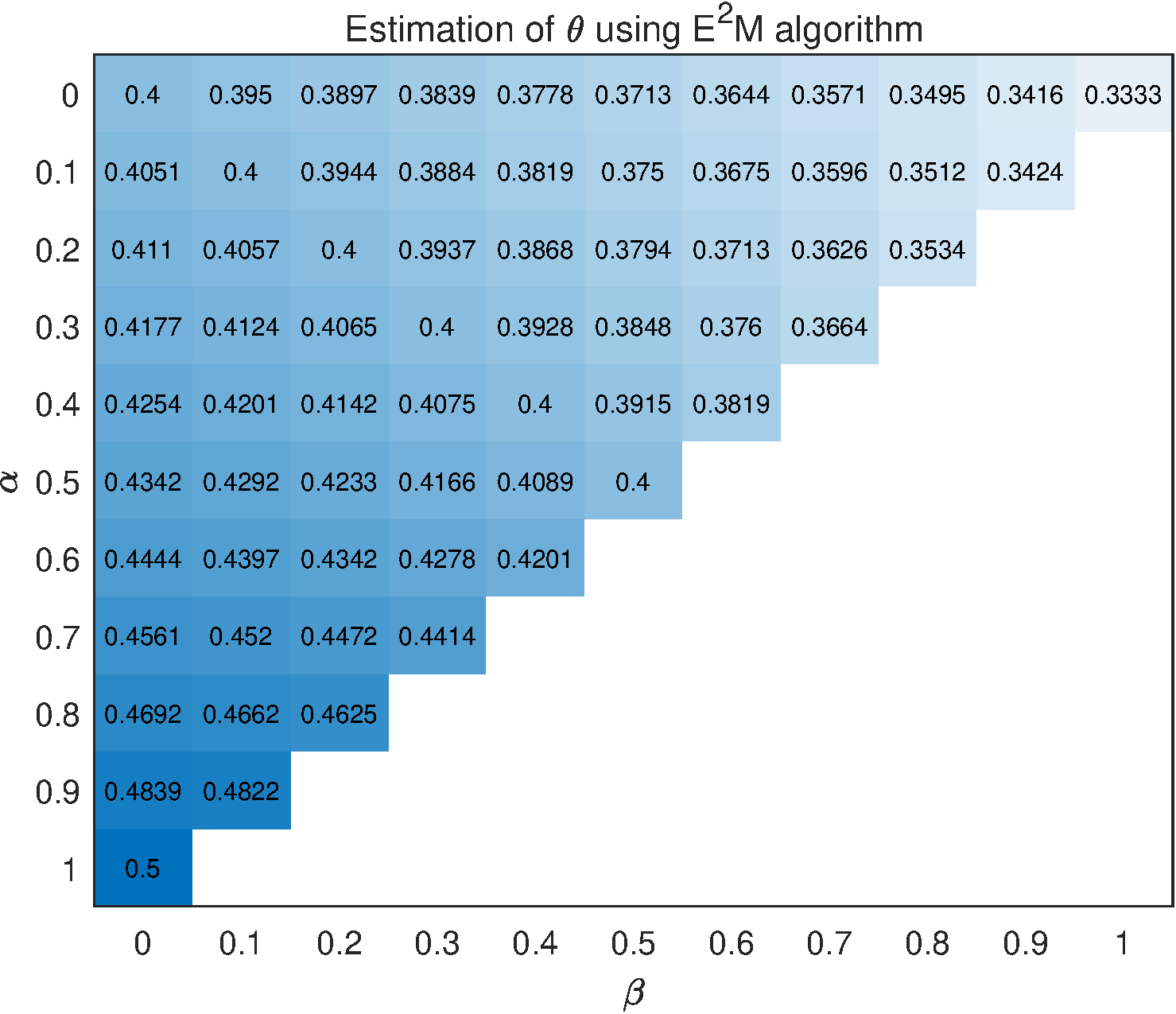

Literature [28] proposed an evidential expectation-maximization (E2M) algorithm to estimate the parameter , in terms of a maximum likelihood principle. Figure 1 gives the results of using the E2M algorithm with respect to different and . It is found that, with the E2M algorithm, the estimated is unchanged if the plausibility transformations of different caused by changed and are the same. For example, let and be , , , and , , , respectively. There are and , i.e., . Then, by using the E2M algorithm, parameter is estimated as associated with and associated with . Two observations with different uncertainty degrees, and , lead to the same estimation of .
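The insensitivity described above can be reproduced with a small sketch of an E2M-style iteration for Bernoulli data. This is a simplified illustration written under our own assumptions, not the implementation of [28]: each observation is summarized by its singleton plausibilities (Pl(0), Pl(1)), the E-step weights the current parameter by these plausibilities, and the M-step averages the expected values. The function names, the two example fourth observations, and the assignment of observations 1 to 3 to state 0 and observations 5 and 6 to state 1 are all hypothetical.

```python
def e2m_bernoulli(pls, theta=0.5, tol=1e-12, max_iter=10000):
    """Fixed-point iteration; `pls` is a list of (Pl(0), Pl(1)) pairs."""
    for _ in range(max_iter):
        # E-step: expected value of each observation under the current theta,
        # with the Bernoulli probabilities reweighted by the plausibilities.
        expected = [
            p1 * theta / (p1 * theta + p0 * (1.0 - theta)) for p0, p1 in pls
        ]
        # M-step: Bernoulli maximum likelihood on the expected values.
        updated = sum(expected) / len(expected)
        if abs(updated - theta) < tol:
            return updated
        theta = updated
    return theta


def dataset(fourth):
    """Six observations; only the fourth one is uncertain (assumed layout)."""
    return [(1.0, 0.0)] * 3 + [fourth] + [(0.0, 1.0)] * 2


# Two hypothetical fourth observations with the same plausibility transformation
# (0.25, 0.75) but different amounts of non-specificity.
bayesian = (0.25, 0.75)           # m({0}) = 0.25, m({1}) = 0.75
nonspecific = (1.0 / 3.0, 1.0)    # m({1}) = 2/3, m({0, 1}) = 1/3

print(e2m_bernoulli(dataset(bayesian)))
print(e2m_bernoulli(dataset(nonspecific)))

# An unbiased fourth observation, Pl(0) = Pl(1), for any common value.
print(e2m_bernoulli(dataset((0.7, 0.7))))
```

In this sketch the first two estimates coincide because the E-step depends only on the ratio Pl(1) : Pl(0), that is, on the plausibility transformation. For an unbiased fourth observation the iteration converges to 0.4 under the assumed data layout.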

Now, let us use a cross entropy-based method to solve the issue of estimating parameter , where is derived by minimizing a total cross entropy loss, which coincides with the maximum likelihood principle widely used in machine learning.

For the data set shown in Table 1, since there is uncertain observation involving epistemic uncertainty, the proposed cross plausibility entropy is used to calculate the total cross entropy loss . Let be a distribution relying on parameter with and , then

By letting , we have

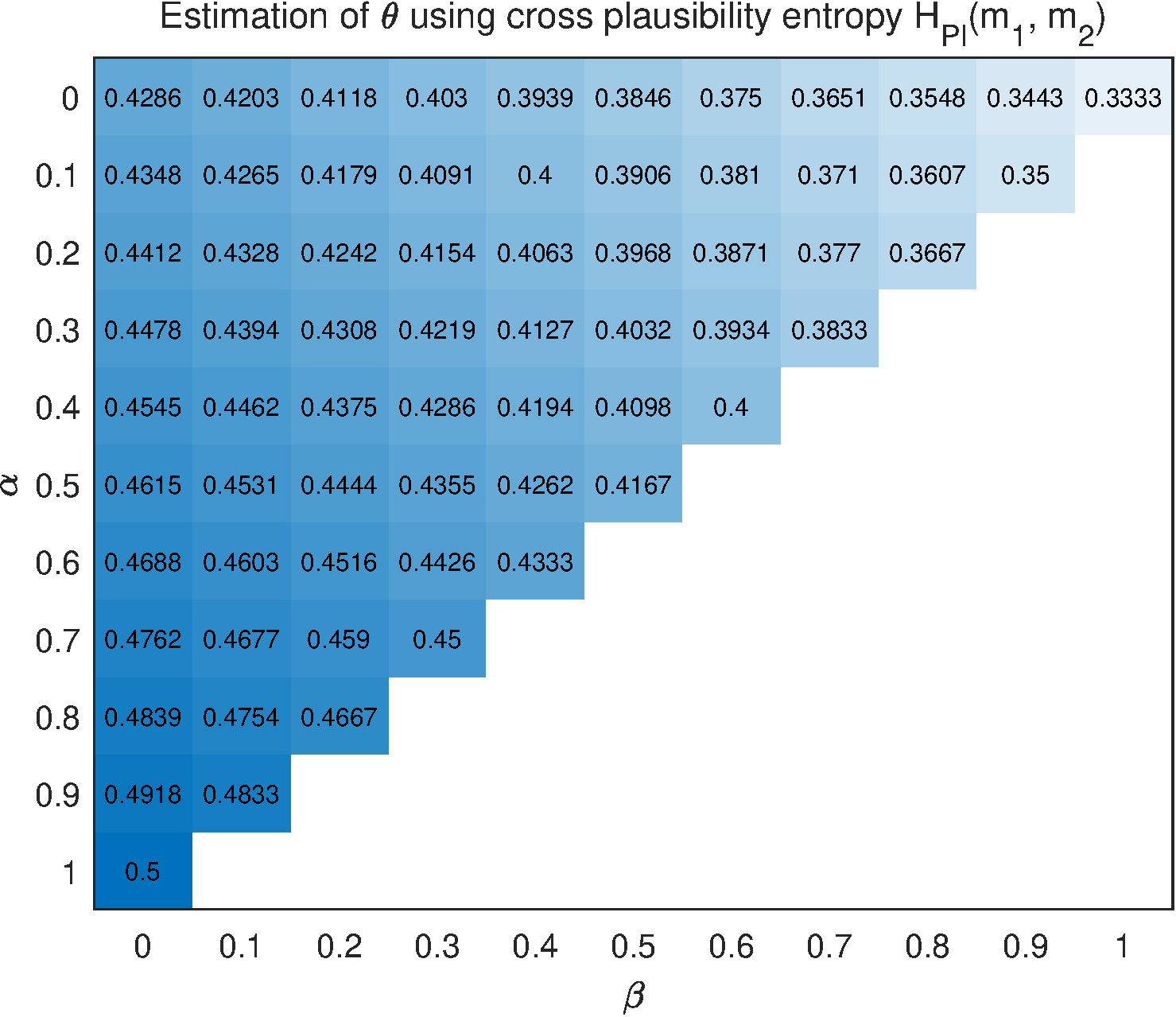

Figure 2 shows the results of using the proposed cross plausibility entropy with the consideration of different and . Compared with the results of the E2M algorithm, the estimated changes as takes different uncertainty degrees. For example, for the cases of and , it is obtained that and .
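The minimization described above can be sketched in closed form under stated assumptions. If the cross plausibility entropy weights the negative log of the Bernoulli probabilities by the singleton plausibilities of each observation, the total loss is minus the sum over observations of Pl_i(1) log(theta) + Pl_i(0) log(1 - theta), and setting its derivative to zero gives theta as the sum of Pl_i(1) divided by the sum of all singleton plausibilities. The assumed loss form, the function names, the argument names `m0`, `m1`, `m01`, and the layout of the data set (observations 1 to 3 in state 0, observations 5 and 6 in state 1) are illustrative assumptions.

```python
def fourth_observation(m0, m1, m01):
    """Singleton plausibilities (Pl(0), Pl(1)) of a mass function on {0, 1}."""
    return (m0 + m01, m1 + m01)


def dataset(m0, m1, m01):
    """Six observations as (Pl(0), Pl(1)) pairs; only the fourth is uncertain."""
    certain_zero = (1.0, 0.0)
    certain_one = (0.0, 1.0)
    return [certain_zero] * 3 + [fourth_observation(m0, m1, m01)] + [certain_one] * 2


def estimate_theta(pls):
    """Minimizer of the assumed total loss: sum Pl_i(1) / sum (Pl_i(0) + Pl_i(1))."""
    total_zero = sum(p0 for p0, _ in pls)
    total_one = sum(p1 for _, p1 in pls)
    return total_one / (total_zero + total_one)


# Unbiased fourth observation: equal mass a on {0} and {1}, the rest on {0, 1}.
for a in (0.0, 0.25, 0.5):
    print(a, estimate_theta(dataset(a, a, 1.0 - 2.0 * a)))
```

With this layout the estimate moves from 3/7 for a vacuous fourth observation to 2.5/6 for a Bayesian one, so it responds to the uncertainty degree of the fourth observation even when its plausibility transformation stays the same.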

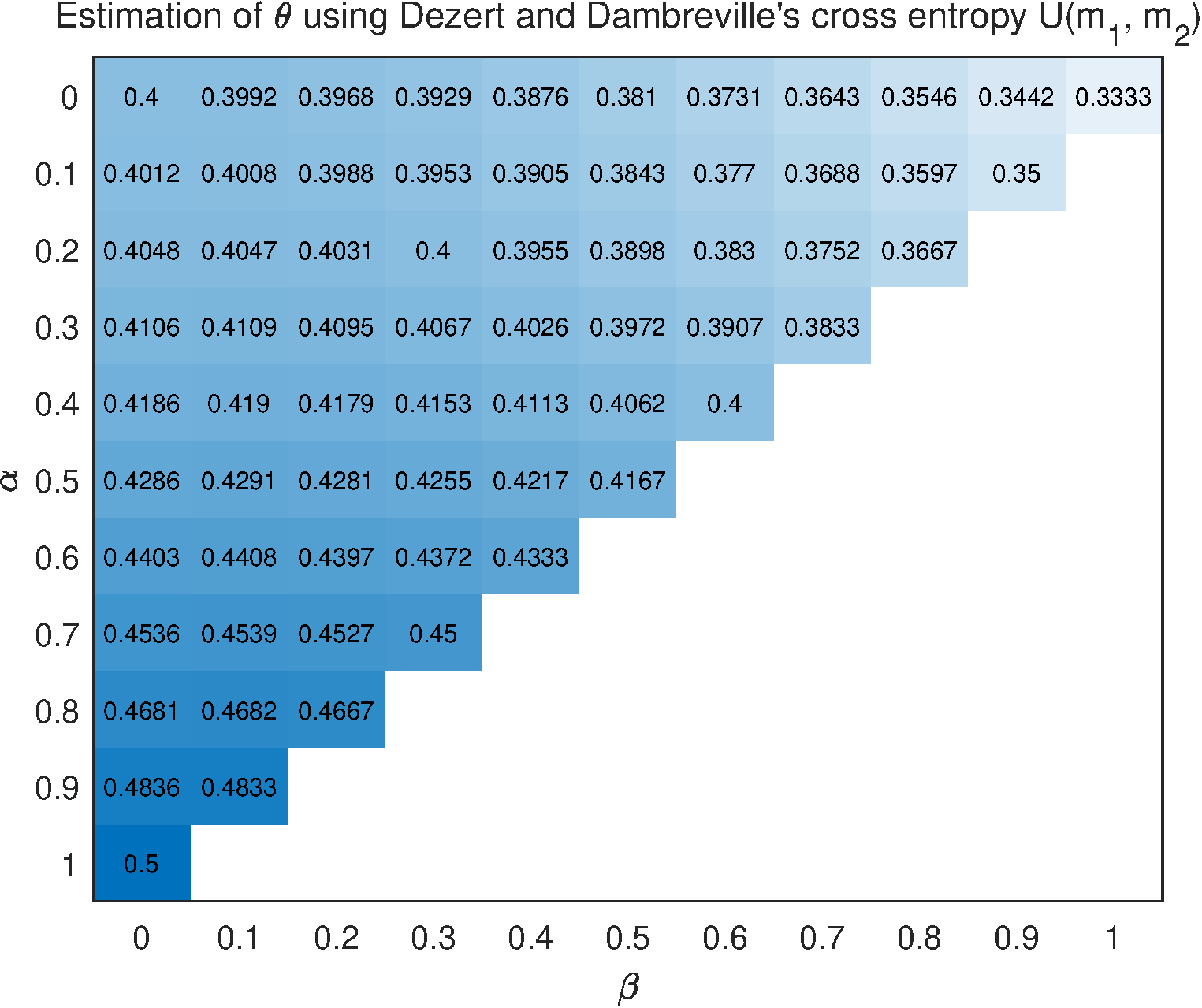

For the comparison, Dezert and Dambreville's cross entropy is also used in the example to obtain the estimation of parameter . Let be the estimation of , in which , , and , where . Then, in terms of Dezert and Dambreville's cross entropy, the total loss is

By means of , it obtains

where . Figure 3 graphically shows the results obtained by using Dezert and Dambreville's cross entropy .

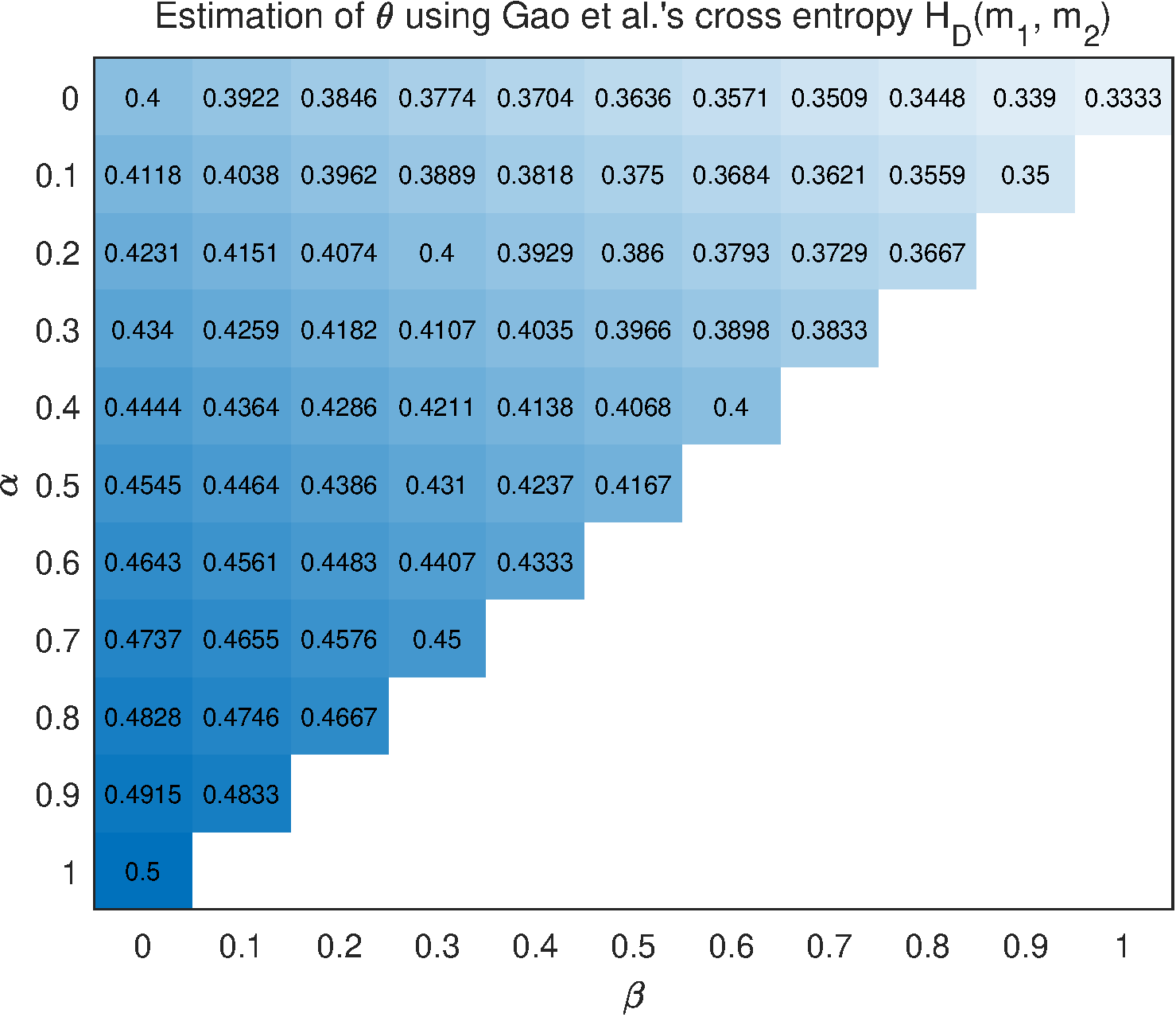

The cross entropy of Gao et al. [22] is also used in the example. Similarly, a total loss is calculated as follows

Then, the estimation of is derived via as below

in which . The results with the use of cross entropy are shown in Figure 4.

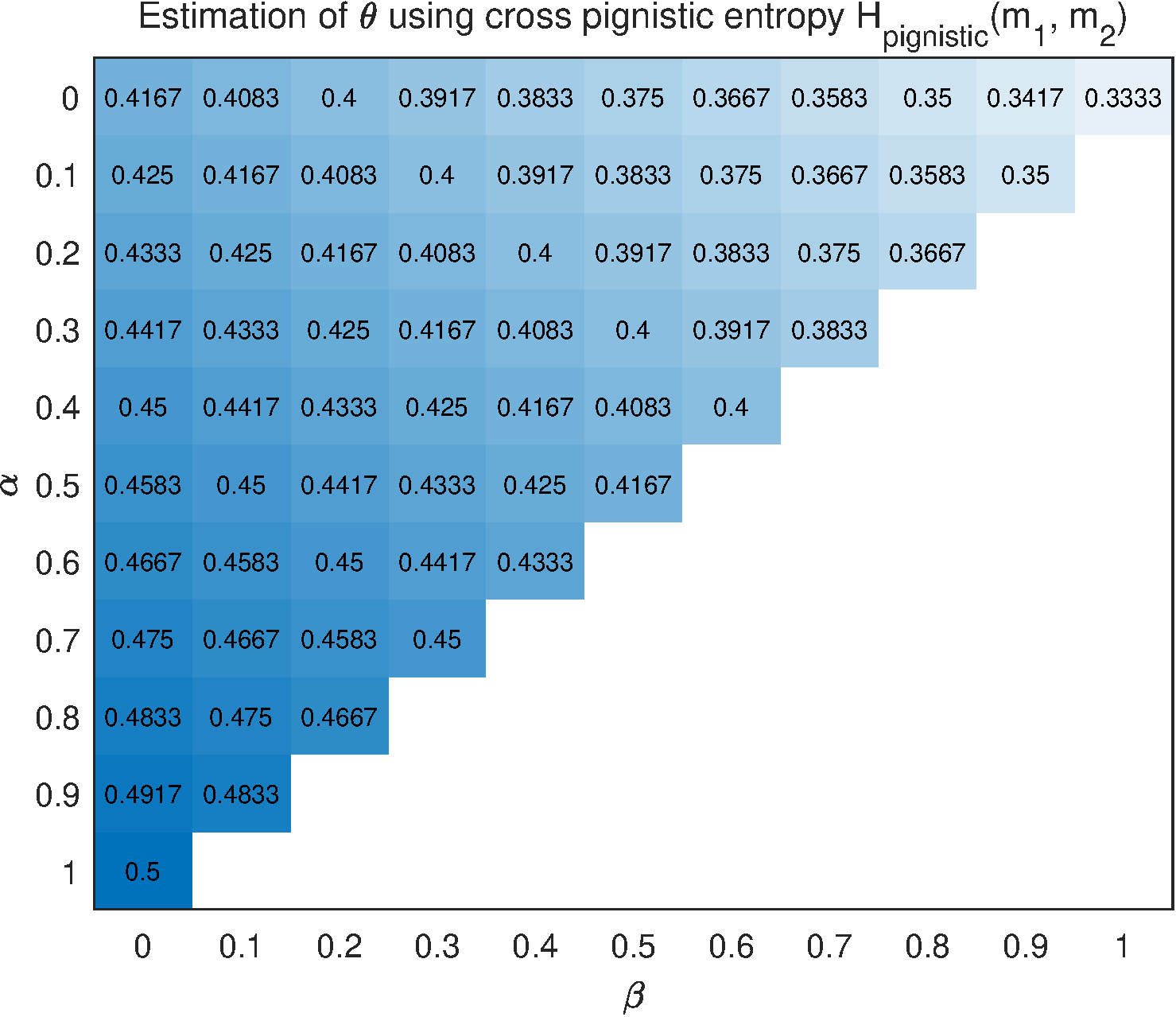

In addition, cross entropies inspired by two widely used entropies of mass functions, pignistic entropy [29, 4] and Yager entropy [30], are also considered for comparison. In terms of the formula of pignistic entropy , where , cross pignistic entropy is naturally defined as . Then, a total cross entropy loss can be obtained by

where and . By letting , we have

whose graphical results with different values of and are given in Figure 5.

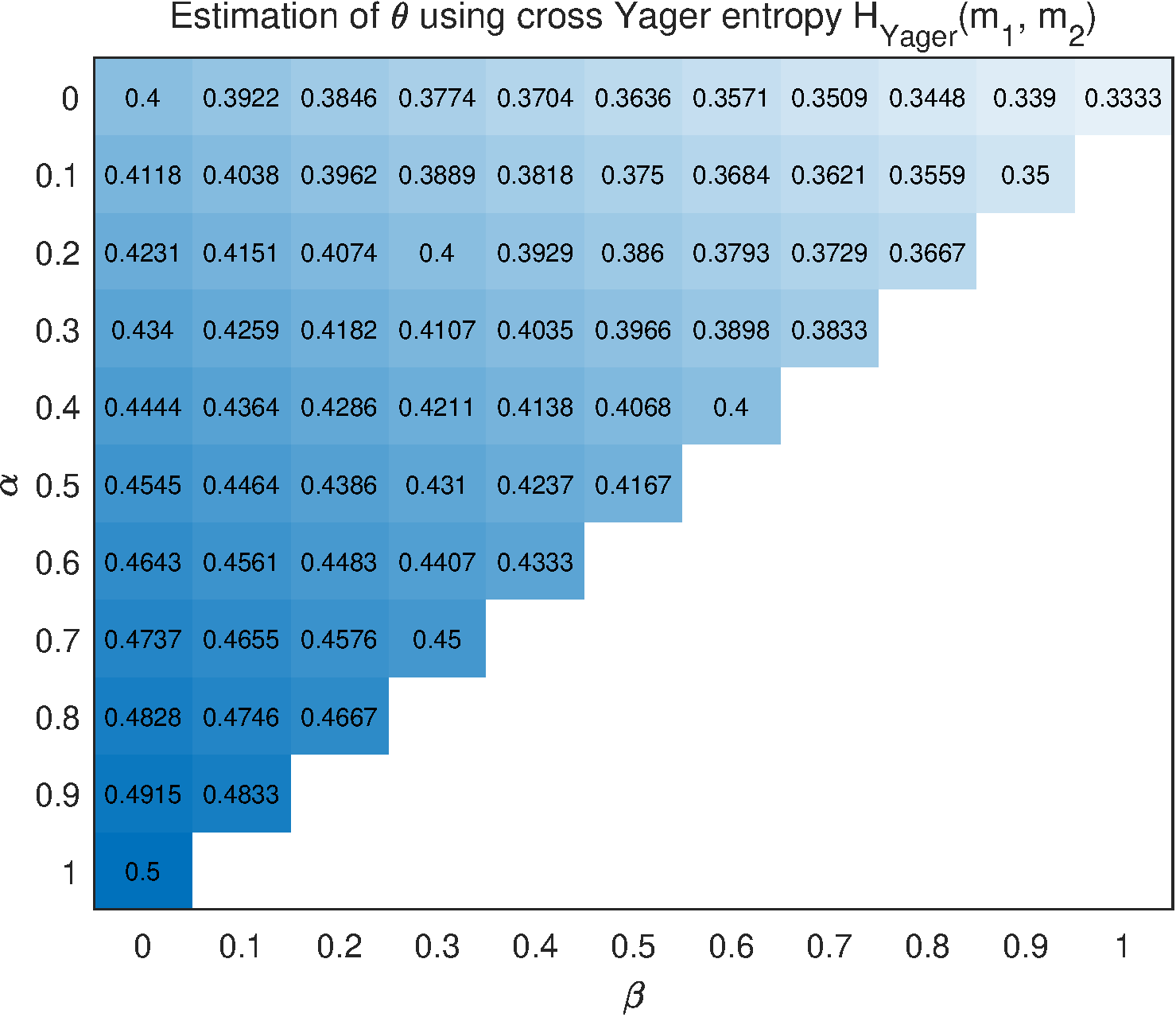
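The pignistic variant can be sketched in the same way. The pignistic transformation spreads the mass of each focal element evenly over its elements; assuming the cross pignistic entropy is Shannon's cross entropy between the pignistic probabilities of the observation and the Bernoulli distribution, minimizing the total loss gives the average of the pignistic probabilities of state 1. The function names and the layout of the data set (observations 1 to 3 in state 0, observations 5 and 6 in state 1) are assumptions made for illustration.

```python
FRAME = (0, 1)


def pignistic(m, frame):
    """Pignistic probability: each focal element shares its mass equally."""
    betp = {x: 0.0 for x in frame}
    for focal, mass in m.items():
        for x in focal:
            betp[x] += mass / len(focal)
    return betp


def dataset(m0, m1, m01):
    """Six mass functions on {0, 1}; only the fourth one is uncertain."""
    state_zero = {frozenset({0}): 1.0}
    state_one = {frozenset({1}): 1.0}
    fourth = {frozenset({0}): m0, frozenset({1}): m1, frozenset({0, 1}): m01}
    return [state_zero] * 3 + [fourth] + [state_one] * 2


def estimate_theta_pignistic(masses):
    """Minimizer of the assumed total cross pignistic loss: mean of BetP_i(1)."""
    return sum(pignistic(m, FRAME)[1] for m in masses) / len(masses)


# Unbiased fourth observation with varying non-specificity.
for a in (0.0, 0.25, 0.5):
    print(a, estimate_theta_pignistic(dataset(a, a, 1.0 - 2.0 * a)))
```

For an unbiased fourth observation the pignistic probabilities are always (0.5, 0.5), so this estimate stays at 2.5/6 regardless of how much mass is placed on the whole frame.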

Similarly, according to the expression of Yager entropy , cross Yager entropy is defined as . Then, the corresponding total cross entropy loss is

By calculating , it obtains

which is same with that is obtained by using cross entropy . Figure 6 shows the results of using cross Yager entropy by considering different and .

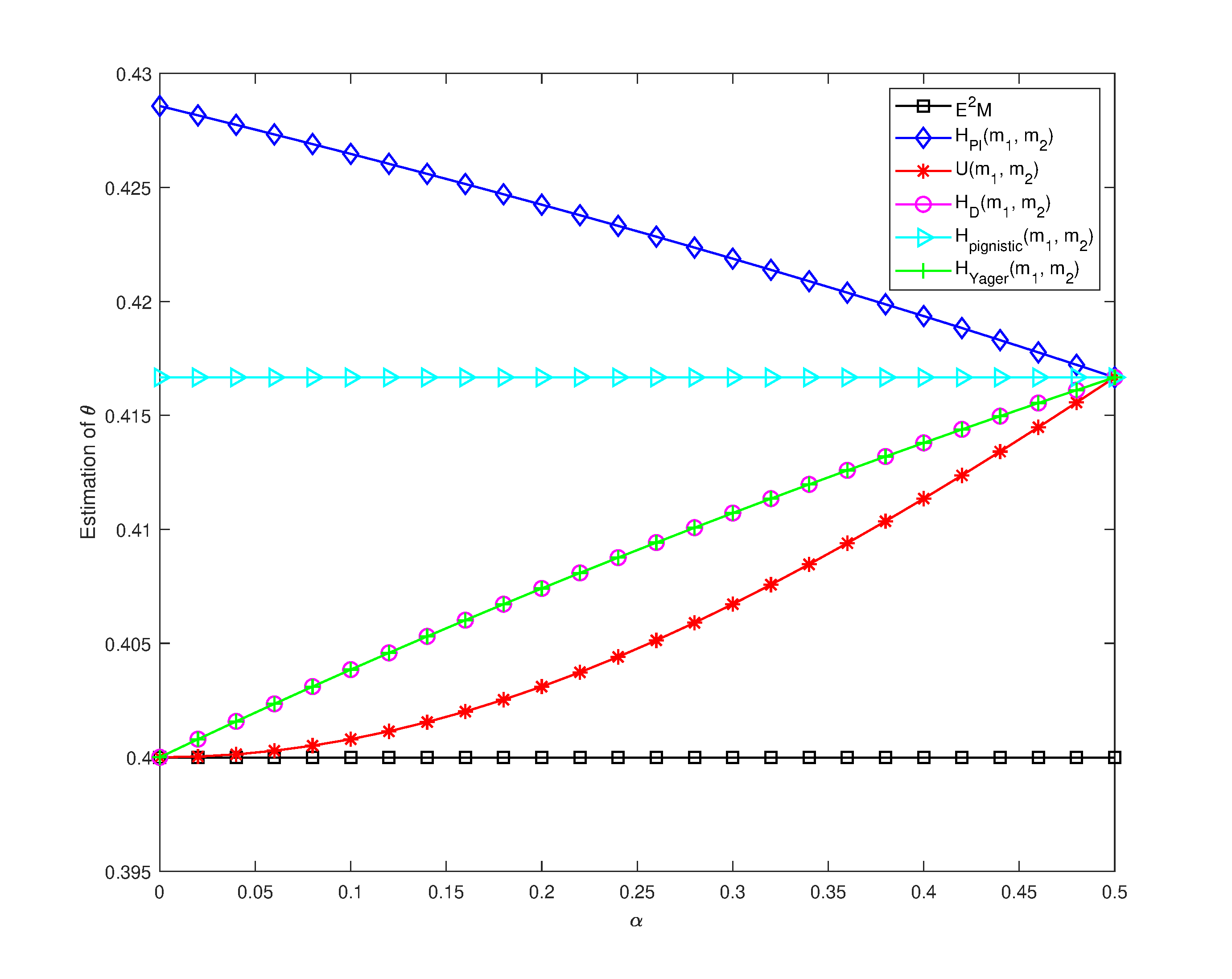

For the sake of further comparison of these methods, by letting , the uncertain observation becomes and , where . If , has the maximum uncertainty, and the uncertainty of is the least while . And it is noted that there is not any preference in between states and because and . Figure 7 shows the estimation results of by using different methods for the case of new in which .

| Observation | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| | 0.0 | 0.0 | 0.0 | | 1.0 | 1.0 |
| | 1.0 | 1.0 | 1.0 | | 0.0 | 0.0 |

In theory, if we only have observations 1,2,3,5,6, in terms of the maximum likelihood principle, the estimation of should be . With the consideration of observation 4, i.e., unbiased : (i)When , the estimation of should lie in the interval (whose midpoint is ) since the existence of epistemic uncertainty in observation 4 with ; (ii) When , the estimation of should be since in this case the example becomes a mixture model for a two-dimensional Bernoulli distribution with in which there is only random uncertainty; (iii)In the process of increasing 's value from 0 to 0.5, the value of should be changing monotonically, because for the unbiased the only change is its uncertainty degree which is decreasing monotonically; (iv)Therefore, there is a path for the estimation of from start point to end point .

From Figure 7, the result of the E2M algorithm is not reasonable since the estimated is 0.4 when . Cross pignistic entropy produces a result that is insensitive to the change of observation . The proposed cross plausibility entropy gives a value of that declines monotonically as rises, while Dezert and Dambreville's cross entropy , cross entropy , and cross Yager entropy present the opposite trend. The difference between the result of and those of , , and is caused by the underlying logic of the different entropy measures. The plausibility entropy is based on the plausibility function and tends to take the maximum uncertainty degree that a mass function could possibly have; therefore, the cross plausibility entropy gives a relatively large estimate of . In contrast, it seems that , and tend to give relatively small estimates of .

Compared with , and , the proposed is more recommended in theory because, first, the underlying entropy definitions of the former have some defects, as analyzed in Section 3 and related references [19, 5], and, second, the underlying mechanism of using cross plausibility entropy to obtain the estimation of is clearer. In this example, with the use of cross plausibility entropy , the classical Bernoulli distribution based on probabilities is generalized to a new Bernoulli distribution with plausibility distribution observations, as shown in Table 2. According to Table 2, the estimation of parameter can be obtained immediately as . Moreover, this Bernoulli distribution with plausibility distribution observations can be easily extended to the case of a multi-dimensional Bernoulli distribution.

In this paper, new definitions of cross entropy and relative entropy of mass functions have been given on the basis of a recently presented total uncertainty measure of mass functions called plausibility entropy. Properties of the cross plausibility entropy and relative plausibility entropy have also been presented. In addition, an illustrative application example has been provided to show the effectiveness of the presented entropies compared with other methods and entropy definitions of mass functions. The presented cross plausibility entropy and relative plausibility entropy of mass functions can be used in multi-source information fusion scenarios based on Dempster-Shafer evidence theory, such as target recognition and fault diagnosis.

In future work, on the one hand, more theoretical analysis of the presented cross and relative plausibility entropies will be conducted; on the other hand, practical applications of the cross and relative plausibility entropies to multi-sensor information fusion, decision support systems, intelligent diagnosis, and so forth will be further considered.

Copyright © 2025 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

