ICCK Transactions on Emerging Topics in Artificial Intelligence

ISSN: 3068-6652 (Online)


Enterprises across industries are undergoing a paradigm shift, moving away from monolithic legacy systems toward agile, cloud-native architectures. This transformation is driven by the convergence of Artificial Intelligence (AI) and cloud computing, which together are redefining how modern enterprises operate, innovate, and scale [1, 2]. As organizations strive to improve agility, achieve operational intelligence, and harness data for real-time decision-making, cloud-based AI solutions have emerged as key drivers of enterprise modernization [3, 4].

As illustrated in Figure 1, the convergence of AI and cloud computing forms the foundation of enterprise modernization. Cloud-based AI solutions give enterprises scalable, on-demand access to AI capabilities, ranging from machine learning (ML) and natural language processing (NLP) to computer vision and predictive analytics, without requiring extensive in-house infrastructure or specialized expertise. The AI-as-a-Service (AIaaS) platforms offered by leading cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) have democratized advanced AI, making it more cost-efficient and enterprise-ready [5].

Cloud-native is an approach to building and running applications that fully exploits cloud infrastructure, and it rests on architectural principles such as microservices, containerization, and serverless computing. These principles let enterprises rapidly develop and deploy scalable, intelligent applications, facilitating automation, personalization, and adaptive, data-driven decision-making across organizational functions [6].

The benefits of integrating AI with cloud-native platforms are multifaceted: enhanced scalability, reduced time-to-market, improved resource utilization, and superior user experiences. At the same time, modern enterprises must navigate complex challenges such as data privacy, regulatory compliance, latency, and AI model governance—particularly in domains like healthcare, finance, and public administration [7, 8].

This study presents a comprehensive investigation into how cloud-based AI solutions are enabling scalable, intelligent, and responsible enterprise transformation. It explores emerging technologies—including AI-as-a-Service, federated learning, and edge-cloud synergy—as well as cloud-native architectures and deployment models that support enterprise-wide modernization. Real-world use cases are analyzed across sectors such as healthcare, finance, and manufacturing, alongside emerging applications like generative AI and autonomous enterprise agents.

Enterprise modernization increasingly rests on a convergence of cloud computing, artificial intelligence (AI), and scalable architecture. This section outlines the foundational technologies and architectural frameworks that support intelligent, scalable AI solutions in cloud-native enterprise ecosystems.

At the heart of cloud-based AI systems are flexible infrastructure layers and intelligent processing engines. Key components include:

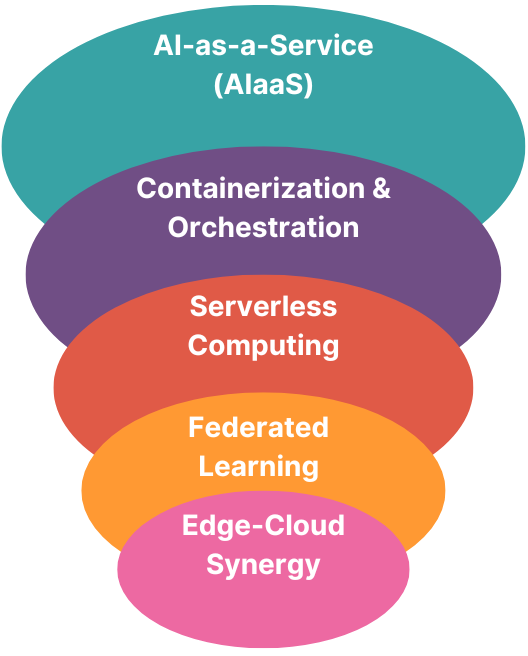

As shown in Figure 2, the cloud-based AI technology stack can be represented as a funnel in which each level builds on the one before it.

AI-as-a-Service (AIaaS) platforms, such as AWS SageMaker, Azure Machine Learning, and Google Cloud Vertex AI, offer prebuilt models, APIs, and managed environments for AI deployment [5, 3].

Containerization and Orchestration, using tools such as Docker and Kubernetes, allow modular, isolated, and scalable deployment of AI workloads [9].

Serverless Computing frameworks, such as AWS Lambda and Azure Functions, enable event-driven execution of AI functions without requiring infrastructure provisioning [6].

Federated Learning (FL) allows decentralized model training across data sources, preserving privacy and enhancing compliance in sensitive environments [10].

Edge-Cloud Synergy runs lightweight AI models on edge devices that synchronize with cloud backends for real-time analytics and feedback [7].

Together, these technologies enable elastic, intelligent, and secure AI solutions that align with enterprise goals such as agility, automation, and regulatory compliance.
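To make the federated learning idea concrete, the following is a minimal sketch of federated averaging (FedAvg), one widely used aggregation scheme; the source does not prescribe a specific algorithm. It assumes a toy one-feature linear model and two hypothetical clients (`hospital_a`, `hospital_b`); all function names are illustrative. Each client trains on its own data, and only model weights, never raw samples, reach the aggregating server.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Sample = Tuple[float, float]  # (feature x, target y)


def local_update(weights: Weights, data: Sequence[Sample], lr: float = 0.1) -> Weights:
    """One client's training pass on its private data (SGD on y = w0 + w1 * x).

    Only the updated weights leave the client; the raw samples never do.
    """
    w0, w1 = weights
    for x, y in data:
        error = (w0 + w1 * x) - y
        w0 -= lr * error
        w1 -= lr * error * x
    return [w0, w1]


def federated_average(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Server-side aggregation: average client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def federated_round(global_weights: Weights, client_datasets: List[List[Sample]]) -> Weights:
    """One round: broadcast the global model, train locally, aggregate the updates."""
    updates = [local_update(list(global_weights), data) for data in client_datasets]
    sizes = [len(data) for data in client_datasets]
    return federated_average(updates, sizes)


if __name__ == "__main__":
    # Two hypothetical institutions whose private data both follow y = 1 + 2x.
    hospital_a = [(0.0, 1.0), (0.5, 2.0)]
    hospital_b = [(1.0, 3.0), (0.25, 1.5)]
    weights: Weights = [0.0, 0.0]
    for _ in range(1000):
        weights = federated_round(weights, [hospital_a, hospital_b])
    print(weights)  # should approach [1.0, 2.0]
```

Weighting by dataset size keeps clients with more data from being underrepresented. Production systems would add secure aggregation, client sampling, and communication handling, which this sketch omits.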

To fully realize the benefits of the technologies listed above, enterprises must adopt robust architectural foundations. Cloud-native architectural frameworks ensure that AI capabilities can be integrated, scaled, and maintained efficiently over time. Common patterns include:

Microservices Architecture, where AI services are deployed as loosely coupled components that communicate via APIs, supporting independent scaling and maintenance.

Data Lakehouse Architecture, which integrates the flexibility of data lakes with the performance of data warehouses to support real-time ingestion of structured and unstructured data into AI pipelines.

Event-Driven Architecture (EDA), which enables real-time automation and reactive AI behavior through message queues and brokers such as Apache Kafka or cloud-native alternatives.

Zero-Trust Security and AI Governance Models, which embed data protection, model transparency, and policy compliance into every stage of the AI lifecycle [11, 12].

These architectural frameworks enable the deployment, integration, and scaling of intelligent AI solutions [13] within modern enterprise systems. By bridging the gap between technological capability and organizational goals, they form the backbone of enterprise-wide digital transformation.
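The event-driven pattern can be illustrated with a small in-process sketch. `InMemoryBroker` is a hypothetical stand-in for a broker such as Apache Kafka and does not mirror Kafka's API; the topic names, the vibration field, and the threshold-based "scoring" rule are placeholders for a real AI service. The point is the decoupling: producers publish events without knowing which services consume them.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]
Handler = Callable[[Event], None]


class InMemoryBroker:
    """Toy, single-process stand-in for a message broker (not Kafka's API)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # The producer does not know which services consume the event;
        # this decoupling lets each consumer scale and evolve independently.
        for handler in list(self._subscribers.get(topic, [])):
            handler(event)


broker = InMemoryBroker()
maintenance_tickets: List[Event] = []


def scoring_service(event: Event) -> None:
    """Hypothetical AI microservice: scores a reading and emits an alert event."""
    score = float(event["vibration_mm_s"]) / 10.0  # placeholder for a real model
    if score > 0.8:
        broker.publish("maintenance.alerts", {"machine": event["machine"], "score": score})


broker.subscribe("sensor.readings", scoring_service)
broker.subscribe("maintenance.alerts", maintenance_tickets.append)

broker.publish("sensor.readings", {"machine": "press-1", "vibration_mm_s": 4.0})
broker.publish("sensor.readings", {"machine": "press-2", "vibration_mm_s": 9.5})
```

After the two publications, only `press-2` produces a maintenance ticket. A real deployment would add persistence, partitioning, and delivery guarantees that an in-memory loop does not provide.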

The integration of edge computing with cloud-based AI is emerging as a critical enabler for real-time, scalable, and resilient enterprise applications. This synergy allows computationally light AI models to run on edge devices—such as IoT sensors, mobile gateways, or embedded controllers—while offloading resource-intensive model training and orchestration tasks to the cloud.

Key advantages of cloud-edge synergy include:

Low Latency Inference: Time-sensitive decisions, such as predictive maintenance or anomaly detection, can be executed at the edge, reducing round-trip latency and network dependency.

Bandwidth Optimization: By preprocessing or filtering data locally, enterprises reduce the volume of data transmitted to the cloud—minimizing costs and congestion.

Contextual AI: Edge devices can incorporate real-time environmental or sensor context into AI inference, enabling more localized decision-making.

Privacy Preservation: Sensitive data can be processed locally or used in federated learning, avoiding exposure to cloud platforms while still contributing to collaborative AI models [10].

Cloud providers increasingly offer edge-compatible services, such as AWS IoT Greengrass and Azure IoT Edge, that run containerized AI workloads and synchronize with cloud-based MLOps systems, alongside edge inference accelerators such as Google's Edge TPU [14].
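The low-latency and bandwidth advantages listed above can be sketched as an edge-side filter that scores every reading locally and uploads only the anomalous ones. This is a hypothetical illustration, not the API of AWS IoT Greengrass or Azure IoT Edge: the rolling z-score rule, the window size, and the `forwarded` list (standing in for the cloud uplink) are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, List


class EdgeFilter:
    """Hypothetical edge-side filter: decides locally, forwards only anomalies."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_history: int = 5) -> None:
        self.history: Deque[float] = deque(maxlen=window)  # recent local readings
        self.z_threshold = z_threshold
        self.min_history = min_history
        self.forwarded: List[float] = []  # stands in for data sent to the cloud

    def process(self, reading: float) -> bool:
        """Score one reading on-device; return True if it was flagged and forwarded."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.z_threshold:
                is_anomaly = True  # local decision, no cloud round trip needed
        self.history.append(reading)
        if is_anomaly:
            self.forwarded.append(reading)  # only the interesting data crosses the network
        return is_anomaly


if __name__ == "__main__":
    edge = EdgeFilter()
    temperatures = [20.0, 20.5, 19.8, 20.2, 20.1, 19.9, 20.3, 35.0, 20.0]
    flags = [edge.process(t) for t in temperatures]
    print(f"forwarded {len(edge.forwarded)} of {len(temperatures)} readings: {edge.forwarded}")
```

In this toy run only the spike is uploaded, which is the bandwidth argument in miniature; in practice the cloud would also receive periodic summaries and would retrain the edge model.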

As summarized in Table 1, the core technologies enabling cloud-based AI differ in their functionality, benefits, and limitations. This comparison helps contextualize their strategic relevance to enterprise modernization efforts.

| Technology | Benefits | Limitations |
|---|---|---|
| AI-as-a-Service (AIaaS) | Fast deployment, access to pretrained models, low entry barrier | Possible vendor lock-in; limited customization |
| Containerization | Modular, portable deployment across environments | Adds orchestration complexity |
| Serverless Computing | Auto-scaling and event-driven processing reduce cost | Limited execution duration; cold-start latency |
| Federated Learning | Privacy-preserving training across organizations | Synchronization and communication overhead |
| Edge-Cloud Synergy | Real-time, low-latency inference | Constrained by edge device capacity and connectivity |

Recent research has emphasized the importance of cloud-native architectures in enabling scalable AI deployment across enterprises. McKinsey (2024) and Forbes Technology Council (2024) examined how AI-as-a-Service (AIaaS) is reshaping enterprise infrastructure by simplifying deployment and accelerating innovation [1, 2]. Softweb Solutions (2025) outlined deployment patterns for containerized AI services, while AWS (2024) demonstrated enterprise-scale implementations using Infosys Live Enterprise models [3, 5].

Anbalagan (2024) contributed a flexible framework highlighting elastic resource provisioning and microservices to support distributed AI workloads [9]. CIO (2024) explored how Generative AI can enhance cloud-native platforms by enabling adaptive enterprise applications [6].

These cloud-native innovations provide the scalable infrastructure needed to support intelligent systems at enterprise scale—an essential building block of modern digital transformation.

Recent advancements also highlight emerging directions in cloud-based AI. IBM's Watsonx (2023) exemplifies enterprise-grade AI orchestration with built-in governance and MLOps capabilities [15]. HTEC (2025) discusses digital platform capabilities that fuse AI and cloud-native tools to accelerate modernization [16]. Additionally, cross-cloud portability and model retraining strategies for edge-to-cloud pipelines are gaining momentum [8]. These works point to a future where AI services are modular, traceable, and seamlessly deployable across hybrid infrastructures.

Beyond architectural enablers, ethical and governance issues—such as bias, fairness, and explainability—have become increasingly central to AI deployment in cloud environments. Explainable AI (XAI), which seeks to make model decisions interpretable to human users, is gaining traction as a trust-enhancing mechanism in enterprise settings. Bura et al. [11] proposed a trust-centric framework for XAI to enhance transparency and organizational adoption of AI technologies.

Additionally, Bura et al. [12] developed ethical prompt engineering techniques to mitigate bias and ensure fairness in AI-generated content, an area of growing concern as Generative AI becomes mainstream. In a related domain, federated learning has been reviewed as a privacy-preserving method that supports compliance with data governance policies while enabling collaborative model training across cloud infrastructures [10, 17, 18].

These ethical frameworks and privacy-preserving methods reinforce the trustworthiness of enterprise AI, making them crucial components of any responsible modernization strategy.

Another critical stream of research focuses on AI-driven enhancements to enterprise information systems. Myakala et al. [10] surveyed federated learning as a privacy-preserving technique for enabling collaborative AI across distributed data environments. Their insights emphasize how decentralized intelligence supports scalability while respecting data locality and compliance.

These system-level approaches illustrate how intelligent cloud-based AI services can align with enterprise goals such as resilience, privacy, and decision support.

Although these studies provide critical insights across infrastructure, ethics, and enterprise intelligence, most focus on isolated domains. The literature still lacks a unified framework that holistically connects cloud-native AI architectures, ethical governance models, and real-world applications tailored for enterprise modernization.

This study fills that gap by offering a comprehensive, multi-dimensional roadmap for building scalable, intelligent, and responsible AI systems in enterprise settings. By integrating architectural best practices, governance principles, and operational case studies, it aims to advance both the theoretical and practical understanding of AI-powered enterprise transformation.

The integration of AI with cloud-native technologies is not merely theoretical—it is actively transforming how enterprises operate, innovate, and compete. This section presents representative use cases across industries that demonstrate the practical application of cloud-based AI solutions in modern enterprise environments.

Cloud-based AI enables real-time diagnostic systems that process imaging, clinical records, and sensor data at scale. Hospitals and health tech platforms use AIaaS offerings and edge-cloud integration to deploy intelligent diagnostic tools while maintaining patient privacy. Federated learning, in particular, allows healthcare institutions to train AI models collaboratively without sharing sensitive patient data [10]. These systems support early detection, continuous monitoring, and personalized treatment planning.

Financial institutions leverage scalable AI platforms on the cloud to detect fraudulent transactions, assess credit risk, and predict customer behavior. Serverless computing frameworks enable real-time scoring models, while microservices architectures allow individual model components to evolve independently. With compliance and explainability critical in finance, Explainable AI (XAI) frameworks are increasingly integrated to support regulatory audits and build trust [11, 19].
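For a linear or logistic scoring model, one simple and exact form of explainability is to report each feature's contribution `w_i * x_i` to the log-odds, since these terms sum (with the bias) to the model's raw score. The sketch below assumes a hypothetical logistic fraud model with made-up feature names and weights; the source does not name a specific XAI method, and black-box models typically need approximation techniques such as SHAP or LIME instead.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical, hand-set model parameters for illustration only.
WEIGHTS: Dict[str, float] = {"amount_zscore": 1.2, "new_device": 0.8, "foreign_ip": 1.5}
BIAS = -3.0


def score_with_explanation(features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return a fraud probability plus per-feature log-odds contributions, largest first."""
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())  # contributions add up exactly to the score
    probability = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


if __name__ == "__main__":
    p, reasons = score_with_explanation({"amount_zscore": 2.0, "new_device": 1.0, "foreign_ip": 1.0})
    print(f"fraud probability: {p:.3f}")
    for name, contribution in reasons:
        print(f"  {name}: {contribution:+.2f} log-odds")
```

A ranked list of reasons like this is the kind of artifact an auditor or customer-facing analyst can inspect for each decision.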

Industrial enterprises use AI-driven analytics for predictive maintenance, real-time process control, and supply chain optimization. Containerized machine learning models running on Kubernetes clusters ingest IoT sensor data and predict equipment failure before downtime occurs. Event-driven architectures allow these insights to trigger automated responses in factory systems, improving operational efficiency and reducing costs [9].

Retailers and e-commerce platforms deploy AI-powered recommendation engines and forecasting models via AIaaS platforms to enhance customer experience and optimize inventory management. These applications rely on real-time data streaming, NLP-based customer insights, and scalable backends that adjust dynamically to seasonal demand. Data lakehouse architectures support large-scale data integration from various sources, including point-of-sale systems, clickstreams, and CRM tools.
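As a deliberately simple illustration of demand forecasting feeding inventory decisions, the sketch below uses simple exponential smoothing, a classical baseline rather than the models a cloud AIaaS platform would provide. The smoothing factor, lead time, safety stock, and the `reorder_quantity` rule are all hypothetical.

```python
import math
from typing import Sequence


def exponential_smoothing(demand: Sequence[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast: level = alpha * observation + (1 - alpha) * previous level."""
    if not demand:
        raise ValueError("demand history must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = float(demand[0])
    for observation in demand[1:]:
        level = alpha * observation + (1.0 - alpha) * level
    return level


def reorder_quantity(daily_forecast: float, lead_time_days: int, on_hand: int, safety_stock: int) -> int:
    """Hypothetical reorder rule: cover forecast demand over the lead time plus a buffer."""
    needed = daily_forecast * lead_time_days + safety_stock - on_hand
    return max(0, math.ceil(needed))


if __name__ == "__main__":
    daily_sales = [100, 120, 110, 130]
    forecast = exponential_smoothing(daily_sales, alpha=0.5)
    print("forecast per day:", forecast)  # 120.0
    print("units to order:", reorder_quantity(forecast, lead_time_days=3, on_hand=200, safety_stock=50))  # 210
```

A higher `alpha` reacts faster to recent demand, which matters for the seasonal swings the text mentions; richer models would add trend and seasonality terms.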

Government agencies and public institutions adopt cloud-based AI to deliver citizen services more efficiently, automate decision processes, and enhance digital governance. Intelligent chatbots, automated permit approvals, and AI-supported resource allocation are deployed through secure, compliant cloud platforms. These implementations often require strong ethical AI and zero-trust models to ensure accountability, fairness, and security [12, 7].
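One ingredient of a zero-trust model is that every service-to-service request is authenticated, checked for freshness and integrity, and authorized against a narrow scope, regardless of network location. The sketch below shows that ingredient with HMAC-signed requests from Python's standard `hmac` module. The service name, secrets, scopes, and message layout are hypothetical; real deployments would typically rely on mutual TLS, managed identities, and a policy engine.

```python
import hashlib
import hmac
import time
from typing import Dict, Optional, Set

# Hypothetical per-service secrets and scopes; in practice these would come from a
# managed secret store and a policy engine, never from source code.
SERVICE_SECRETS: Dict[str, bytes] = {"chatbot-frontend": b"demo-secret-not-for-production"}
SERVICE_SCOPES: Dict[str, Set[str]] = {"chatbot-frontend": {"permits.read"}}


def sign(service_id: str, body: bytes, timestamp: int) -> str:
    """Caller side: sign identity, time, and payload together."""
    message = f"{service_id}|{timestamp}|".encode() + body
    return hmac.new(SERVICE_SECRETS[service_id], message, hashlib.sha256).hexdigest()


def authorize(service_id: str, scope: str, body: bytes, timestamp: int, signature: str,
              now: Optional[float] = None, max_skew_s: int = 300) -> bool:
    """Callee side: verify identity, freshness, integrity, and least-privilege scope on every call."""
    if service_id not in SERVICE_SECRETS:
        return False  # unknown caller: no implicit trust, even inside the network
    current = time.time() if now is None else now
    if abs(current - timestamp) > max_skew_s:
        return False  # stale request: narrows the replay window
    expected = sign(service_id, body, timestamp)
    if not hmac.compare_digest(expected, signature):
        return False  # tampered payload or wrong key
    return scope in SERVICE_SCOPES.get(service_id, set())


if __name__ == "__main__":
    ts = int(time.time())
    body = b'{"permit_id": 42}'
    sig = sign("chatbot-frontend", body, ts)
    print(authorize("chatbot-frontend", "permits.read", body, ts, sig))     # True
    print(authorize("chatbot-frontend", "permits.approve", body, ts, sig))  # False: scope not granted
```

`hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many characters of the signature matched.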

Recent advancements in generative AI and autonomous agents have opened new frontiers in enterprise automation. Large language models (LLMs) are being used to generate reports, draft contracts, analyze legal documents, and summarize large volumes of unstructured data. These tools, when hosted via AI-as-a-Service (AIaaS) platforms, allow businesses to enhance knowledge work without the need for domain-specific AI development [1, 20].

Additionally, autonomous AI agents are being integrated into enterprise resource planning (ERP), human capital management (HCM), and business intelligence platforms. These agents can plan, reason, and execute multi-step tasks such as onboarding workflows, financial reconciliation, or inventory audits with minimal human oversight.

These emerging applications highlight the expanding role of cloud-based AI in domains traditionally reliant on manual expertise and offer promising directions for future enterprise transformation.

Table 2 highlights how cloud-based AI is being applied across different industries, along with the enabling technologies that support these use cases. This matrix illustrates the versatility and broad impact of AI in real-world enterprise settings.

| Industry | Applications | Enabling Technologies |
|---|---|---|
| Healthcare | Intelligent diagnostics, remote monitoring, personalized care | AIaaS, FL, Edge AI |
| Finance | Fraud detection, credit risk modeling, real-time alerts | Serverless AI, XAI, microservices |
| Manufacturing | Predictive maintenance, process automation, anomaly detection | Containerization, EDA, ML pipelines |
| Retail | Recommendation engines, inventory optimization, sentiment analysis | AIaaS, Data Lakehouse, NLP |
| Public Sector | Citizen services, decision automation, chatbot integration | Ethical AI, zero-trust models, cloud APIs |

While cloud-based AI presents immense potential for enterprise modernization, its adoption is accompanied by several challenges that must be carefully managed. At the same time, new opportunities continue to emerge, offering pathways for innovation, resilience, and competitive differentiation.

Data Privacy and Security: The movement of sensitive enterprise and customer data to the cloud raises concerns around data breaches, unauthorized access, and compliance with regulations such as GDPR and HIPAA. Federated learning and encryption-at-rest are promising but not yet ubiquitous [10, 7].

Latency and Real-Time Processing: Mission-critical applications in healthcare, finance, and manufacturing often require ultra-low latency AI inference, which can be hindered by network congestion or remote cloud dependencies. Edge computing and hybrid architectures are evolving to mitigate these concerns [6].

Cost and Resource Optimization: While cloud solutions promise scalability, uncontrolled usage or lack of workload optimization can result in escalating costs. Organizations must implement monitoring, automation, and right-sizing strategies to remain cost-effective [3].

Explainability and Trust: AI decisions must be explainable to meet regulatory requirements and gain user trust. Lack of transparency in black-box models can hinder adoption in sectors like finance and government [11].

Vendor Lock-in and Interoperability: Reliance on proprietary tools and services can restrict flexibility and future migration options. Multi-cloud and open-source solutions offer alternatives, but often require greater integration efforts.

AI Model Drift and Lifecycle Maintenance: Cloud-based AI models must be continuously retrained to reflect changing user behavior, regulations, and data distributions. Without proper monitoring and MLOps integration, model performance may degrade rapidly.

Observability and Governance Gaps: While cloud platforms offer scalability, they often lack unified tools for AI observability—making it difficult to track model lineage, explainability, and auditability over time [21].

Workload Placement and Compliance Complexity: Determining where an AI workload should run (e.g., public cloud, private cloud, or edge) based on cost, latency, and regulatory constraints is a non-trivial challenge in hybrid and multi-cloud setups.
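For the model drift challenge described above, a common lightweight monitoring signal is the Population Stability Index (PSI), which compares the binned distribution of a feature or score at training time with its distribution in production: PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the expected and actual fractions in bin i. The sketch below assumes fixed bin edges and uses the widely quoted rule-of-thumb thresholds (0.1 and 0.25), which are conventions rather than standards; the function names are illustrative.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin defined by sorted edges (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        index = sum(v >= e for e in edges)  # number of edges at or below v
        counts[index] += 1
    return [c / len(values) for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI between a baseline (training) sample and a current (production) sample."""
    psi = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    """Rule-of-thumb interpretation; thresholds should be tuned per model."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"


if __name__ == "__main__":
    baseline = list(range(100))                # e.g. a feature at training time
    production = [v + 30 for v in baseline]    # the same feature after a shift
    psi = population_stability_index(baseline, production, edges=[25, 50, 75])
    print(f"PSI = {psi:.3f} -> {drift_status(psi)}")
```

In an MLOps pipeline, a check like this would run on a schedule and trigger investigation or retraining when the status leaves "stable".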

Generative AI and Autonomous Agents: With the rise of large language models and autonomous agents, enterprises can automate complex knowledge work—from summarizing reports to automating workflows—thereby increasing productivity and reducing manual effort [1].

AI Governance and Ethical Frameworks: The development of standardized governance models offers enterprises the ability to deploy AI responsibly. Initiatives in ethical prompt engineering and explainable AI (XAI) help embed fairness and compliance into systems by design [12].

Cross-Industry Collaboration: Cross-sector innovation is emerging as a driver of cloud-based AI maturity, with shared platforms, open datasets, and federated ecosystems allowing enterprises to co-develop AI models without compromising privacy.

Cloud-Native Tooling and MLOps: The rise of MLOps platforms for versioning, monitoring, and continuous integration of AI models is making cloud-based AI deployment more streamlined, reproducible, and scalable [5, 22].

AI-Driven Organizational Intelligence: Cloud-based AI enables real-time enterprise insights by integrating structured and unstructured data across departments, thereby augmenting human decision-making at scale.

Composable AI Services: Cloud-native platforms increasingly support plug-and-play AI modules that enterprises can rapidly compose into solutions—speeding up experimentation and reducing development overhead.
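The MLOps opportunity noted above centers on versioning, lineage, and controlled promotion of models. The in-memory sketch below shows that bookkeeping: each registered version records an artifact location, a SHA-256 fingerprint of its training data for lineage, and its evaluation metrics; promotion to production is gated on a metric, and the previous production version is kept for rollback. `ModelRegistry`, its methods, and the artifact paths are hypothetical and do not reproduce the API of MLflow or any cloud model registry.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str              # where the trained model is stored (hypothetical path)
    training_data_sha256: str      # lineage: fingerprint of the exact training data
    metrics: Dict[str, float]


@dataclass
class ModelRegistry:
    name: str
    versions: List[ModelVersion] = field(default_factory=list)
    production: Optional[int] = None
    history: List[int] = field(default_factory=list)  # previous production versions

    def register(self, artifact_uri: str, training_data: bytes, metrics: Dict[str, float]) -> ModelVersion:
        """Record a new immutable version with its lineage and evaluation metrics."""
        mv = ModelVersion(len(self.versions) + 1, artifact_uri,
                          hashlib.sha256(training_data).hexdigest(), dict(metrics))
        self.versions.append(mv)
        return mv

    def promote_if_better(self, version: int, metric: str = "auc") -> bool:
        """Gate promotion: the candidate must beat the current production model."""
        candidate = self.versions[version - 1]
        if self.production is not None:
            current = self.versions[self.production - 1]
            if candidate.metrics[metric] <= current.metrics[metric]:
                return False
            self.history.append(self.production)
        self.production = version
        return True

    def rollback(self) -> Optional[int]:
        """Restore the previous production version, if there is one."""
        if self.history:
            self.production = self.history.pop()
        return self.production


if __name__ == "__main__":
    registry = ModelRegistry("fraud-scorer")
    v1 = registry.register("models/fraud/v1", b"training snapshot 1", {"auc": 0.81})
    v2 = registry.register("models/fraud/v2", b"training snapshot 2", {"auc": 0.79})
    v3 = registry.register("models/fraud/v3", b"training snapshot 3", {"auc": 0.85})
    print([registry.promote_if_better(v.version) for v in (v1, v2, v3)])  # [True, False, True]
    print("production:", registry.production, "after rollback:", registry.rollback())
```

Keeping versions immutable and tying each one to a data fingerprint is what makes later audits and reproductions possible.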

Enterprises that proactively address these challenges while embracing emerging opportunities are well-positioned to build intelligent, resilient, and ethical AI ecosystems. Strategic investment in responsible AI, combined with robust cloud-native infrastructure, is key to unlocking long-term digital transformation.

This study has explored how cloud-based AI solutions are enabling scalable and intelligent enterprise modernization. By examining core technologies such as AI-as-a-Service (AIaaS), containerization, serverless computing, and federated learning, and analyzing cloud-native architectural frameworks—including microservices, data lakehouses, and zero-trust models—we have presented a comprehensive view of the digital transformation landscape.

Real-world use cases across healthcare, finance, manufacturing, retail, and the public sector demonstrate the widespread applicability and benefits of cloud-based AI. These include real-time insights, improved automation, enhanced personalization, and operational efficiency. However, as highlighted in this study, enterprises must also navigate challenges related to privacy, cost, latency, and trust.

To remain competitive, enterprises should adopt a proactive approach that integrates cloud-native infrastructure, ethical AI governance, and robust deployment pipelines (e.g., MLOps). The path forward involves not only technological adaptation but also cultural and organizational change to support AI-driven decision-making and innovation.

As cloud-based AI continues to evolve, future research and enterprise strategies may focus on:

Federated and Decentralized AI: Advancing techniques that allow secure and collaborative AI development across data silos and geographies without compromising privacy.

Autonomous Enterprise Systems: Enabling end-to-end business automation using AI agents and generative models that can reason, plan, and execute tasks.

AI Model Lifecycle Governance: Building frameworks for continuous monitoring, validation, and auditing of AI models to ensure reliability and fairness over time.

Quantum-AI Convergence: Exploring the role of quantum computing in accelerating AI model training and optimization within cloud ecosystems. Future-ready AI architectures must integrate both classical high-performance techniques and emerging quantum paradigms [23].

Sustainability and Green AI: Designing cloud-based AI architectures that are energy-efficient and aligned with sustainability goals.

Ultimately, cloud-based AI stands at the forefront of enterprise innovation. As the technology matures, its successful adoption will depend on balancing performance, scalability, and responsibility—ensuring that intelligent systems are not only powerful, but also ethical, secure, and future-ready.

Copyright © 2025 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.


Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/