Abstract

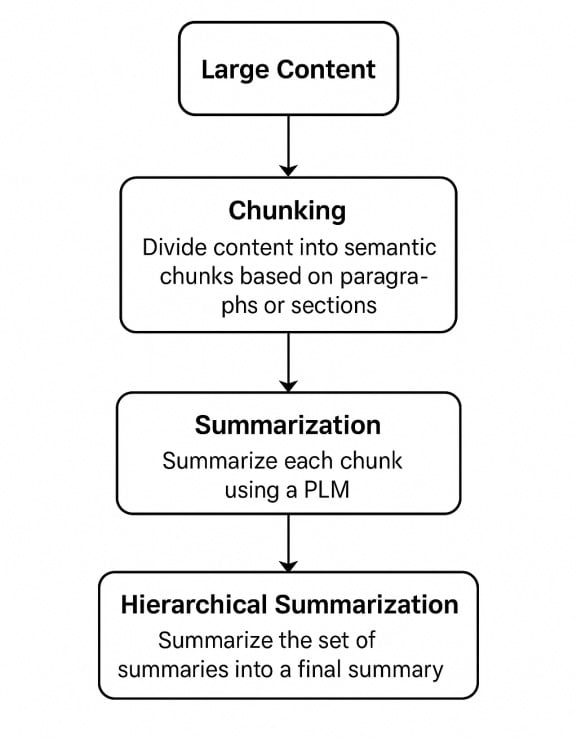

Today, LLMs can handle almost any text-related task, be it summarization, abstraction, translation, or transformation. However, these operations are not always possible on extremely large content. Even with the large output token limits of recently launched LLMs, performing such operations is not always economically or technically feasible. To address this problem, this paper explores a chunk-based approach to summarizing extensive content that is both efficient and economical. The approach also accounts for the information loss that chunking can cause and addresses it efficiently. Such a framework is in high demand across the enterprise software, healthcare, and financial industries, where large bodies of content that are challenging to maintain and query must be stored, summarized, and queried. To keep the framework generic, the approach relies mainly on zero-shot summarization.

Data Availability Statement

Data will be made available on request.

Funding

This work received no funding.

Conflicts of Interest

Avishto Banerjee is an employee of SAP Labs India Pvt. Ltd., Bengaluru 560066, India. The author declares no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Banerjee, A. (2025). Towards Economical Long-Form Summarization: A Chunk-Based Approach Using LLMs. ICCK Transactions on Large Language Models, 1(1), 4–8. https://doi.org/10.62762/TLLM.2025.674475

Publisher's Note

ICCK stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and Permissions

Institute of Central Computation and Knowledge (ICCK) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
