ICCK Transactions on Sensing, Communication, and Control

ISSN: 3068-9287 (Online) | ISSN: 3068-9279 (Print)


Submit Manuscript

Edit a Special Issue

Submit Manuscript

Edit a Special Issue

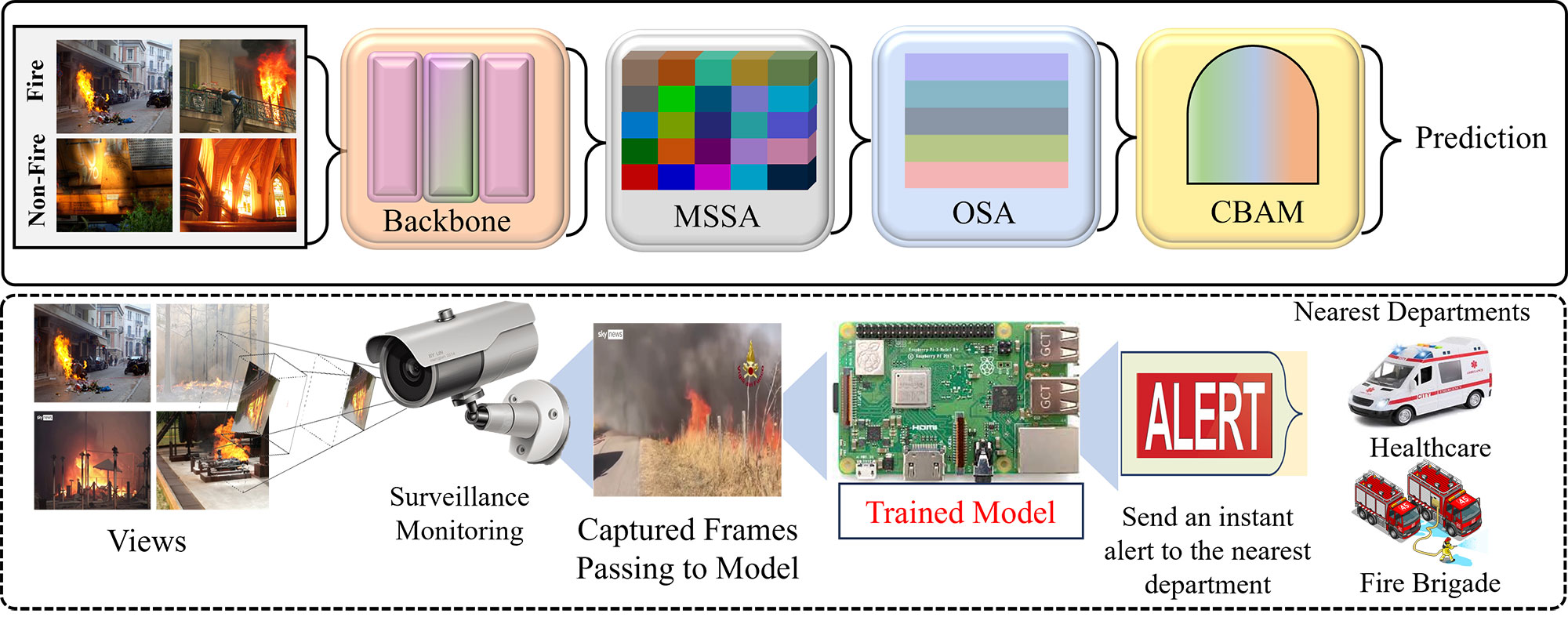

TY  - JOUR
AU  - Khan, Ikram Majeed
AU  - Zahoor, Faryal
PY  - 2026
DA  - 2026/02/13
TI  - Intelligent Fire Recognition for Surveillance Control Using Cascaded Multi-Scale Attention Framework
JO  - ICCK Transactions on Sensing, Communication, and Control
T2  - ICCK Transactions on Sensing, Communication, and Control
JF  - ICCK Transactions on Sensing, Communication, and Control
VL  - 3
IS  - 1
SP  - 15
EP  - 26
DO  - 10.62762/TSCC.2025.862776
UR  - https://www.icck.org/article/abs/TSCC.2025.862776
KW  - fire detection
KW  - strip pooling
KW  - surveillance monitoring
KW  - intelligent sensing
KW  - safety control
KW  - visual recognition
AB  - Fire incidents cause devastating environmental damage and human casualties, necessitating robust automated detection systems. Existing fire recognition methods struggle with visual ambiguities, illumination variations, and computational constraints, while current attention mechanisms lack hierarchical integration for comprehensive feature refinement. We propose a cascaded multi-attention architecture that combines Multi-Scale Strip Attention (MSSA), Optimized Spatial Attention (OSA), and the Convolutional Block Attention Module (CBAM) to enhance fire detection. MSSA employs three-scale orthogonal strip pooling to capture fire patterns across varying spatial extents through horizontal and vertical feature decomposition. OSA employs optimized convolutions rather than conventional kernels, reducing computational cost while maintaining spatial localization accuracy. CBAM applies sequential channel- and spatial-level attention for comprehensive feature recalibration. Operating on the EfficientNetB7 backbone, the cascaded design progressively refines representations through complementary mechanisms. Systematic ablation studies validate individual module contributions, while Grad-CAM visualizations confirm precise localization of fire regions. Extensive experiments on the FD and BoWFire datasets demonstrate superior performance compared to state-of-the-art approaches.
SN  - 3068-9287
PB  - Institute of Central Computation and Knowledge
LA  - English
ER  - 
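The abstract states that MSSA uses three-scale orthogonal strip pooling, decomposing features into horizontal and vertical strips. The paper's exact layer definitions are not given here, so the NumPy sketch below only illustrates orthogonal strip pooling under explicit assumptions: the "three scales" are taken to be hypothetical row/column group sizes of 1, 2 and 4, the learned convolutions a trained module would apply to each strip are omitted, and the fused profiles are squashed with a sigmoid into a gate over a single (C, H, W) feature map. The function names `strip_profiles` and `multi_scale_strip_attention` are illustrative, not the authors' code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def strip_profiles(x, scale):
    """Horizontal and vertical strip-pooled profiles of x (C, H, W) at one scale.

    Assumption (hypothetical): at scale s, groups of s adjacent rows (or columns)
    share one averaged value, which is repeated back to full length.
    H and W must be divisible by s.
    """
    c, h, w = x.shape
    rows = x.mean(axis=2)  # horizontal strips: average over width  -> (C, H)
    cols = x.mean(axis=1)  # vertical strips:   average over height -> (C, W)
    rows = rows.reshape(c, h // scale, scale).mean(axis=2).repeat(scale, axis=1)
    cols = cols.reshape(c, w // scale, scale).mean(axis=2).repeat(scale, axis=1)
    return rows, cols


def multi_scale_strip_attention(x, scales=(1, 2, 4)):
    """Gate x with a sigmoid map built from multi-scale strip profiles.

    Hypothetical, parameter-free stand-in for MSSA: no learned weights are used.
    """
    x = np.asarray(x, dtype=float)
    logits = np.zeros_like(x)
    for s in scales:
        rows, cols = strip_profiles(x, s)
        # (C, H, 1) + (C, 1, W) -> (C, H, W): each position receives the
        # averaged context of its whole row and its whole column.
        logits += rows[:, :, None] + cols[:, None, :]
    attention = sigmoid(logits / len(scales))  # values in (0, 1)
    return x * attention, attention


# Usage on a random feature map with C=8, H=16, W=16.
feats = np.random.default_rng(0).standard_normal((8, 16, 16))
refined, gate = multi_scale_strip_attention(feats)
```

Averaging along whole rows and columns lets each position draw on context spanning the full width or height of the map while storing only C·(H + W) values per scale; how the paper actually weights and fuses its three scales should be taken from the full text.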

@article{Khan2026Intelligen,

author = {Ikram Majeed Khan and Faryal Zahoor},

title = {Intelligent Fire Recognition for Surveillance Control Using Cascaded Multi-Scale Attention Framework},

journal = {ICCK Transactions on Sensing, Communication, and Control},

year = {2026},

volume = {3},

number = {1},

pages = {15--26},

doi = {10.62762/TSCC.2025.862776},

url = {https://www.icck.org/article/abs/TSCC.2025.862776},

abstract = {Fire incidents cause devastating environmental damage and human casualties, necessitating robust automated detection systems. Existing fire recognition methods struggle with visual ambiguities, illumination variations, and computational constraints, while current attention mechanisms lack hierarchical integration for comprehensive feature refinement. We propose a cascaded multi-attention architecture that combines Multi-Scale Strip Attention (MSSA), Optimized Spatial Attention (OSA), and the Convolutional Block Attention Module (CBAM) to enhance fire detection. MSSA employs three-scale orthogonal strip pooling to capture fire patterns across varying spatial extents through horizontal and vertical feature decomposition. OSA employs optimized convolutions rather than conventional kernels, reducing computational cost while maintaining spatial localization accuracy. CBAM applies sequential channel- and spatial-level attention for comprehensive feature recalibration. Operating on the EfficientNetB7 backbone, the cascaded design progressively refines representations through complementary mechanisms. Systematic ablation studies validate individual module contributions, while Grad-CAM visualizations confirm precise localization of fire regions. Extensive experiments on the FD and BoWFire datasets demonstrate superior performance compared to state-of-the-art approaches.},

keywords = {fire detection, strip pooling, surveillance monitoring, intelligent sensing, safety control, visual recognition},

issn = {3068-9287},

publisher = {Institute of Central Computation and Knowledge}

}
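CBAM, the last stage of the cascade described in the abstract, applies channel attention followed by spatial attention. The NumPy sketch below shows that general two-step recalibration for a single (C, H, W) feature map. It assumes a bias-free shared MLP with hypothetical weights `w1` and `w2` (reduction ratio 4) and a 7x7 spatial kernel, and it uses random values in place of trained parameters; it illustrates the standard CBAM pattern, not the authors' trained module.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """CBAM channel step: shared MLP over average- and max-pooled descriptors.

    x: (C, H, W); w1: (C // r, C); w2: (C, C // r). Biases omitted for brevity.
    """
    avg = x.mean(axis=(1, 2))  # (C,)
    mx = x.max(axis=(1, 2))    # (C,)

    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # one ReLU hidden layer

    return sigmoid(mlp(avg) + mlp(mx))  # (C,) per-channel weights in (0, 1)


def conv2d_same(inp, kernel):
    """Zero-padded 'same' cross-correlation of inp (2, H, W) with kernel (2, k, k), k odd."""
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(inp, ((0, 0), (pad, pad), (pad, pad)))
    h, w = inp.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return out


def spatial_attention(x, kernel):
    """CBAM spatial step: pool across channels, convolve, squash to an (H, W) map."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    return sigmoid(conv2d_same(pooled, kernel))


def cbam(x, w1, w2, kernel):
    """Channel attention first, then spatial attention on the reweighted map."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]


# Usage with random placeholder weights (C = 16, reduction r = 4, 7x7 kernel).
rng = np.random.default_rng(0)
feats = rng.standard_normal((16, 14, 14))
w1 = rng.standard_normal((4, 16)) * 0.1
w2 = rng.standard_normal((16, 4)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
refined = cbam(feats, w1, w2, kernel)
```

Running the channel step before the spatial step follows the sequential order the abstract describes; in the actual network both steps would operate on batched EfficientNetB7 feature maps with learned weights.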

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/