ICCK Transactions on Sensing, Communication, and Control | Volume 2, Issue 4: 276-289, 2025 | DOI: 10.62762/TSCC.2025.308540

Abstract

Video summarization (VS) aims to generate concise representations of long videos by extracting the most informative frames while maintaining essential content. Existing methods struggle to capture multi-scale dependencies and often rely on suboptimal feature representations, limiting their ability to model complex inter-frame relationships. To address these issues, we propose a multi-scale sensing network that incorporates three key innovations to improve VS. First, we introduce multi-scale dilated convolution blocks with progressively increasing dilation rates to capture temporal context at multiple levels, enabling the network to understand both local transitions and long-range dependencie...
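To illustrate why progressively increasing dilation rates widen temporal context, the following is a minimal sketch in plain Python. It is not the network described in the paper: the kernel size (3), the dilation rates (1, 2, 4, 8), the sequential stacking of layers, and the function names `dilated_conv1d`, `receptive_field`, and `multi_scale_stack` are all assumptions chosen for illustration, since the abstract does not specify them.

```python
from typing import List, Sequence


def dilated_conv1d(signal: Sequence[float], kernel: Sequence[float],
                   dilation: int) -> List[float]:
    """Same-length 1D dilated convolution (cross-correlation), zero padded."""
    center = len(kernel) // 2
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            # Taps are spaced `dilation` frames apart around position t.
            idx = t + (j - center) * dilation
            if 0 <= idx < len(signal):
                acc += w * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Frames seen by one output of a stack of dilated conv layers."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


def multi_scale_stack(signal: Sequence[float], kernel: Sequence[float],
                      dilations: Sequence[int]) -> List[float]:
    """Apply dilated convolutions one after another (assumed arrangement)."""
    x = list(signal)
    for d in dilations:
        x = dilated_conv1d(x, kernel, d)
    return x


if __name__ == "__main__":
    rates = [1, 2, 4, 8]  # hypothetical dilation schedule
    print("receptive field:", receptive_field(3, rates))
```

With these assumed settings, four layers of kernel size 3 cover a window that grows with the sum of the dilation rates, whereas four undilated layers would cover only 9 frames. Early layers (small dilation) respond to local transitions, and later layers (large dilation) reach distant frames without adding parameters.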

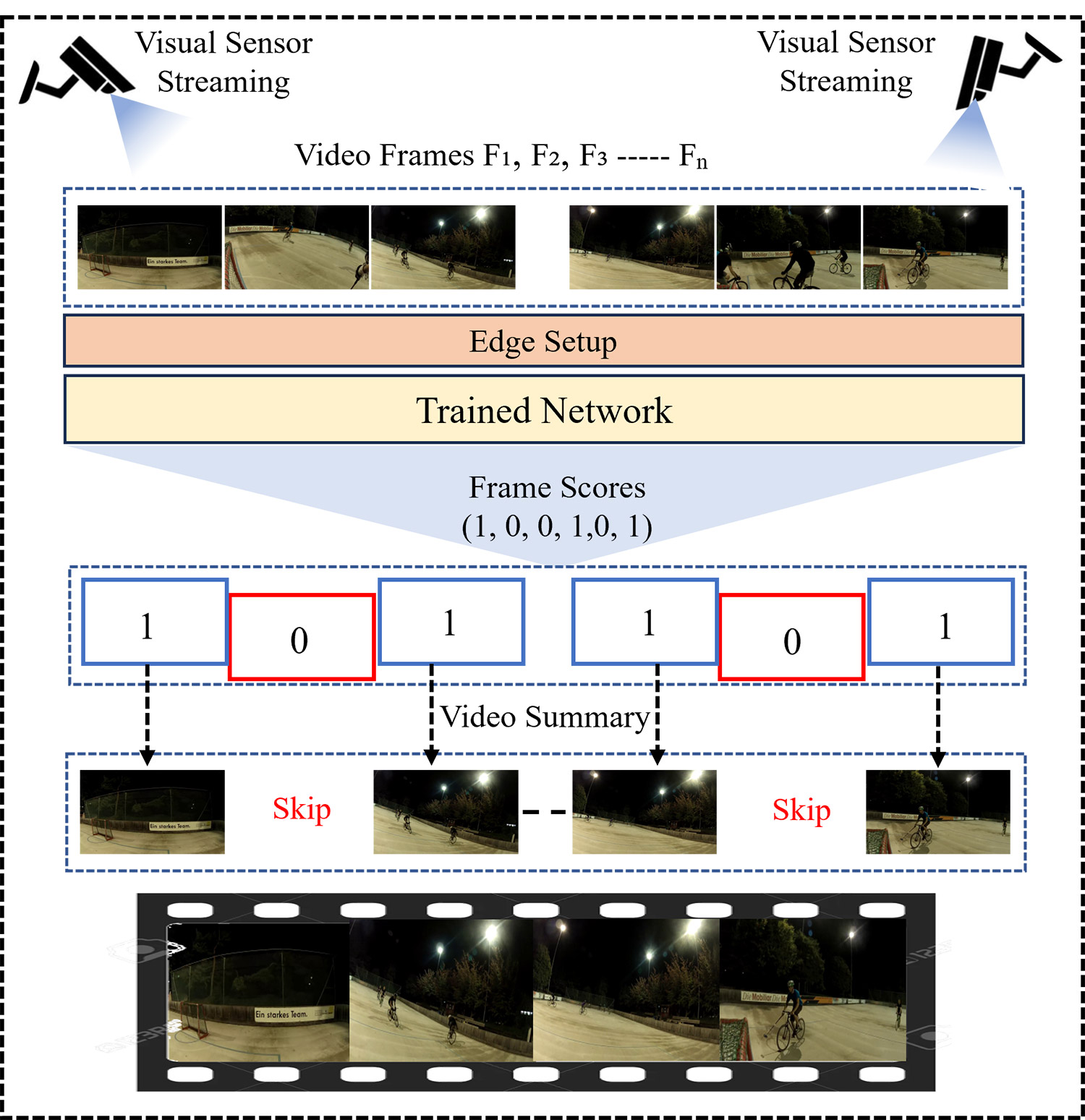

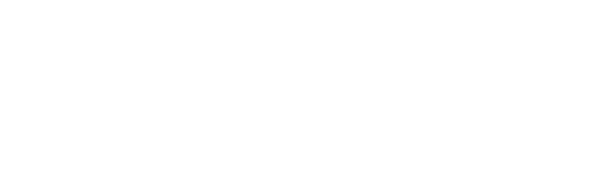

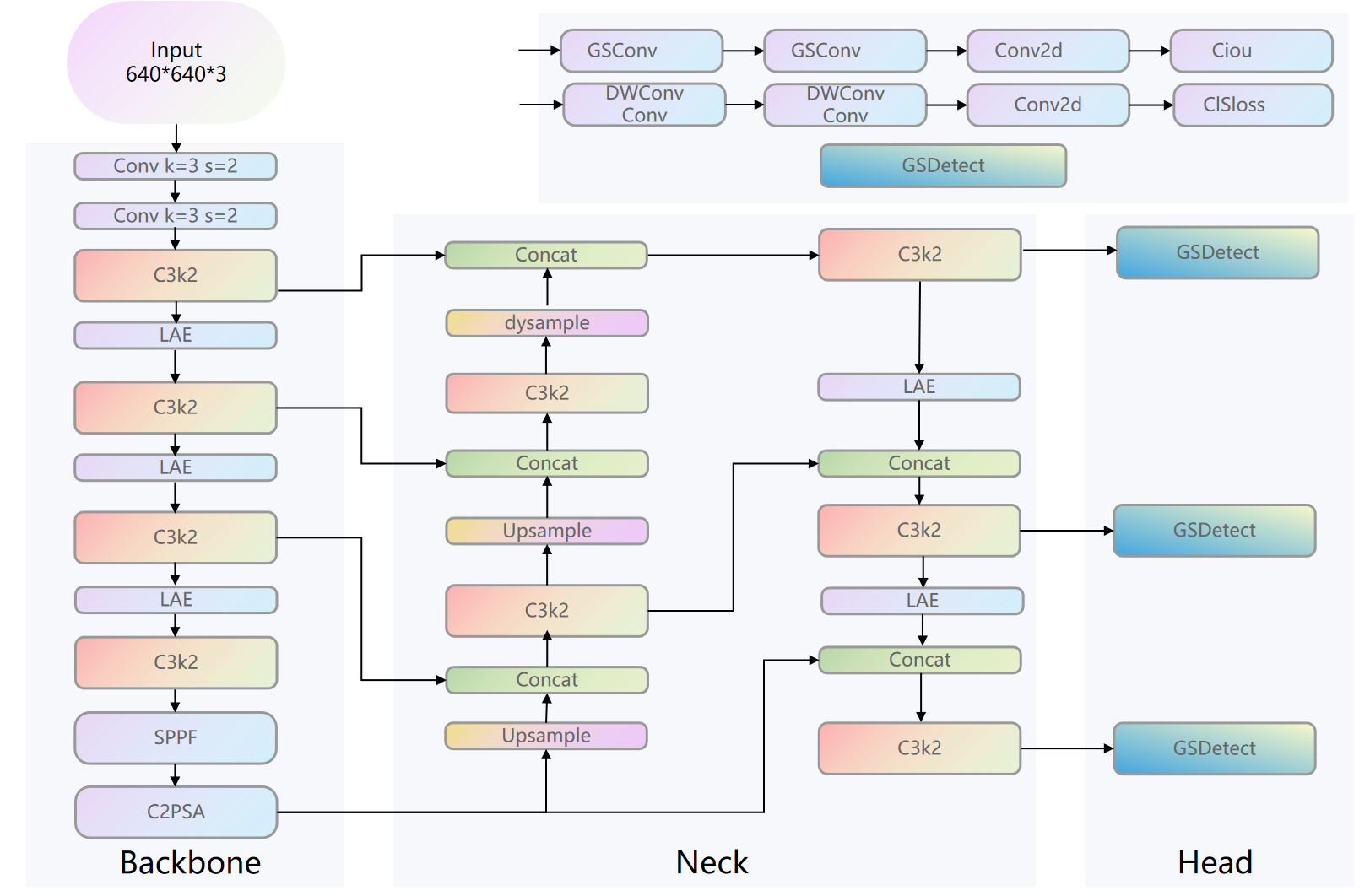

Graphical Abstract