ICCK Transactions on Sensing, Communication, and Control

ISSN: 3068-9287 (Online) | ISSN: 3068-9279 (Print)

Email: [email protected]

Submit Manuscript

Edit a Special Issue

Submit Manuscript

Edit a Special Issue

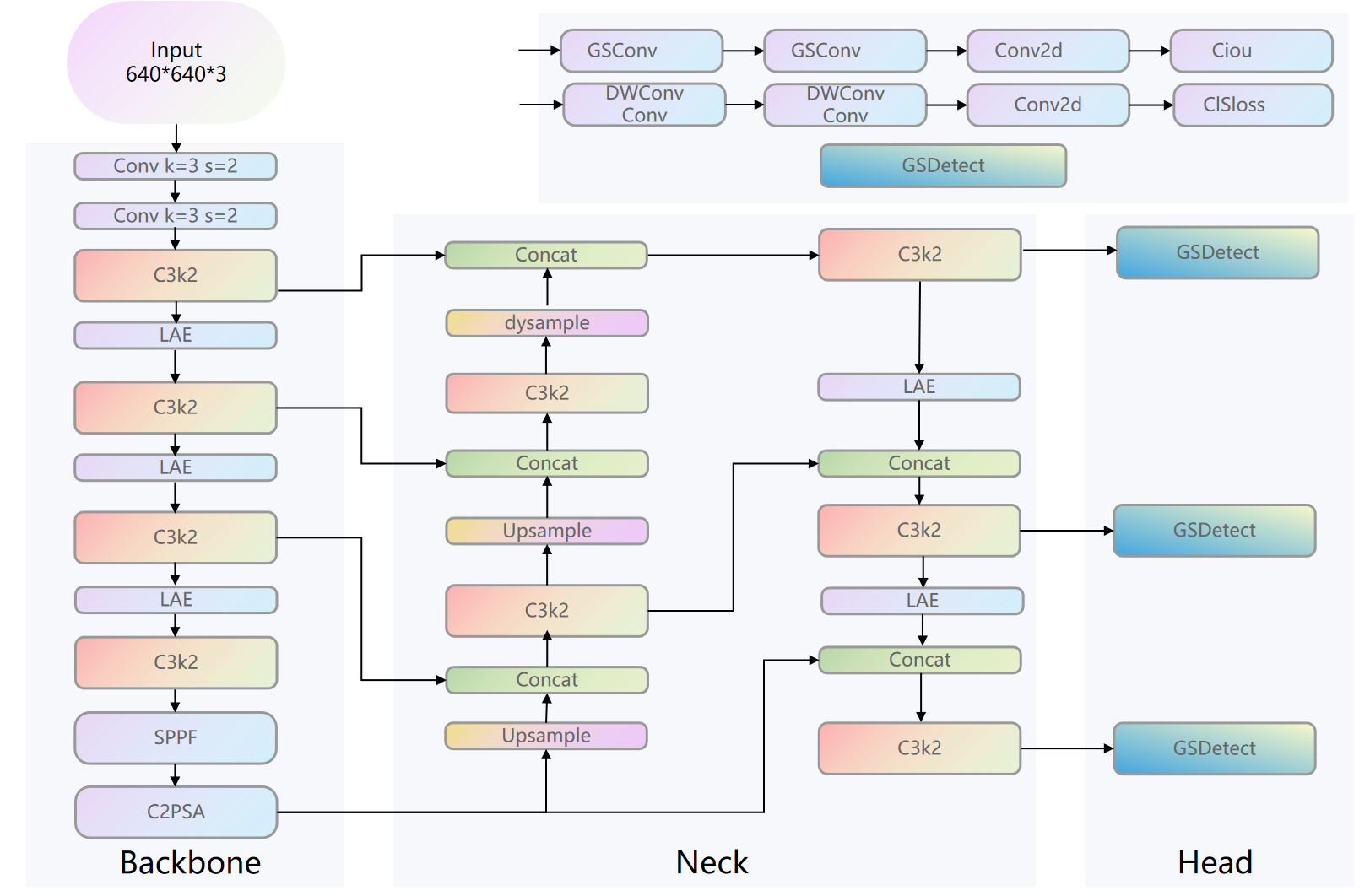

TY  - JOUR
AU  - Chang, Bo
AU  - Tang, Lichao
AU  - Hu, Chen
AU  - Zhu, Mengxiao
AU  - Dou, Huijie
AU  - Ali, Kharudin Bin
PY  - 2025
DA  - 2025/12/18
TI  - LAE-GSDetect: A Lightweight Fusion Framework for Robust Small-Face Detection in Low-Light Conditions
JO  - ICCK Transactions on Sensing, Communication, and Control
T2  - ICCK Transactions on Sensing, Communication, and Control
JF  - ICCK Transactions on Sensing, Communication, and Control
VL  - 2
IS  - 4
SP  - 250
EP  - 262
DO  - 10.62762/TSCC.2025.972040
UR  - https://www.icck.org/article/abs/TSCC.2025.972040
KW  - low-light
KW  - face detection
KW  - image enhancement
KW  - lightweight
KW  - deep learning
AB  - In response to the challenges of insufficient accuracy in face detection and missed small targets under low-light conditions, this paper proposes a detection scheme that combines image preprocessing and detection model optimization. Firstly, Zero-DCE low-light enhancement is introduced to adaptively restore image details and contrast, providing high-quality inputs for subsequent detection. Secondly, YOLOv11n is enhanced through the following improvements: a P2 small-target detection layer is added while the P5 layer is removed, addressing the original model's deficiency in detecting small targets and streamlining the computational process to balance model complexity and efficiency; the P2 upsampling is replaced with DySample dynamic upsampling, which adaptively adjusts the sampling strategy based on features to improve the accuracy of feature fusion; a lightweight adaptive extraction module (LAE) is incorporated to reduce the number of parameters and computational costs; finally, the detection head is replaced with GSDetect to maintain accuracy while reducing computational overhead. Experimental results show that the improved model reaches an mAP50 of 58.7%, which is a 10.2% increase compared with the original model. Although the computational complexity increases to 7.5 GFLOPs, the parameter count is reduced by 45%, offering a more optimal solution for face detection in low-light environments.
SN  - 3068-9287
PB  - Institute of Central Computation and Knowledge
LA  - English
ER  - 
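
The abstract credits Zero-DCE with adaptively restoring detail and contrast before detection. Zero-DCE works by repeatedly applying a pixel-wise quadratic light-enhancement curve, LE(x) = x + αx(1 − x), where x is an intensity normalized to [0, 1] and α ∈ [−1, 1]; the curve is monotonic and keeps every output inside [0, 1]. The Python sketch below assumes NumPy and replaces the learned curve-parameter maps (predicted in Zero-DCE by a small CNN) with constant, hand-picked values. The names `le_curve` and `enhance` are hypothetical, and this is an illustration of the curve, not the LAE-GSDetect authors' code.

```python
import numpy as np


def le_curve(x: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """One application of the Zero-DCE quadratic curve: x + alpha * x * (1 - x).

    x is an intensity in [0, 1]; alpha in [-1, 1] brightens (alpha > 0)
    or darkens (alpha < 0) each pixel while keeping the result in [0, 1].
    """
    return x + alpha * x * (1.0 - x)


def enhance(image: np.ndarray, alpha_maps: list[np.ndarray]) -> np.ndarray:
    """Apply the curve iteratively, one per-pixel alpha map per iteration.

    In Zero-DCE the alpha maps are predicted by a network; here the caller
    supplies them directly as a stand-in (an assumption of this sketch).
    """
    x = np.clip(image.astype(np.float64), 0.0, 1.0)
    for alpha in alpha_maps:
        x = le_curve(x, np.clip(alpha, -1.0, 1.0))
    return x


if __name__ == "__main__":
    dark = np.full((2, 2, 3), 0.1)            # uniformly dark 2x2 RGB image
    alphas = [np.full_like(dark, 0.8)] * 8    # 8 iterations, constant alpha (hypothetical)
    out = enhance(dark, alphas)
    print(out[0, 0, 0])
```

With α = 0.8, a single iteration lifts an intensity of 0.1 to 0.172, and eight iterations (the count used in the original Zero-DCE formulation) brighten the image much further without leaving [0, 1]. Because α is per pixel, a learned map can brighten shadows strongly while applying little or negative adjustment to regions that are already bright.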

@article{Chang2025LAEGSDetec,
  author = {Bo Chang and Lichao Tang and Chen Hu and Mengxiao Zhu and Huijie Dou and Kharudin Bin Ali},
  title = {LAE-GSDetect: A Lightweight Fusion Framework for Robust Small-Face Detection in Low-Light Conditions},
  journal = {ICCK Transactions on Sensing, Communication, and Control},
  year = {2025},
  volume = {2},
  number = {4},
  pages = {250--262},
  doi = {10.62762/TSCC.2025.972040},
  url = {https://www.icck.org/article/abs/TSCC.2025.972040},
  abstract = {In response to the challenges of insufficient accuracy in face detection and missed small targets under low-light conditions, this paper proposes a detection scheme that combines image preprocessing and detection model optimization. Firstly, Zero-DCE low-light enhancement is introduced to adaptively restore image details and contrast, providing high-quality inputs for subsequent detection. Secondly, YOLOv11n is enhanced through the following improvements: a P2 small-target detection layer is added while the P5 layer is removed, addressing the original model's deficiency in detecting small targets and streamlining the computational process to balance model complexity and efficiency; the P2 upsampling is replaced with DySample dynamic upsampling, which adaptively adjusts the sampling strategy based on features to improve the accuracy of feature fusion; a lightweight adaptive extraction module (LAE) is incorporated to reduce the number of parameters and computational costs; finally, the detection head is replaced with GSDetect to maintain accuracy while reducing computational overhead. Experimental results show that the improved model reaches an mAP50 of 58.7\%, which is a 10.2\% increase compared with the original model. Although the computational complexity increases to 7.5 GFLOPs, the parameter count is reduced by 45\%, offering a more optimal solution for face detection in low-light environments.},
  keywords = {low-light, face detection, image enhancement, lightweight, deep learning},
  issn = {3068-9287},
  publisher = {Institute of Central Computation and Knowledge}
}
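
The abstract's change to the detection levels (adding a P2 layer, removing P5) is easiest to see through feature-map sizes. The Python sketch below assumes the conventional YOLO pyramid in which level P_k downsamples by 2^k, and a 640×640 input; the input size and all helper names are illustrative assumptions, not details taken from the paper.

```python
# Hypothetical input resolution: 640x640 is a common YOLO training size,
# but this record does not state the size the authors used.
INPUT_SIZE = 640

# Conventional YOLO feature-pyramid levels: level P_k downsamples by 2**k.
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def grid_side(input_size: int, stride: int) -> int:
    """Cells along one side of the feature map produced at this stride."""
    return input_size // stride


def head_grids(levels: list[str]) -> dict[str, int]:
    """Map each detection level to its grid side length."""
    return {name: grid_side(INPUT_SIZE, STRIDES[name]) for name in levels}


def prediction_positions(grids: dict[str, int]) -> int:
    """Total grid cells the detection head makes predictions at."""
    return sum(side * side for side in grids.values())


def cells_spanned(object_px: int, stride: int) -> float:
    """How many grid cells an object of this width covers at a given stride."""
    return object_px / stride


baseline = head_grids(["P3", "P4", "P5"])  # stock YOLO11n detection levels
modified = head_grids(["P2", "P3", "P4"])  # P2 added, P5 removed (per the abstract)

if __name__ == "__main__":
    print("baseline:", baseline, prediction_positions(baseline))
    print("modified:", modified, prediction_positions(modified))
    # A hypothetical 16-pixel-wide face at the finest level of each head.
    print(cells_spanned(16, STRIDES["P3"]), cells_spanned(16, STRIDES["P2"]))
```

Under these assumptions the stock head predicts at 8,400 grid positions and the modified head at 33,600, and a 16-pixel face spans 4 cells across on the stride-4 P2 map versus 2 on P3. Running the head over a 160×160 map is one plausible contributor to the higher GFLOPs the abstract reports, while dropping the P5 branch is consistent with the smaller parameter count; this record does not break either figure down.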

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/