ICCK Transactions on Sensing, Communication, and Control

ISSN: 3068-9287 (Online) | ISSN: 3068-9279 (Print)

Email: [email protected]

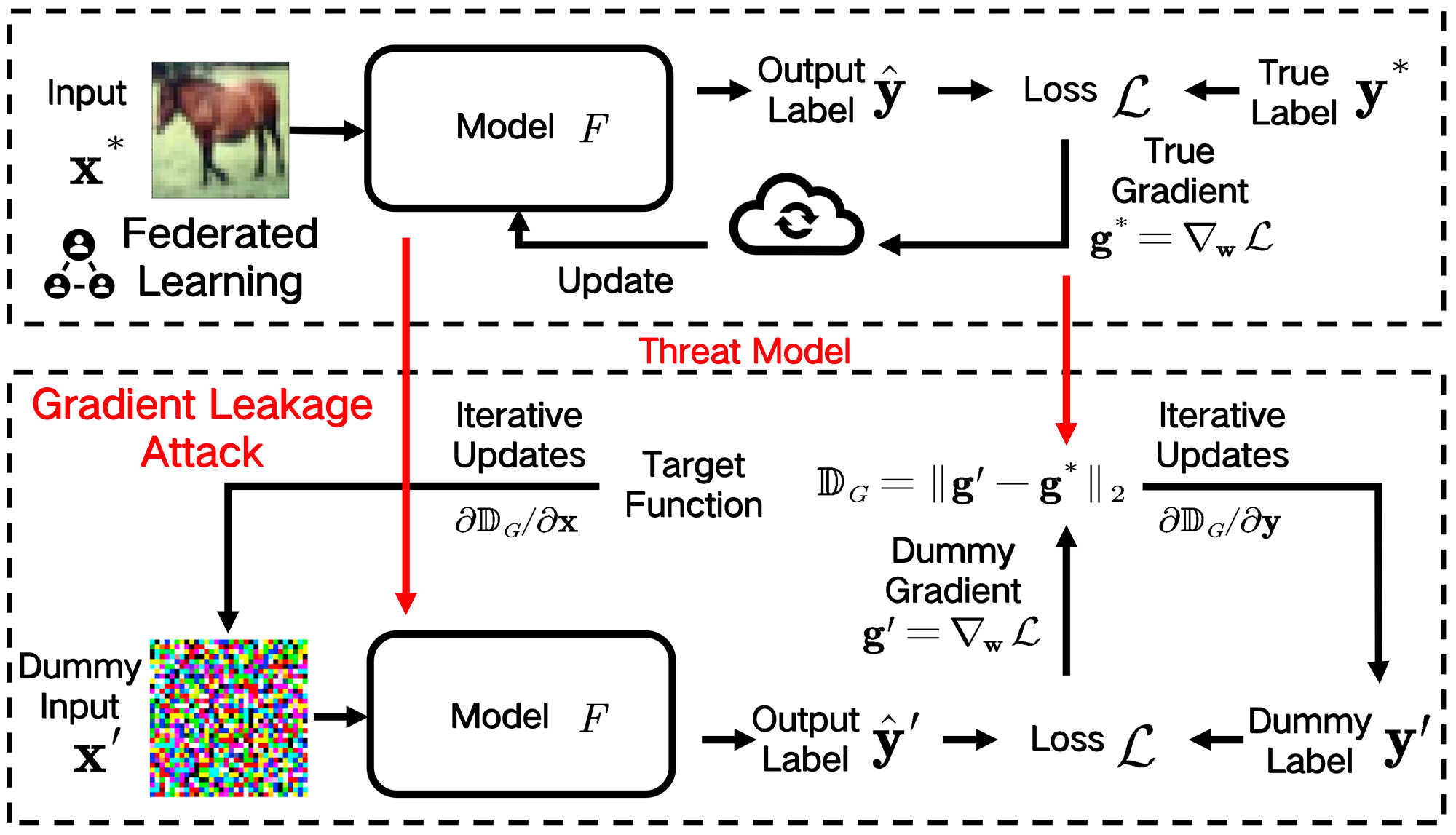

TY  - JOUR
AU  - Wang, Zhiyuan
AU  - Tian, Ming
AU  - Qiu, Yutao
AU  - Zhao, Zhixu
AU  - Zhang, Yongpo
AU  - Liu, Kun
PY  - 2025
DA  - 2025/11/28
TI  - Federated Learning Privacy Protection via Training Randomness
JO  - ICCK Transactions on Sensing, Communication, and Control
T2  - ICCK Transactions on Sensing, Communication, and Control
JF  - ICCK Transactions on Sensing, Communication, and Control
VL  - 2
IS  - 4
SP  - 226
EP  - 237
DO  - 10.62762/TSCC.2025.779613
UR  - https://www.icck.org/article/abs/TSCC.2025.779613
KW  - federated learning
KW  - privacy-preserving strategy
KW  - gradient leakage attack
KW  - dropout method
KW  - differential privacy
AB  - Federated learning is a collaborative machine learning paradigm that trains models across multiple computing nodes while aiming to preserve the privacy of local data held by participants. However, because of the open network environment, federated learning faces severe privacy and security challenges. Studies have shown that attackers can reconstruct original training data by intercepting gradients transmitted across the network, thereby posing a serious threat to user privacy. One representative attack is the Deep Leakage from Gradients (DLG), which iteratively recovers training data by optimizing dummy inputs to match the observed gradients. To address this challenge, this paper proposes a novel privacy-preserving strategy that leverages the randomness inherent in model training. Specifically, during the training process, we introduce the dropout method to selectively disconnect neuronal connections, while adding Gaussian noise to mask the gradients of the disconnected neurons. This approach effectively disrupts gradient-leakage attacks and reduces overfitting, thereby enhancing model generalization. We further provide a theoretical analysis of privacy guarantees using differential privacy metrics. Extensive experiments under federated-learning attack-defense scenarios demonstrate the effectiveness of the proposed strategy. Compared with existing defenses, our method achieves strong privacy protection against gradient leakage while maintaining competitive model accuracy, offering new insights and techniques for federated learning privacy preservation.
SN  - 3068-9287
PB  - Institute of Central Computation and Knowledge
LA  - English
ER  -

@article{Wang2025Federated,
  author = {Zhiyuan Wang and Ming Tian and Yutao Qiu and Zhixu Zhao and Yongpo Zhang and Kun Liu},
  title = {Federated Learning Privacy Protection via Training Randomness},
  journal = {ICCK Transactions on Sensing, Communication, and Control},
  year = {2025},
  volume = {2},
  number = {4},
  pages = {226-237},
  doi = {10.62762/TSCC.2025.779613},
  url = {https://www.icck.org/article/abs/TSCC.2025.779613},
  abstract = {Federated learning is a collaborative machine learning paradigm that trains models across multiple computing nodes while aiming to preserve the privacy of local data held by participants. However, because of the open network environment, federated learning faces severe privacy and security challenges. Studies have shown that attackers can reconstruct original training data by intercepting gradients transmitted across the network, thereby posing a serious threat to user privacy. One representative attack is the Deep Leakage from Gradients (DLG), which iteratively recovers training data by optimizing dummy inputs to match the observed gradients. To address this challenge, this paper proposes a novel privacy-preserving strategy that leverages the randomness inherent in model training. Specifically, during the training process, we introduce the dropout method to selectively disconnect neuronal connections, while adding Gaussian noise to mask the gradients of the disconnected neurons. This approach effectively disrupts gradient-leakage attacks and reduces overfitting, thereby enhancing model generalization. We further provide a theoretical analysis of privacy guarantees using differential privacy metrics. Extensive experiments under federated-learning attack-defense scenarios demonstrate the effectiveness of the proposed strategy. Compared with existing defenses, our method achieves strong privacy protection against gradient leakage while maintaining competitive model accuracy, offering new insights and techniques for federated learning privacy preservation.},
  keywords = {federated learning, privacy-preserving strategy, gradient leakage attack, dropout method, differential privacy},
  issn = {3068-9287},
  publisher = {Institute of Central Computation and Knowledge}
}
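
The abstract describes dropping neuronal connections and masking the gradients of the dropped neurons with Gaussian noise before they are shared. The snippet below is only a rough illustration of that idea on a flat list of gradient values, not the authors' algorithm: the function name `mask_gradients`, the per-entry dropout mask, and the parameters `drop_rate` and `noise_std` are all assumptions made for this sketch, and it carries no differential privacy guarantee by itself.

```python
import random


def mask_gradients(grads, drop_rate=0.5, noise_std=0.1, rng=None):
    """Hypothetical sketch: replace 'dropped' gradient entries with Gaussian noise.

    grads     -- list of floats standing in for one layer's gradient
    drop_rate -- assumed probability that an entry is treated as dropped
    noise_std -- assumed standard deviation of the masking noise
    """
    rng = rng or random.Random()
    # True means the entry is kept and transmitted unchanged.
    keep_mask = [rng.random() >= drop_rate for _ in grads]
    # Dropped entries are replaced by zero-mean Gaussian noise.
    masked = [
        g if keep else rng.gauss(0.0, noise_std)
        for g, keep in zip(grads, keep_mask)
    ]
    return masked, keep_mask


if __name__ == "__main__":
    grads = [1.0, -2.0, 0.5, 3.0]
    masked, keep_mask = mask_gradients(grads, rng=random.Random(0))
    print(keep_mask)
    print(masked)
```

An attacker who intercepts `masked` sees noise in the dropped positions rather than the true gradient values, which is the intuition behind disrupting gradient-matching attacks such as DLG; how the noise scale relates to a formal privacy budget is covered by the paper's own analysis, not by this sketch.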

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/