Abstract

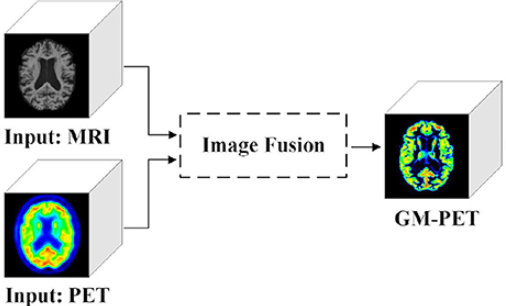

Fusion of multi-modal medical images has transformed healthcare by overcoming the limitations of single-modality imaging, since modalities such as CT, MRI, PET, and SPECT provide complementary information. This review systematically traces the evolution of multi-modal medical image fusion from conventional mathematical models to state-of-the-art artificial intelligence (AI) techniques. We examine the transition from classical approaches, such as multiscale transformations, wavelet decompositions, and sparse representation, to modern deep learning methods, including convolutional neural networks, generative adversarial networks, and transformer architectures. Key limitations of existing methods are highlighted, including limited interpretability, insufficient preservation of modality-specific details, poor cross-dataset generalization, and inadequate post-fusion refinement. The review categorizes fusion strategies into three hierarchical levels (pixel-level, feature-level, and decision-level) and analyzes their benefits and computational complexity. We provide a comparative evaluation of hybrid methods that combine classical transform-based approaches with deep learning models, which show improved preservation of anatomical structures and functional information. Major clinical applications are discussed in oncology (tumor detection and staging), neurology (brain tumor localization and surgical planning), ophthalmology (disease characterization), and orthopedics (fracture detection). Challenges to clinical adoption, such as modality misalignment, information loss, high computational requirements, and the lack of universal benchmarks, are examined alongside emerging concerns over data privacy and security in telehealth. We also analyze interpretability frameworks, modality-preservation techniques, cross-dataset generalization strategies, and post-fusion refinement methods. 
The review concludes with strategic recommendations to develop interpretable, real-time AI fusion models with robust clinical validation and standardized benchmarks to bridge the gap between theoretical advances and practical clinical integration, ultimately enhancing diagnostic accuracy and patient outcomes.

Keywords

biomedical informatics

smart healthcare

artificial intelligence in medicine

precision medicine

digital health

Data Availability Statement

Not applicable.

Funding

This work received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Barola, V. A., Singh, P., & Diwakar, M. (2025). A Recent Survey on Multi-modal Medical Image Fusion. Biomedical Informatics and Smart Healthcare, 1(3), 89–97. https://doi.org/10.62762/BISH.2025.414869

Publisher's Note

ICCK stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and Permissions

Copyright © 2025 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
