Abstract

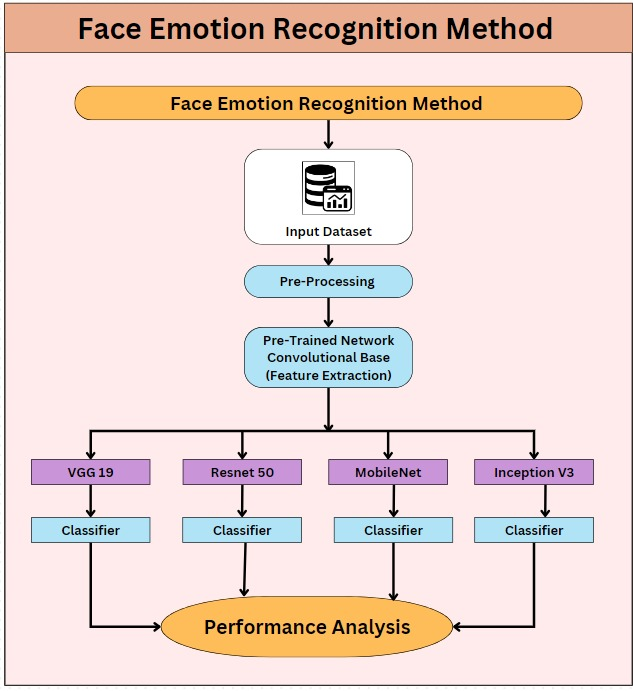

Deep learning has substantially improved facial emotion recognition, an essential element of human-computer interaction. This study evaluates the performance of multiple architectures, including a custom CNN, VGG-16, ResNet-50, and a hybrid CNN-LSTM framework, on the FER2013 and CK+ datasets. Preprocessing consisted of grayscale conversion, image resizing, and pixel normalization. Models were evaluated using precision, recall, and F1-score. Experimental results show that ResNet-50 achieved the highest accuracy on FER2013 (76.85%), while the hybrid CNN-LSTM model attained the highest accuracy on CK+ (92.30%). The findings highlight the trade-off between computational efficiency and recognition accuracy, offering insights for developing robust, real-time emotion recognition systems.
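The preprocessing steps and evaluation metrics named in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the 48 x 48 target size (the native resolution of FER2013 images), the BT.601 luma weights, nearest-neighbour resizing, scaling to [0, 1], and macro averaging are all assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np


def to_grayscale(rgb):
    """Convert an H x W x 3 image to H x W grayscale (assumed BT.601 luma weights)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights


def resize_nearest(img, size):
    """Nearest-neighbour resize of a 2-D array to size x size (assumed method)."""
    h, w = img.shape
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return img[rows[:, None], cols]


def preprocess(rgb, size=48):
    """Grayscale conversion, resizing, then pixel normalization to [0, 1]."""
    gray = to_grayscale(rgb)
    small = resize_nearest(gray, size)
    return (small / 255.0).astype(np.float32)


def macro_prf(y_true, y_pred, num_classes):
    """Macro-averaged precision, recall, and F1 over num_classes labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    precisions, recalls, f1s = [], [], []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        p = tp / (tp + fp) if (tp + fp) > 0 else 0.0
        r = tp / (tp + fn) if (tp + fn) > 0 else 0.0
        f = 2 * p * r / (p + r) if (p + r) > 0 else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    return float(np.mean(precisions)), float(np.mean(recalls)), float(np.mean(f1s))
```

In this sketch, `preprocess` maps a colour face crop of any size to a 48 x 48 float array, and `macro_prf` gives each emotion class equal weight, which matters on class-imbalanced data. The averaging scheme used in the study itself is not stated in the abstract.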

Data Availability Statement

Data will be made available on request.

Funding

This work received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Sarvakar, K., & Rana, K. (2025). A Hybrid Framework Combining CNN, LSTM, and Transfer Learning for Emotion Recognition. ICCK Transactions on Machine Intelligence, 1(2), 103–116. https://doi.org/10.62762/TMI.2025.572412

Publisher's Note

ICCK stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and Permissions

Institute of Central Computation and Knowledge (ICCK) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
