Abstract

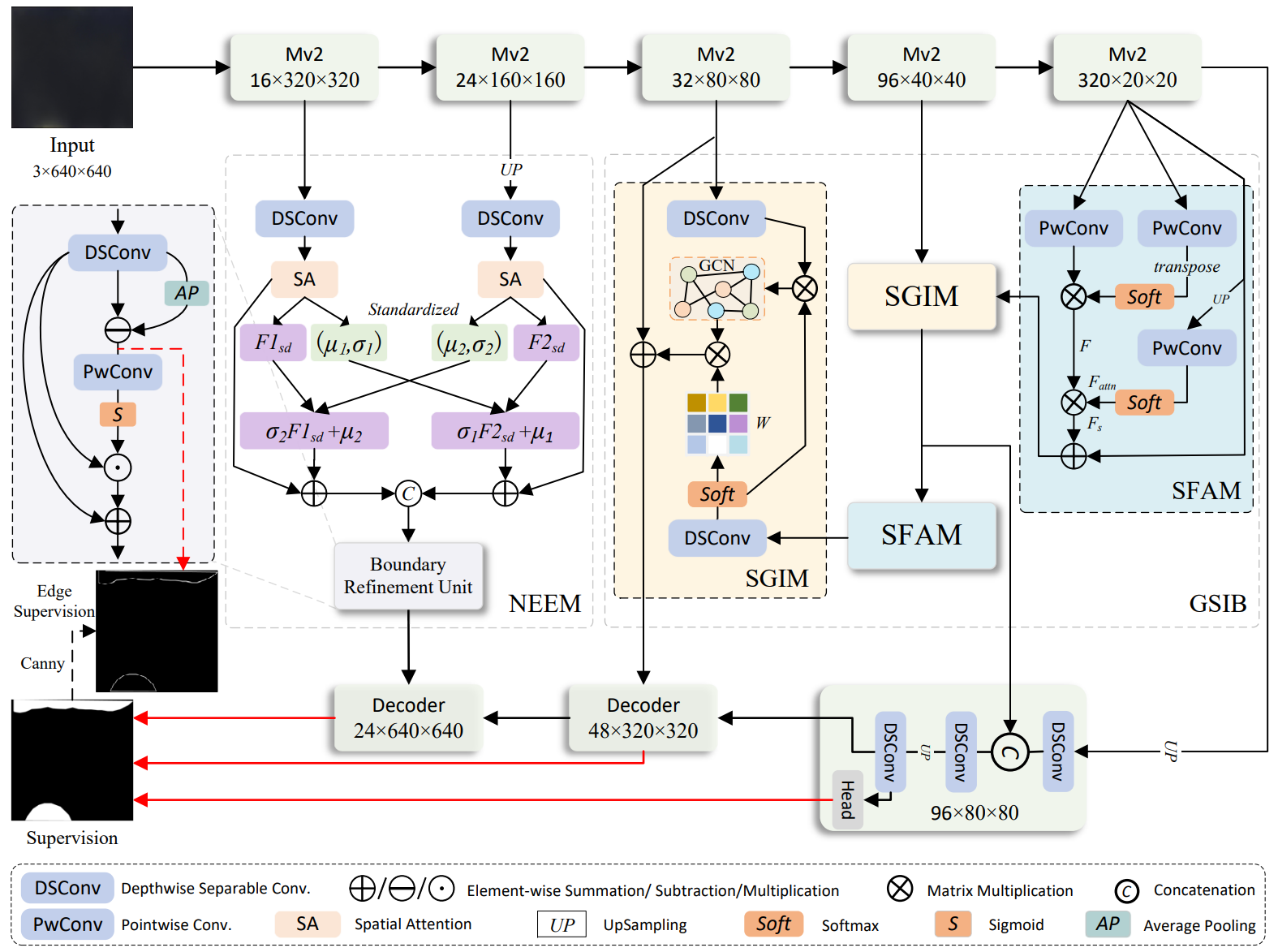

Mura defects in real industrial scenarios are typically distributed over large areas, with smooth brightness gradients and blurred boundaries, and existing methods struggle to balance accuracy and efficiency. To address these challenges, we propose SIFNet, a lightweight deep learning-based detection method for large-area Mura defects. SIFNet adopts a classical encoder-decoder architecture with MobileNet-V2 as the backbone. We design a Graph-based Semantic Interscale-fusion Block (GSIB) that integrates the Semantic Fluid Aggregation Module (SFAM) and the Semantic Graph Inference Module (SGIM) to collaboratively extract high-level semantic features across multiple scales and establish abstract semantic representations for accurately localizing large-area Mura defects. Specifically, SFAM leverages a global attention mechanism to extract cross-spatial semantic flows, guiding the model to focus on potential brightness anomaly regions in the image. SGIM explicitly models the semantic relationships between multi-scale features using graph convolution, enhancing the model's ability to interpret regions with blurred boundaries and ambiguous structures. To further improve the model's sensitivity to edges in regions with smooth brightness transitions, we introduce a NeighborFusion Edge Enhancement Module (NEEM). This module integrates depthwise separable convolutions with a spatial attention mechanism and introduces a CrossNorm-based feature alignment strategy to enhance spatial collaboration across feature layers. Additionally, an edge enhancement mechanism strengthens edge perception and representation, improving the model's ability to delineate blurred Mura defect boundaries while keeping computational cost low.
Extensive quantitative and qualitative experiments on three large-area Mura defect datasets constructed in this study demonstrate that SIFNet achieves excellent detection performance with only 3.92M parameters and 6.89 GFLOPs, striking an effective balance between accuracy and efficiency, and fully meeting the demands of industrial deployment.
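
As a rough illustration of why the depthwise separable convolutions mentioned for NEEM help keep the parameter budget small, the sketch below compares weight counts for a standard convolution and a depthwise separable one. The channel widths (64 in, 128 out) and the 3 x 3 kernel are assumptions chosen for illustration only; they are not taken from SIFNet, and the helper names are hypothetical.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise k x k convolution followed by a
    1 x 1 pointwise convolution (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Assumed layer shape for illustration, not a SIFNet layer.
standard = conv_params(64, 128, 3)
separable = depthwise_separable_params(64, 128, 3)
ratio = standard / separable

print(f"standard:  {standard} weights")
print(f"separable: {separable} weights")
print(f"reduction: {ratio:.1f}x")
```

For this assumed layer shape the separable form needs roughly one eighth of the weights; the actual savings in any given network depend on its channel widths and kernel sizes.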

Data Availability Statement

Data will be made available on request.

Funding

This work was supported by the YangFan Project of Guangdong Province of China under Grant 2020-05.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Wang, Z., He, J., & Chen, H. (2025). Lightweight Mura Defect Detection via Semantic Interscale Integration and Neighbor Fusion. Chinese Journal of Information Fusion, 2(3), 237–252. https://doi.org/10.62762/CJIF.2025.864944

Publisher's Note

ICCK stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and Permissions

Copyright © 2025 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
