Abstract

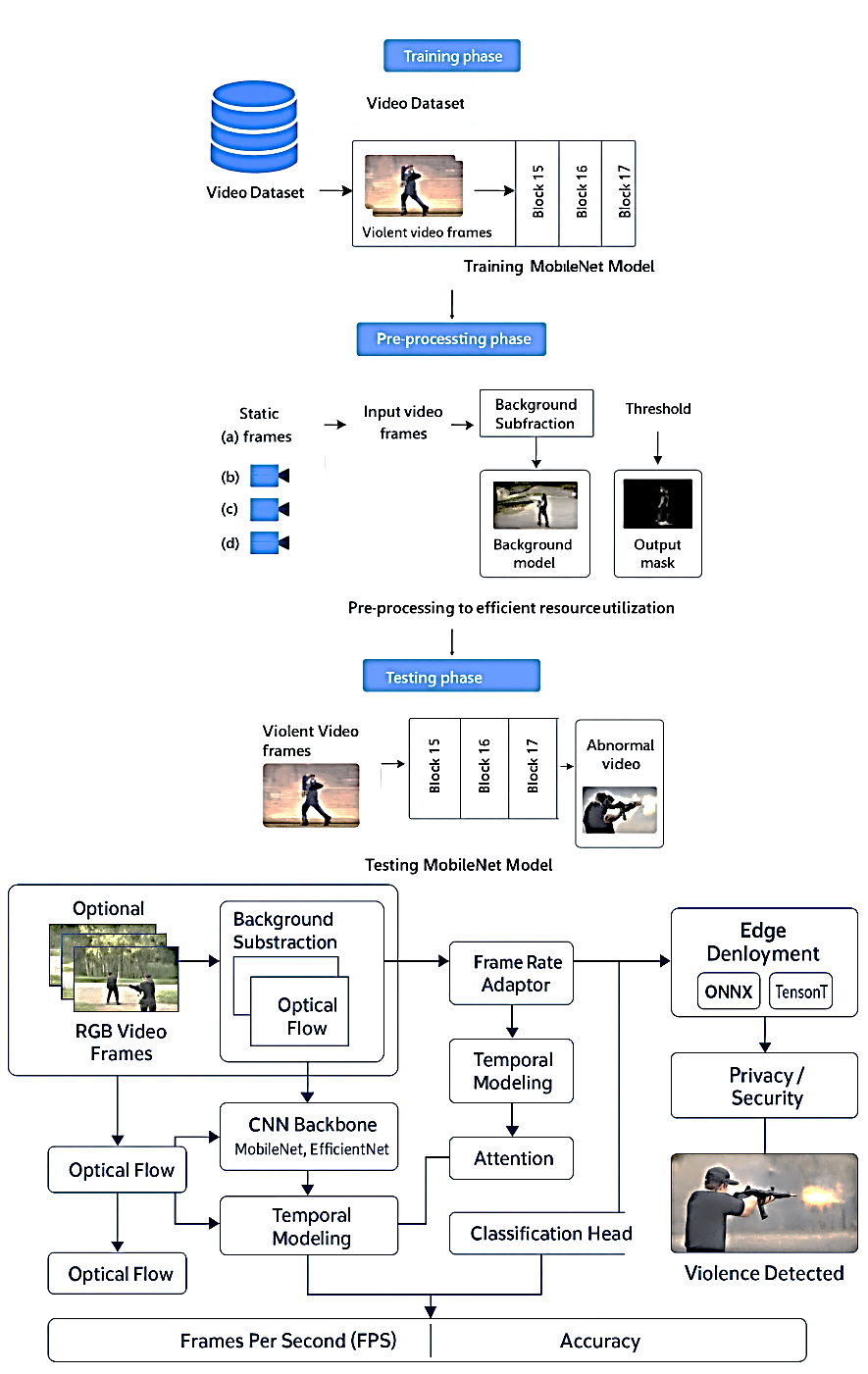

Real-time detection of violent behavior through surveillance technologies is increasingly important for public safety. This study addresses the challenge of automatically distinguishing violent from non-violent activities in continuous video streams. Traditional surveillance depends on human monitoring, which is time-consuming and error-prone, highlighting the need for intelligent systems that detect abnormal behavior accurately at low computational cost. Key difficulties include the ambiguity in defining violent actions and the reliance on large annotated datasets, which are costly to produce. Many existing approaches also demand substantial computational resources, limiting real-time deployment on resource-constrained devices. To overcome these issues, this work employs the lightweight MobileNet deep learning architecture for violence detection in surveillance videos. MobileNet is well suited to embedded devices such as the Raspberry Pi and Jetson Nano while maintaining competitive accuracy. In Python-based simulations on the Hockey Fight dataset, MobileNet is compared with AlexNet, VGG-16, and GoogLeNet. MobileNet achieved 96.66% accuracy with a loss of 0.1329, outperforming the other models in both accuracy and efficiency. These findings demonstrate MobileNet’s superior balance of precision, computational cost, and real-time feasibility, offering a robust framework for intelligent surveillance in public safety monitoring, crowd management, and anomaly detection.
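The abstract attributes MobileNet's suitability for devices such as the Raspberry Pi and Jetson Nano to its lightweight design. MobileNet is built from depthwise separable convolutions, and the saving they bring can be estimated with simple arithmetic. The Python sketch below is an illustration only, not the author's code: the layer size (3×3 kernel, 256 input and output channels, 14×14 output map) is a hypothetical choice, bias terms are ignored, and stride 1 with same padding is assumed.

```python
# Illustrative cost comparison: standard vs depthwise separable convolution.
# The layer sizes used below are hypothetical; bias terms are ignored.

def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of one k x k standard convolution layer."""
    return k * k * c_in * c_out

def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k depthwise conv (one filter per input channel)
    followed by a 1 x 1 pointwise conv that mixes channels."""
    return k * k * c_in + c_in * c_out

def mult_adds(params: int, h: int, w: int) -> int:
    """Multiply-adds for a stride-1, same-padded layer with an h x w output:
    each weight is applied once per output position."""
    return params * h * w

if __name__ == "__main__":
    k, c_in, c_out, h, w = 3, 256, 256, 14, 14  # hypothetical layer
    std = standard_conv_params(k, c_in, c_out)
    sep = separable_conv_params(k, c_in, c_out)
    print(f"standard:  {std:,} params, {mult_adds(std, h, w):,} mult-adds")
    print(f"separable: {sep:,} params, {mult_adds(sep, h, w):,} mult-adds")
    # The reduction factor equals 1/c_out + 1/k**2 for both weights and mult-adds.
    print(f"ratio: {sep / std:.4f}")
```

For this hypothetical layer the separable version needs 67,840 weights instead of 589,824, roughly 8.7 times fewer, and the same factor applies to multiply-adds because both variants share the per-position spatial cost.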

Keywords

real-time violence detection

CCTV surveillance video

convolutional neural networks

VGG-16

GoogLeNet

AlexNet

MobileNet

Data Availability Statement

Data will be made available on request.

Funding

This work received no funding.

Conflicts of Interest

The author declares no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Hussain, A. (2025). Detection and Recognition of Real-Time Violence and Human Actions Recognition in Surveillance using Lightweight MobileNet Model. ICCK Journal of Image Analysis and Processing, 1(3), 125–146. https://doi.org/10.62762/JIAP.2025.839123

Publisher's Note

ICCK stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and Permissions

Copyright © 2025 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
