ICCK Transactions on Advanced Computing and Systems

ISSN: 3068-7969 (Online)



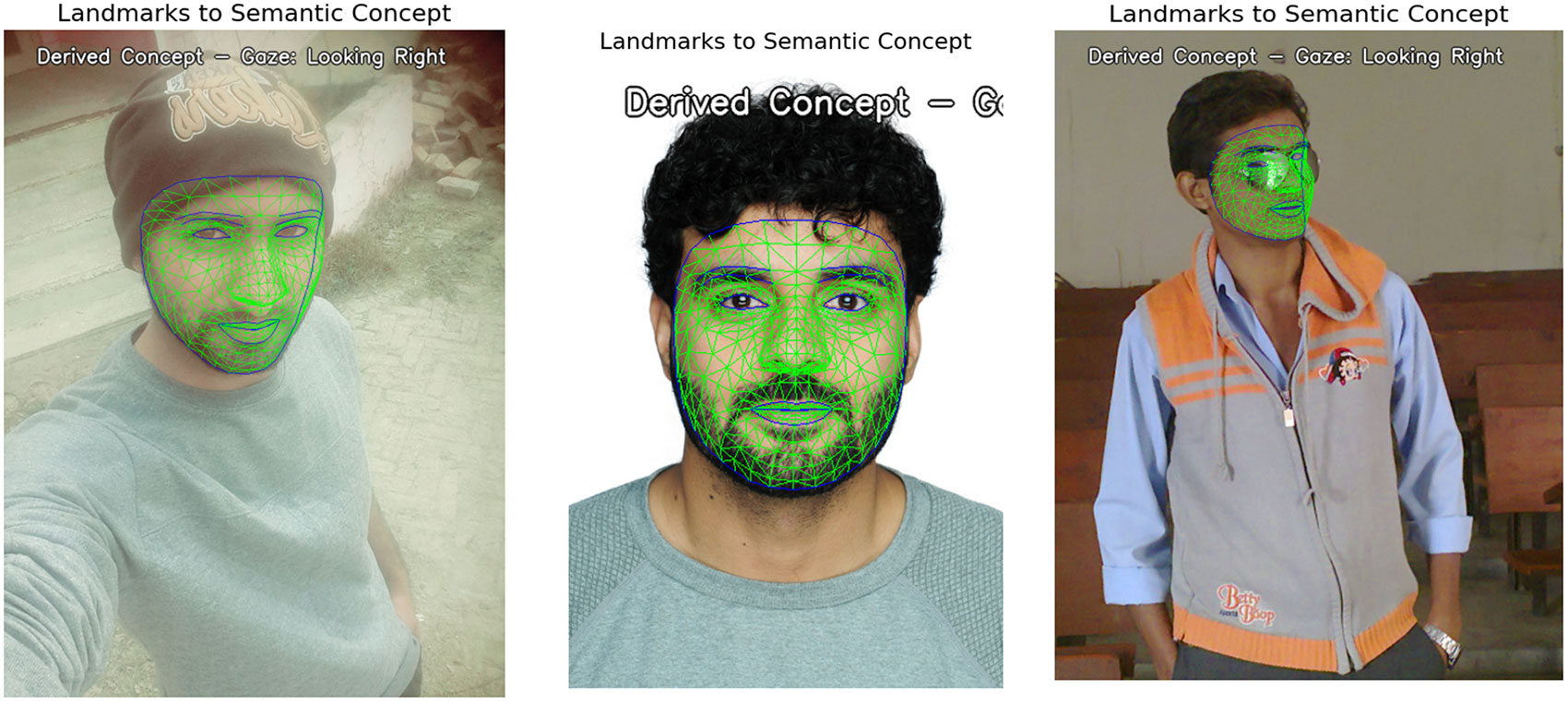

TY  - JOUR
AU  - Khalid, Muhammad Imran
AU  - Komal, Asma
AU  - Hussain, Nasir
AU  - Idrees, Muhammad
AU  - Wagan, Atif Ali
AU  - Hussain, Syed Akif
PY  - 2026
DA  - 2026/02/11
TI  - GeoGaze: A Real-time, Lightweight Gaze Estimation Framework via Geometric Landmark Analysis
JO  - ICCK Transactions on Advanced Computing and Systems
T2  - ICCK Transactions on Advanced Computing and Systems
JF  - ICCK Transactions on Advanced Computing and Systems
VL  - 2
IS  - 2
SP  - 107
EP  - 115
DO  - 10.62762/TACS.2025.798133
UR  - https://www.icck.org/article/abs/TACS.2025.798133
KW  - machine learning
KW  - deep learning
KW  - face direction
KW  - student monitoring
AB  - Gaze estimation plays a vital role in human-computer interaction, driver monitoring, and psychological analysis. While state-of-the-art appearance-based methods achieve high accuracy using deep learning, they often demand substantial computational resources, including GPU acceleration and extensive training, limiting their use in resource-constrained or real-time scenarios. This paper introduces GeoGaze, a novel, lightweight, training-free framework that infers categorical gaze direction (“Left”, “Center”, “Right”) solely from geometric analysis of facial landmarks. Leveraging the high-precision 478-point face mesh and iris landmarks provided by MediaPipe, GeoGaze computes a simple normalized iris-to-eye-corner ratio and applies intuitive thresholds, eliminating the need for model training or GPU support. Evaluated on a simulated 1,500-image dataset (SGDD-1500), GeoGaze delivers competitive directional classification accuracy while achieving real-time performance (~66 FPS on CPU), outperforming typical deep learning baselines by more than an order of magnitude in speed. These results position GeoGaze as an efficient, interpretable alternative for edge devices and applications where precise angular gaze is unnecessary and directional intent suffices.
SN  - 3068-7969
PB  - Institute of Central Computation and Knowledge
LA  - English
ER  - 

@article{Khalid2026GeoGaze,
  author    = {Muhammad Imran Khalid and Asma Komal and Nasir Hussain and Muhammad Idrees and Atif Ali Wagan and Syed Akif Hussain},
  title     = {{GeoGaze}: A Real-time, Lightweight Gaze Estimation Framework via Geometric Landmark Analysis},
  journal   = {ICCK Transactions on Advanced Computing and Systems},
  year      = {2026},
  volume    = {2},
  number    = {2},
  pages     = {107--115},
  doi       = {10.62762/TACS.2025.798133},
  url       = {https://www.icck.org/article/abs/TACS.2025.798133},
  abstract  = {Gaze estimation plays a vital role in human-computer interaction, driver monitoring, and psychological analysis. While state-of-the-art appearance-based methods achieve high accuracy using deep learning, they often demand substantial computational resources, including GPU acceleration and extensive training, limiting their use in resource-constrained or real-time scenarios. This paper introduces GeoGaze, a novel, lightweight, training-free framework that infers categorical gaze direction (``Left'', ``Center'', ``Right'') solely from geometric analysis of facial landmarks. Leveraging the high-precision 478-point face mesh and iris landmarks provided by MediaPipe, GeoGaze computes a simple normalized iris-to-eye-corner ratio and applies intuitive thresholds, eliminating the need for model training or GPU support. Evaluated on a simulated 1,500-image dataset (SGDD-1500), GeoGaze delivers competitive directional classification accuracy while achieving real-time performance ($\sim$66 FPS on CPU), outperforming typical deep learning baselines by more than an order of magnitude in speed. These results position GeoGaze as an efficient, interpretable alternative for edge devices and applications where precise angular gaze is unnecessary and directional intent suffices.},
  keywords  = {machine learning, deep learning, face direction, student monitoring},
  issn      = {3068-7969},
  publisher = {Institute of Central Computation and Knowledge}
}
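The abstract describes the core computation only at a high level: a normalized iris-to-eye-corner ratio compared against fixed thresholds to yield "Left", "Center" or "Right". The Python sketch below illustrates that idea under stated assumptions; it is not the authors' implementation. It takes horizontal pixel coordinates (iris centre plus the two eye corners, which could for example be read from the 478-point MediaPipe face mesh) as plain numbers instead of calling MediaPipe. The helper names `horizontal_iris_ratio`, `classify_ratio` and `gaze_direction`, the averaging over both eyes, and the threshold values 0.42 and 0.58 are all hypothetical. "Left" and "Right" here refer to image coordinates; whether they match the subject's left or right depends on whether the camera feed is mirrored.

```python
from typing import Sequence, Tuple

# (iris_x, corner_left_x, corner_right_x) for one eye, in image pixels.
# Assumption: "corner_left" is whichever eye corner has the smaller x-coordinate.
EyeXs = Tuple[float, float, float]

# Hypothetical thresholds for illustration only; the paper's actual
# cut-offs are not stated in this record and would need calibration.
LEFT_THRESHOLD = 0.42
RIGHT_THRESHOLD = 0.58


def horizontal_iris_ratio(iris_x: float, corner_left_x: float, corner_right_x: float) -> float:
    """Normalized iris position between the eye corners:
    0.0 at the image-left corner, 1.0 at the image-right corner."""
    width = corner_right_x - corner_left_x
    if width <= 0:
        raise ValueError("expected corner_left_x < corner_right_x")
    return (iris_x - corner_left_x) / width


def classify_ratio(ratio: float,
                   left_threshold: float = LEFT_THRESHOLD,
                   right_threshold: float = RIGHT_THRESHOLD) -> str:
    """Map a normalized ratio to a categorical direction using two thresholds."""
    if ratio < left_threshold:
        return "Left"
    if ratio > right_threshold:
        return "Right"
    return "Center"


def gaze_direction(eyes: Sequence[EyeXs], **thresholds: float) -> str:
    """Average the per-eye ratios (e.g. both eyes) and classify the mean."""
    if not eyes:
        raise ValueError("need at least one eye")
    ratios = [horizontal_iris_ratio(*eye) for eye in eyes]
    mean_ratio = sum(ratios) / len(ratios)
    return classify_ratio(mean_ratio, **thresholds)


if __name__ == "__main__":
    # Synthetic pixel coordinates, not data from SGDD-1500.
    print(gaze_direction([(100, 80, 120), (200, 180, 220)]))  # Center (ratio 0.5)
    print(gaze_direction([(84, 80, 120), (184, 180, 220)]))   # Left (ratio 0.1)
    print(gaze_direction([(116, 80, 120), (216, 180, 220)]))  # Right (ratio 0.9)
```

Because the classification step is a few arithmetic operations per frame, its cost is negligible next to landmark detection, which fits the abstract's claim that no training or GPU is required. In practice the two thresholds would likely need tuning on labelled data, and a projection onto the corner-to-corner axis (rather than raw x-coordinates) would be one way to tolerate head roll.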

Copyright © 2026 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.



Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/