Abstract

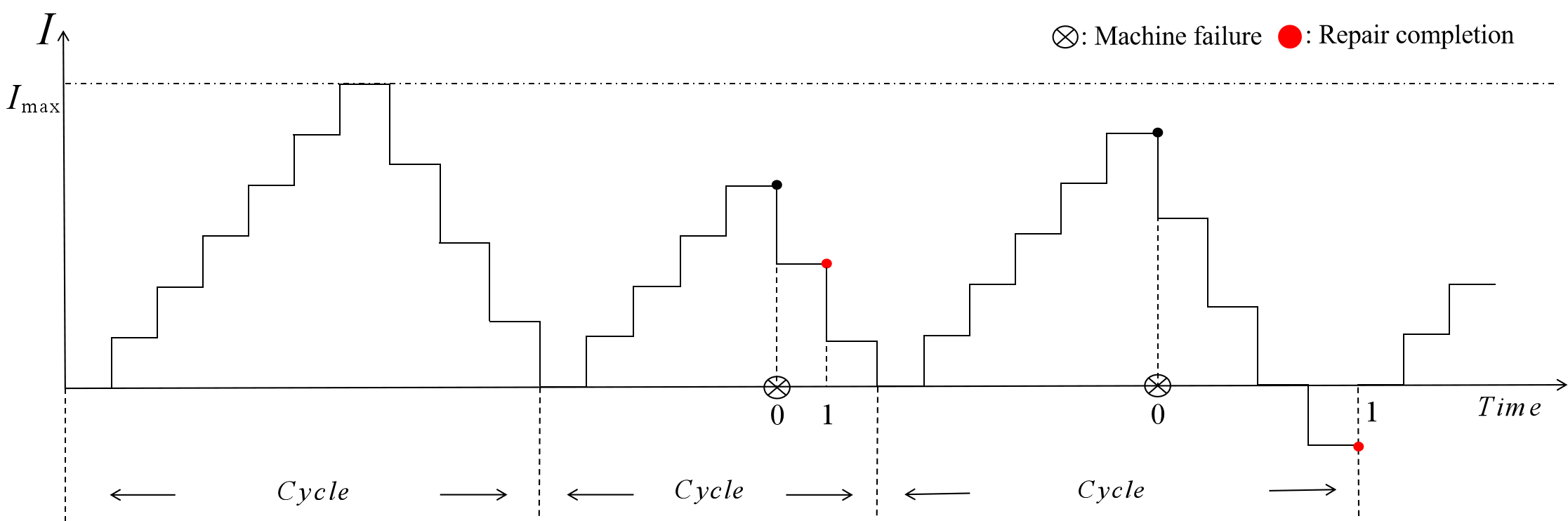

This study introduces a novel approach to production decision-making that applies Reinforcement Learning to optimize the Economic Manufacturing Quantity (EMQ) model in discrete-time production-inventory systems. A Markov Decision Process (MDP) that incorporates machine status, inventory levels, and production choices is constructed and combined with the Q-learning algorithm to derive an adaptive control method. The method adapts production decisions dynamically, balancing normal operation against shutdown for rest. Numerical simulations show that the proposed Reinforcement Learning model outperforms conventional EMQ models and steady-state probability models in both convergence speed and cost-effectiveness. The study offers a data-driven approach to optimizing production processes in smart manufacturing settings and supports the evolution of production-inventory systems from static planning to dynamic, intelligent decision-making.
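The abstract describes the method only at a high level: an MDP over machine status, inventory level, and production choices, solved with Q-learning. The Python sketch below illustrates what such a setup might look like under purely hypothetical assumptions. The machine is either working or broken. Running it produces `PROD_RATE` units but risks a breakdown with probability `P_FAIL`, while resting (shutting down) avoids that risk. Demand, repair, and cost parameters are invented for illustration. None of these names, dynamics, or values come from the study, and the code is a generic tabular Q-learning example rather than the authors' model.

```python
import random
from typing import Callable, Dict, Tuple

# Hypothetical toy parameters: assumptions for illustration, not values from the study.
MAX_INV = 10        # inventory capacity (units)
PROD_RATE = 2       # units produced in a period of normal operation
P_DEMAND = 0.7      # probability that one unit is demanded in a period
P_FAIL = 0.05       # probability of a breakdown during a production period
P_REPAIR = 0.5      # probability that a broken machine is repaired in a period
HOLD_COST = 0.5     # holding cost per unit per period
SHORT_COST = 10.0   # penalty per unit of lost (unmet) demand
PROD_COST = 2.0     # operating cost per production period

REST, PRODUCE = 0, 1
ACTIONS = (REST, PRODUCE)
State = Tuple[int, int]  # (machine_up: 1 if working else 0, inventory level)


def step(state: State, action: int, rng: random.Random) -> Tuple[State, float]:
    """Simulate one period of the toy system and return (next_state, cost)."""
    up, inv = state
    cost = 0.0
    if up and action == PRODUCE:
        inv = min(MAX_INV, inv + PROD_RATE)
        cost += PROD_COST
        if rng.random() < P_FAIL:
            up = 0  # breakdown caused by running the machine
    elif not up and rng.random() < P_REPAIR:
        up = 1  # a broken machine may be repaired, whatever action is chosen
    # Resting a working machine produces nothing but cannot cause a breakdown.
    if rng.random() < P_DEMAND:
        if inv > 0:
            inv -= 1
        else:
            cost += SHORT_COST  # lost sale
    cost += HOLD_COST * inv
    return (up, inv), cost


def greedy(q: Dict[Tuple[State, int], float], state: State) -> int:
    # max() keeps the first maximal element, so ties resolve to REST.
    return max(ACTIONS, key=lambda a: q[(state, a)])


def q_learning(steps: int = 100_000, alpha: float = 0.1, gamma: float = 0.95,
               epsilon: float = 0.1, seed: int = 0) -> Dict[Tuple[State, int], float]:
    """Tabular Q-learning with epsilon-greedy exploration; reward = -cost."""
    rng = random.Random(seed)
    q = {((up, inv), a): 0.0
         for up in (0, 1) for inv in range(MAX_INV + 1) for a in ACTIONS}
    state: State = (1, 0)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = greedy(q, state)     # exploit current estimates
        nxt, cost = step(state, action, rng)
        target = -cost + gamma * max(q[(nxt, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = nxt
    return q


def average_cost(policy: Callable[[State], int], periods: int = 20_000,
                 seed: int = 1) -> float:
    """Average cost per period of a fixed policy, estimated by simulation."""
    rng = random.Random(seed)
    state: State = (1, 0)
    total = 0.0
    for _ in range(periods):
        state, cost = step(state, policy(state), rng)
        total += cost
    return total / periods


if __name__ == "__main__":
    q = q_learning()
    print("produce when working, by inventory level:",
          {inv: greedy(q, (1, inv)) == PRODUCE for inv in range(MAX_INV + 1)})
    print("learned policy, avg cost:", round(average_cost(lambda s: greedy(q, s)), 2))
    print("always rest,    avg cost:", round(average_cost(lambda s: REST), 2))
```

Rewards are defined as negative costs so that the standard maximizing Q-learning update can be used unchanged. The always-rest baseline serves only as a sanity check that the learned policy does something sensible in this toy model.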

Keywords

reinforcement learning

economic manufacturing quantity

production inventory optimization

Q-learning

dynamic decision-making

Data Availability Statement

Data will be made available on request.

Funding

This work was supported in part by the Shijiazhuang Science and Technology Project under Grant 241790737A; in part by the Natural Science Foundation of Hebei Province under Grant G2025203034; and in part by the Shanxi Provincial Basic Research Program Youth Project under Grant 202403021212004.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Wang, R., Gong, Y., Su, P., Hu, L., & Jiang, X. (2025). Optimization and Control of Discrete-Time Production-Inventory Systems Using Reinforcement Learning. ICCK Transactions on Systems Safety and Reliability, 1(2), 98–113. https://doi.org/10.62762/TSSR.2025.621059

Publisher's Note

ICCK stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and Permissions

Institute of Central Computation and Knowledge (ICCK) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.