Next-Generation Computing Systems and Technologies

ISSN: 3070-3328 (Online)

Email: [email protected]

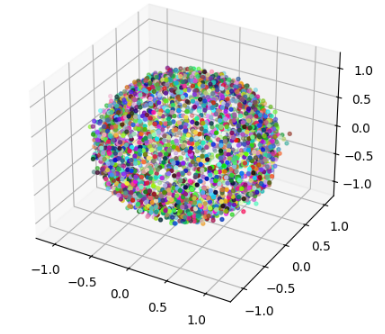

TY  - JOUR
AU  - Kar, Santosh Kumar
AU  - Subudhi, B. Ujalesh
AU  - Mishra, Brojo Kishore
AU  - Panda, Bandhan
PY  - 2025
DA  - 2025/12/22
TI  - A Comprehensive Review of Diffusion Models, Gaussian Splatting and Their Integration in Augmented and Virtual Reality
JO  - Next-Generation Computing Systems and Technologies
T2  - Next-Generation Computing Systems and Technologies
JF  - Next-Generation Computing Systems and Technologies
VL  - 1
IS  - 2
SP  - 102
EP  - 112
DO  - 10.62762/NGCST.2025.477710
UR  - https://www.icck.org/article/abs/NGCST.2025.477710
KW  - text-to-3D generation
KW  - diffusion models
KW  - gaussian splatting
KW  - augmented and virtual reality (AR/VR)
KW  - human-in-the-loop optimization
AB  - Recent progress in text-to-3D technology has substantially improved artificial intelligence (AI) applications in augmented and virtual reality (AR/VR) environments. In 2024-2025, techniques such as diffusion models, Gaussian splatting, and physics-aware models have advanced text-to-3D generation by improving visual fidelity, semantic coherence, and generation efficiency. Models such as Turbo3D, Dive3D and Instant3D are designed to make 3D generation faster by improving how diffusion models operate. Other frameworks, such as LAYOUTDREAMER, PhiP-G and CompGS, focus on creating well-organized, structured scenes. Dream Reward and Coheren Dream use human feedback and information from multiple types of data to improve the 3D results so that they match people's expectations. Despite these improvements, major challenges remain. Current text-to-3D methods need a lot of computing power, which makes them difficult to deploy at large scale or in real-time AR/VR applications. Multi-view inconsistencies and the absence of a standard benchmark make it difficult to compare methods fairly. Without combining text, physics, and spatial logic, 3D scenes look less realistic and natural interaction with objects is difficult to achieve. This review examines the latest advancements in text-to-3D generation, looking closely at how these methods are designed, optimized and customized for different application areas. It points out probable future research directions: faster and smaller 3D generation methods, rendering that understands real-world physics, human guidance of the model during generation, and common standards for fair evaluation. The study aims to explain the current progress, innovative ideas and challenges faced by AI in creating 3D content for AR/VR.
SN  - 3070-3328
PB  - Institute of Central Computation and Knowledge
LA  - English
ER  -

@article{Kar2025A,
  author = {Santosh Kumar Kar and B. Ujalesh Subudhi and Brojo Kishore Mishra and Bandhan Panda},
  title = {A Comprehensive Review of Diffusion Models, Gaussian Splatting and Their Integration in Augmented and Virtual Reality},
  journal = {Next-Generation Computing Systems and Technologies},
  year = {2025},
  volume = {1},
  number = {2},
  pages = {102--112},
  doi = {10.62762/NGCST.2025.477710},
  url = {https://www.icck.org/article/abs/NGCST.2025.477710},
  abstract = {Recent progress in text-to-3D technology has substantially improved artificial intelligence (AI) applications in augmented and virtual reality (AR/VR) environments. In 2024-2025, techniques such as diffusion models, Gaussian splatting, and physics-aware models have advanced text-to-3D generation by improving visual fidelity, semantic coherence, and generation efficiency. Models such as Turbo3D, Dive3D and Instant3D are designed to make 3D generation faster by improving how diffusion models operate. Other frameworks, such as LAYOUTDREAMER, PhiP-G and CompGS, focus on creating well-organized, structured scenes. Dream Reward and Coheren Dream use human feedback and information from multiple types of data to improve the 3D results so that they match people's expectations. Despite these improvements, major challenges remain. Current text-to-3D methods need a lot of computing power, which makes them difficult to deploy at large scale or in real-time AR/VR applications. Multi-view inconsistencies and the absence of a standard benchmark make it difficult to compare methods fairly. Without combining text, physics, and spatial logic, 3D scenes look less realistic and natural interaction with objects is difficult to achieve. This review examines the latest advancements in text-to-3D generation, looking closely at how these methods are designed, optimized and customized for different application areas. It points out probable future research directions: faster and smaller 3D generation methods, rendering that understands real-world physics, human guidance of the model during generation, and common standards for fair evaluation. The study aims to explain the current progress, innovative ideas and challenges faced by AI in creating 3D content for AR/VR.},
  keywords = {text-to-3D generation, diffusion models, gaussian splatting, augmented and virtual reality (AR/VR), human-in-the-loop optimization},
  issn = {3070-3328},
  publisher = {Institute of Central Computation and Knowledge}
}

Copyright © 2025 by the Author(s). Published by Institute of Central Computation and Knowledge. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/icck/